Integrating Multi-Omics Data in Systems Biology: From Foundational Concepts to Clinical Applications

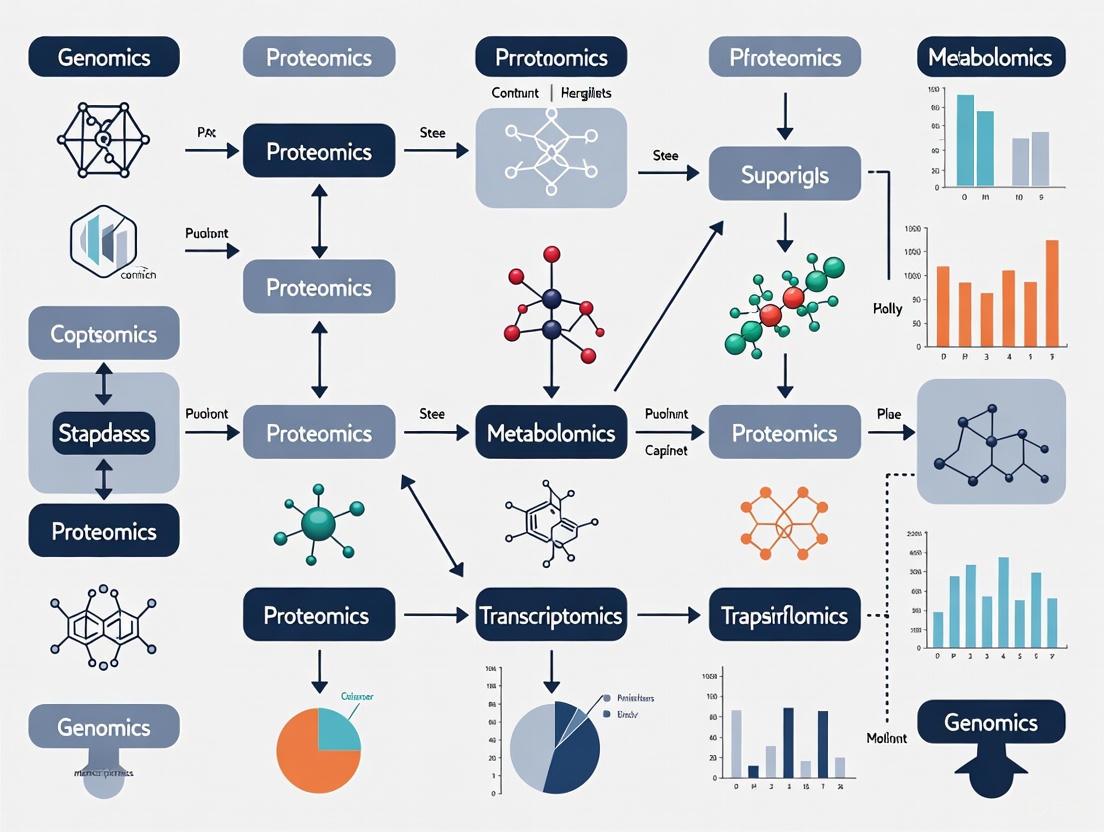



Abstract

This article provides a comprehensive overview of the strategies and computational methods for integrating multi-omics data within a systems biology framework. Aimed at researchers, scientists, and drug development professionals, it explores the foundational principles of biological networks, details cutting-edge methodological approaches including AI and graph neural networks, and addresses critical challenges in data harmonization and computational scalability. Further, it validates these strategies through comparative analysis of their performance in real-world applications like drug discovery and precision medicine, offering a roadmap for translating complex datasets into actionable biological insights and therapeutic breakthroughs.

The Systems Biology Foundation: Unraveling Biological Complexity with Multi-Omics Networks

From Single-Omics Silos to a Holistic Multi-Omics View in Systems Biology

The study of biological systems has evolved from a reductionist approach, focusing on individual molecular components, to a holistic perspective that considers the complex interactions within entire systems. This paradigm shift has been propelled by the advent of omics technologies, which enable comprehensive profiling of cellular molecules at various levels, including the genome, transcriptome, proteome, metabolome, and epigenome [1]. While stand-alone omics approaches provide valuable insights into specific molecular layers, they offer a restricted viewpoint and lack the necessary information for a complete understanding of dynamic biological processes [1]. Multi-omics integration addresses this limitation by simultaneously examining different molecular layers to provide a holistic view of the biological system, thereby unraveling the relationships between different biomolecules and their interactions [1].

In systems biology, integrating multi-omics data is fundamental to constructing comprehensive models of disease mechanisms, identifying potential diagnostic markers and therapeutic targets, and understanding the network of biological pathways involved in disease etiology and progression [1] [2]. Biological systems run on complex biochemical processes involving thousands of molecules, and multi-omics approaches can shed light on the fundamental causes of diseases, their functional consequences, and the relevant interactions [1]. By enabling systems-level analysis, multi-omics integration helps identify key regulatory nodes and pathways that could be targeted for intervention, paving the way for personalized medicine and improved healthcare outcomes [1].

Multi-Omics Integration Strategies and Methodologies

Computational Frameworks for Integration

The integration of multi-omics data presents significant computational challenges due to the inherent differences in data structure, scale, and noise characteristics across different omics layers [3] [2]. Sophisticated computational tools and methodologies have been developed to address these challenges, which can be broadly categorized based on the nature of the data being integrated and the underlying algorithmic approaches [3].

A key distinction in integration strategies is whether the tool is designed for matched (profiled from the same cell) or unmatched (profiled from different cells) multi-omics data [3]. Matched integration, also known as vertical integration, leverages the cell itself as an anchor to bring different omics layers together [3]. In contrast, unmatched or diagonal integration requires projecting cells into a co-embedded space or non-linear manifold to find commonality between cells across different omics measurements [3].
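As a minimal sketch of what "the cell as an anchor" means in matched integration, the example below joins two per-cell tables on their shared barcodes. The barcodes, feature values, and the `match_cells` helper are hypothetical and only illustrate the idea; real tools operate on sparse matrices and perform far more than a key join.

```python
def match_cells(rna, atac):
    """Join two per-cell modality tables on their shared cell barcodes."""
    shared = sorted(set(rna) & set(atac))
    return {bc: {"rna": rna[bc], "atac": atac[bc]} for bc in shared}

# Hypothetical toy profiles keyed by cell barcode.
rna = {"AAAC": [5, 0, 2], "AAAG": [1, 3, 0], "AACT": [0, 0, 7]}
atac = {"AAAC": [1, 0], "AACT": [0, 1], "AAGG": [1, 1]}

# Cells measured in both modalities can be integrated vertically.
matched = match_cells(rna, atac)

# Cells seen in only one modality have no anchor and would need
# an unmatched (diagonal) method instead.
rna_only = sorted(set(rna) - set(atac))
```

Cells without a partner in the other modality are exactly the case where a co-embedding approach becomes necessary.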

Table 1: Categorization of Multi-Omics Integration Tools

| Integration Type | Tool Name | Year | Methodology | Omic Modalities |
|---|---|---|---|---|
| Matched Integration | Seurat v4 | 2020 | Weighted nearest-neighbour | mRNA, spatial coordinates, protein, accessible chromatin, microRNA [3] |
| Matched Integration | MOFA+ | 2020 | Factor analysis | mRNA, DNA methylation, chromatin accessibility [3] |
| Matched Integration | totalVI | 2020 | Deep generative | mRNA, protein [3] |
| Matched Integration | SCENIC+ | 2022 | Unsupervised identification model | mRNA, chromatin accessibility [3] |
| Unmatched Integration | Seurat v3 | 2019 | Canonical correlation analysis | mRNA, chromatin accessibility, protein, spatial [3] |
| Unmatched Integration | GLUE | 2022 | Variational autoencoders | Chromatin accessibility, DNA methylation, mRNA [3] |
| Unmatched Integration | LIGER | 2019 | Integrative non-negative matrix factorization | mRNA, DNA methylation [3] |
| Unmatched Integration | StabMap | 2022 | Mosaic data integration | mRNA, chromatin accessibility [3] |

From a methodological perspective, integration approaches can be classified into early (concatenation-based), intermediate (transformation-based), and late (model-based) integration [4]. Early integration involves combining raw datasets from multiple omics upfront, while intermediate integration transforms individual omics data into lower-dimensional representations before integration. Late integration involves analyzing each omics dataset separately and then combining the results [5] [4]. Each approach has distinct advantages and limitations concerning its ability to capture interactions between omics layers and its computational complexity.
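The three strategies can be contrasted with a small sketch. Everything here is illustrative: the data are made up, the per-sample mean is only a toy stand-in for a real transformation such as PCA or factor analysis, and averaging scores is just one simple way to combine separately obtained results.

```python
from statistics import mean

def early_integration(modalities):
    """Concatenate the feature vectors of every modality, sample by sample."""
    n_samples = len(next(iter(modalities.values())))
    return [
        [x for name in sorted(modalities) for x in modalities[name][i]]
        for i in range(n_samples)
    ]

def intermediate_integration(modalities, reducer=mean):
    """Reduce each modality to a compact representation, then concatenate.

    `reducer` is a toy stand-in (per-sample mean) for a real
    dimensionality-reduction step.
    """
    n_samples = len(next(iter(modalities.values())))
    return [
        [reducer(modalities[name][i]) for name in sorted(modalities)]
        for i in range(n_samples)
    ]

def late_integration(per_modality_scores):
    """Combine results computed separately per modality (here: mean score)."""
    n_samples = len(next(iter(per_modality_scores.values())))
    return [
        mean(scores[i] for scores in per_modality_scores.values())
        for i in range(n_samples)
    ]

# Hypothetical data: two samples, two modalities with different feature counts.
modalities = {
    "rna": [[1.0, 3.0], [2.0, 2.0]],
    "protein": [[4.0, 6.0, 8.0], [1.0, 1.0, 1.0]],
}
early = early_integration(modalities)
intermediate = intermediate_integration(modalities)
late = late_integration({"rna": [0.9, 0.2], "protein": [0.7, 0.4]})
```

Note how early integration keeps every raw feature (and therefore the highest dimensionality), while the other two strategies trade cross-layer detail for smaller, more comparable inputs.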

Protocol for Multi-Omics Integration

A comprehensive protocol for multi-omics integration involves a systematic process from initial problem formulation to biological interpretation of results [5]. The following workflow outlines the key steps:

Experimental Design and Sample Preparation

A high-quality, well-thought-out experimental design is paramount for successful multi-omics studies [2]. This includes careful consideration of samples or sample types, selection of appropriate controls, management of external variables, required sample biomass, number of biological and technical replicates, and sample preparation and storage protocols [2]. Ideally, multi-omics data should be generated from the same set of samples to allow for direct comparison under identical conditions, though this is not always feasible due to limitations in sample biomass, access, or financial resources [2].

Sample collection, processing, and storage requirements must be carefully considered as they significantly impact the quality and compatibility of multi-omics data. For instance, blood, plasma, or tissues are excellent bio-matrices for generating multi-omics data as they can be quickly processed and frozen to prevent rapid degradation of RNA and metabolites [2]. In contrast, formalin-fixed paraffin-embedded (FFPE) tissues are compatible with genomic studies but are traditionally incompatible with transcriptomics and proteomics due to formalin-induced RNA degradation and protein cross-linking [2].

Data Generation and Preprocessing

Multi-omics data generation leverages high-throughput technologies such as next-generation sequencing for genomics and transcriptomics, and mass spectrometry-based approaches for proteomics, metabolomics, and lipidomics [1]. Recent technological advances have enabled single-cell and spatial resolution across various omics layers, providing unprecedented insights into cellular heterogeneity and spatial organization [6] [1].

Data preprocessing and quality control are critical steps that include normalization, batch effect correction, missing value imputation, and feature selection. Each omics dataset has unique characteristics requiring modality-specific preprocessing approaches. For example, single-cell RNA-seq data requires specific normalization and scaling to account for varying sequencing depth across cells, while proteomics data may require normalization based on total ion current or reference samples [3] [6].
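To make the sequencing-depth point concrete, here is a minimal sketch of library-size normalization followed by a log transform. The target sum of 10,000 and the function name are assumptions chosen for illustration; production pipelines typically rely on dedicated single-cell toolkits.

```python
import math

def normalize_log1p(counts, target_sum=10_000):
    """Scale each cell's counts to `target_sum`, then apply log(1 + x)."""
    normalized = []
    for cell in counts:
        depth = sum(cell)
        if depth == 0:
            # Empty cells carry no information; keep them as zeros.
            normalized.append([0.0] * len(cell))
            continue
        scale = target_sum / depth
        normalized.append([math.log1p(c * scale) for c in cell])
    return normalized

# Hypothetical counts: cells 1 and 2 have the same composition
# but a tenfold difference in sequencing depth.
counts = [[10, 30, 60], [100, 300, 600], [0, 0, 0]]
norm = normalize_log1p(counts)
```

After normalization the first two cells have identical profiles, which is the intended effect: differences in depth no longer masquerade as differences in expression.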

Integration Method Selection and Biological Interpretation

The selection of an appropriate integration method depends on multiple factors, including the experimental design (matched vs. unmatched), data types, biological question, and computational resources. As shown in Table 1, various tools are optimized for specific data configurations and analytical tasks.

Following integration, biological interpretation involves extracting meaningful insights from the integrated data. This may include identifying multi-omics biomarkers, elucidating regulatory networks, or uncovering novel biological mechanisms. Pathway analysis, gene set enrichment analysis, and network-based approaches are commonly used for biological interpretation [7] [8].
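One of the simplest forms of pathway analysis is an over-representation test based on the hypergeometric distribution. The sketch below implements that test only; it is not gene set enrichment analysis proper, which uses ranked statistics. The gene counts are invented for illustration.

```python
from math import comb

def enrichment_p_value(n_universe, n_pathway, n_selected, n_overlap):
    """Upper-tail hypergeometric probability of seeing at least `n_overlap`
    pathway genes among `n_selected` genes drawn from `n_universe`."""
    upper = min(n_pathway, n_selected)
    total = comb(n_universe, n_selected)
    tail = sum(
        comb(n_pathway, k) * comb(n_universe - n_pathway, n_selected - k)
        for k in range(n_overlap, upper + 1)
    )
    return tail / total

# Hypothetical case: 20 measured genes, a 5-gene pathway,
# 4 selected biomarkers of which 3 belong to the pathway.
p = enrichment_p_value(n_universe=20, n_pathway=5, n_selected=4, n_overlap=3)
```

In practice the resulting p-values would be corrected for multiple testing across all pathways examined.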

Successful multi-omics studies require both wet-lab reagents for experimental work and computational resources for data analysis. The following table outlines key components of the multi-omics toolkit.

Table 2: Essential Research Reagent Solutions and Computational Tools for Multi-Omics Studies

| Category | Item | Function/Application |
|---|---|---|
| Wet-Lab Reagents | Single-cell isolation reagents (MACS, FACS) | High-throughput cell separation for single-cell omics studies [6] |
| Wet-Lab Reagents | Cell barcoding reagents | Enables multiplexing of samples in single-cell sequencing workflows [6] |
| Wet-Lab Reagents | Whole-genome amplification kits | Amplifies picogram quantities of DNA from single cells for genomic analysis [6] |
| Wet-Lab Reagents | Template-switching oligos (TSOs) | Facilitates full-length cDNA library construction in scRNA-seq [6] |
| Wet-Lab Reagents | Mass spectrometry reagents | Enables high-throughput proteomic, metabolomic, and lipidomic profiling [1] |
| Computational Tools | Seurat suite | Comprehensive toolkit for single-cell multi-omics integration and analysis [3] |
| Computational Tools | MOFA+ | Factor analysis framework for multi-omics data integration [3] |
| Computational Tools | MINGLE | Network-based integration and visualization of multi-omics data [7] [8] |
| Computational Tools | Flexynesis | Deep learning toolkit for bulk multi-omics data integration [9] |
| Computational Tools | scGPT | Foundation model for single-cell multi-omics analysis [10] |

Application Note: Network-Based Integration in Glioma Research

Gliomas are highly heterogeneous tumors with generally poor prognoses. Leveraging multi-omics data and network analysis holds great promise in uncovering crucial signatures and molecular relationships that elucidate glioma heterogeneity [7] [8]. This application note describes a comprehensive framework for identifying glioma-type-specific biomarkers through innovative variable selection and integrated network visualization using MINGLE (Multi-omics Integrated Network for GraphicaL Exploration) [8].

Methodology and Workflow

The MINGLE framework employs a two-step approach for variable selection using sparse network estimation across various omics datasets, followed by integration of distinct multi-omics information into a single network [8]. The workflow enables the identification of underlying relations through innovative integrated visualization, facilitating the discovery of molecular relationships that reflect glioma heterogeneity [8].

Experimental Protocol

Sample Preparation and Data Generation

- Patient Cohort Selection: Group patients based on the latest glioma classification guidelines [8].

- Sample Collection: Collect tumor tissues and matched normal controls where possible, with rapid freezing to preserve biomolecular integrity [2].

- Multi-Omics Profiling:

  - Genomics: Perform whole-genome or whole-exome sequencing to identify genetic variants [1].

  - Transcriptomics: Conduct RNA-seq to profile gene expression patterns [1].

  - Epigenomics: Implement ATAC-seq or DNA methylation profiling to assess chromatin accessibility and methylation states [3].

  - Proteomics: Employ mass spectrometry-based proteomics to quantify protein abundance [1].

Data Preprocessing

- Genomics: Variant calling, annotation, and filtering using standardized pipelines.

- Transcriptomics: Quality control, adapter trimming, read alignment, and gene expression quantification.

- Epigenomics: Peak calling for ATAC-seq data or beta-value calculation for methylation data.

- Proteomics: Peak detection, alignment, and normalization using specialized proteomics software.

Network Integration and Visualization with MINGLE

- Input Data Preparation: Format preprocessed omics data into appropriate matrices for MINGLE input [8].

- Variable Selection: Apply sparse network estimation to identify significant variables across omics datasets [8].

- Network Integration: Execute MINGLE to merge distinct multi-omics information into a single network [8].

- Visualization and Interpretation: Utilize MINGLE's visualization capabilities to explore integrated networks and identify biologically relevant patterns [8].
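The variable-selection and merging steps can be pictured with the following sketch. It is not MINGLE's implementation or API: it assumes the sparse per-omics networks have already been estimated and are available as edge lists, and the gene names and helper functions are purely illustrative.

```python
def select_variables(edges):
    """Keep only variables that retain at least one edge in the sparse network."""
    return {node for u, v in edges for node in (u, v)}

def merge_layers(layer_edges):
    """Merge per-omics edge lists into one network, recording which
    omics layers support each (undirected) edge."""
    merged = {}
    for layer, edges in layer_edges.items():
        for u, v in edges:
            key = tuple(sorted((u, v)))  # treat (u, v) and (v, u) as one edge
            merged.setdefault(key, set()).add(layer)
    return merged

# Hypothetical sparse networks, one edge list per omics layer.
layer_edges = {
    "transcriptomics": [("EGFR", "PTEN"), ("IDH1", "TP53")],
    "proteomics": [("PTEN", "EGFR"), ("EGFR", "PIK3CA")],
}
selected = {layer: select_variables(e) for layer, e in layer_edges.items()}
network = merge_layers(layer_edges)
```

Edges supported by more than one layer are natural starting points when exploring the integrated network visually.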

Key Findings and Applications

The application of MINGLE to glioma multi-omics data led to the identification of variables potentially serving as glioma-type-specific biomarkers [8]. The integration of multi-omics data into a single network facilitated the discovery of molecular relationships that reflect glioma heterogeneity, supporting biological interpretation and potentially informing therapeutic strategies [8]. The framework successfully identified subnetworks of genes and their products associated with different glioma types, with these biomarkers showing alignment with glioma type stratification and patient survival outcomes [7].

Emerging Trends and Future Perspectives

The field of multi-omics integration is rapidly evolving, with several emerging trends shaping its future trajectory. Foundation models, originally developed for natural language processing, are now transforming single-cell omics analysis [10]. Models such as scGPT, pretrained on over 33 million cells, demonstrate exceptional cross-task generalization capabilities, enabling zero-shot cell type annotation and perturbation response prediction [10]. Similarly, multimodal integration approaches, including pathology-aligned embeddings and tensor-based fusion, harmonize transcriptomic, epigenomic, proteomic, and spatial imaging data to delineate multilayered regulatory networks across biological scales [10].

Another significant advancement is the development of comprehensive toolkits like Flexynesis, which streamlines deep learning-based bulk multi-omics data integration for precision oncology [9]. Flexynesis provides modular architectures for various modeling tasks, including regression, classification, and survival analysis, making deep learning approaches more accessible to researchers with varying computational expertise [9].

As the field progresses, challenges remain in technical variability across platforms, limited model interpretability, and gaps in translating computational insights into clinical applications [10]. Overcoming these hurdles will require standardized benchmarking, multimodal knowledge graphs, and collaborative frameworks that integrate artificial intelligence with domain expertise [10]. Continued development and refinement of multi-omics integration strategies should deepen our understanding of biological systems and accelerate the translation of research findings into clinical applications.

In systems biology, complex biological processes are understood not just by studying individual components, but by examining the intricate web of relationships between them. Biological networks provide a powerful framework for this integration, representing biological entities as nodes and their interactions as edges [11]. The rise of high-throughput technologies has significantly increased the availability of molecular data, making network-based approaches essential for tackling challenges in bioinformatics and multi-omics research [12]. Networks facilitate the modeling of complicated molecular mechanisms through graph theory, machine learning, and deep learning techniques, enabling researchers to move from a siloed view of omics data to a holistic, systems-level perspective [12].

Core network types used in multi-omics integration include Protein-Protein Interaction (PPI) networks, Gene Regulatory Networks (GRNs), and Metabolic Networks. Each network type captures a different layer of biological organization, and their integration allows researchers to reveal new cell subtypes, cell interactions, and interactions between different omic layers that lead to gene regulatory and phenotypic outcomes [3]. Since each omic layer is causally tied to the next, multi-omics integration serves to disentangle these relationships to properly capture cell phenotype [3].

Table 1: Core Biological Network Types in Multi-omics Integration

| Network Type | Nodes Represent | Edges Represent | Primary Function in Multi-omics Integration |
|---|---|---|---|
| Protein-Protein Interaction (PPI) | Proteins | Physical or functional interactions between proteins | Integrates proteomic data to reveal cellular functions and complexes |
| Gene Regulatory Network (GRN) | Genes | Regulatory interactions (e.g., transcription factor binding) | Connects genomic, epigenomic, and transcriptomic data to model expression control |
| Metabolic Network | Metabolites | Biochemical reactions | Integrates metabolomic data to model metabolic fluxes and pathways |

Graph Theory Foundations

Biological networks are computationally represented using graph theory principles. An undirected graph $G$ is defined as a pair $(V, E)$, where $V$ is a set of vertices (nodes) and $E$ is a set of edges (connections) between them [11]. In directed graphs, edges have direction, representing information flow or causal relationships, which is particularly useful for regulatory and metabolic pathways [11]. Weighted graphs assign numerical values to edges, often representing the strength, reliability, or type of interaction, which is crucial for capturing the varying relevance of different biological connections [11].
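These definitions map directly onto a simple data structure. The sketch below stores a weighted graph as a nested dictionary and builds both an undirected and a directed version from the same edge list; the node names and weights are placeholders, and libraries such as igraph or NetworkX would normally be used instead of hand-rolled code.

```python
def build_graph(edges, directed=False):
    """Return an adjacency map {node: {neighbor: weight}} from (u, v, w) triples."""
    adjacency = {}
    for u, v, weight in edges:
        adjacency.setdefault(u, {})[v] = weight
        adjacency.setdefault(v, {})  # make sure sink nodes are present too
        if not directed:
            adjacency[v][u] = weight  # undirected edges are symmetric
    return adjacency

# Hypothetical weighted edges, e.g. interaction confidence scores.
edges = [("A", "B", 0.9), ("B", "C", 0.4)]

undirected = build_graph(edges)
directed = build_graph(edges, directed=True)

degree = {node: len(nbrs) for node, nbrs in undirected.items()}
out_degree = {node: len(nbrs) for node, nbrs in directed.items()}
```

In the directed version node `C` has no outgoing edges, which is the kind of asymmetry that matters when edges encode regulation or reaction direction.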

High-quality data resources are essential for constructing biological networks. Experimental methods for PPI data include yeast two-hybrid (Y2H) systems, tandem affinity purification (TAP), and mass spectrometry [11]. For GRNs, protein-DNA interaction data can be sourced from databases like JASPAR and TRANSFAC [11]. Metabolic networks often leverage databases such as the Kyoto Encyclopedia of Genes and Genomes (KEGG) and BioCyc [11].

Common computational formats for representing biological networks include:

- SBML (Systems Biology Markup Language): An XML-based format for representing models of biological processes [11]

- PSI-MI (Proteomics Standards Initiative Interaction): Standard format for representing molecular interactions [11]

- BioPAX: Language for representing biological pathways [11]

Table 2: Key Databases for Biological Network Construction

| Database | Network Type | Key Features | URL |
|---|---|---|---|
| BioGRID | PPI | Curated PPI data for multiple model organisms | https://thebiogrid.org [12] |
| DrugBank | Drug-Target | Drug structures, target information, and drug-drug interactions | https://www.drugbank.ca [12] |
| KEGG | Metabolic | Comprehensive pathway database for multiple organisms | https://www.genome.jp/kegg/ [12] |
| DREAM | GRN | Gene expression time series and ground truth network structures | http://gnw.sourceforge.net [12] |
| STRING | PPI | Includes both physical and functional associations | https://string-db.org [11] |

Protein-Protein Interaction Networks

Application Notes

Protein-Protein Interaction (PPI) networks model the physical and functional relationships between proteins within a cell [12]. In these networks, nodes correspond to proteins, while edges define interactions between them [12]. PPIs are essential for almost all cellular functions, ranging from the assembly of structural components to processes such as transcription, translation, and active transport [12]. In multi-omics integration, PPI networks serve as a crucial framework for integrating proteomic data with other omics layers, helping to place genomic variants and transcriptomic changes in the context of functional protein complexes and cellular machinery.

The integration of PPI networks with other data types enables researchers to predict protein function, identify key regulatory hubs, and understand how perturbations in one molecular layer affect protein complexes and cellular functions. For example, changes in gene expression revealed by transcriptomics can be contextualized within protein interaction networks to identify disrupted complexes or pathways in disease states.

Experimental Protocol: Constructing and Analyzing PPI Networks

Objective: Build a context-specific PPI network integrated with transcriptomic data to identify dysregulated complexes in a disease condition.


Materials and Reagents:

- High-quality PPI Database (e.g., BioGRID, STRING): Provides curated protein interaction data

- RNA-seq Data: Case and control transcriptomic profiles

- Network Analysis Software: Cytoscape for visualization and analysis

- Statistical Environment: R or Python with network analysis libraries (igraph, NetworkX)

Procedure:

- Data Collection: Download PPI data for your organism of interest from BioGRID or STRING. Simultaneously, prepare transcriptomic data (RNA-seq) from disease and control samples.

- Network Filtering: Filter the PPI network to include only proteins expressed in your system of interest (e.g., detected in transcriptomic data).

- Data Integration: Map transcriptomic changes (fold-change, p-values) onto the corresponding nodes in the PPI network.

- Topological Analysis: Calculate network centrality measures (degree, betweenness centrality) for each node to identify hub proteins.

- Module Detection: Use community detection algorithms (e.g., Louvain method) to identify densely connected protein complexes.

- Differential Scoring: Combine topological importance and expression changes to prioritize key proteins and complexes.

- Functional Enrichment: Perform Gene Ontology and pathway enrichment analysis on significant modules using tools like clusterProfiler or Enrichr.
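The filtering, integration, topological, and scoring steps of this procedure can be sketched in a few lines. The protein identifiers, fold-changes, and in particular the scoring formula (normalized degree multiplied by absolute log2 fold-change) are assumptions made for illustration; the protocol does not prescribe a specific score.

```python
def filter_network(edges, expressed):
    """Keep interactions whose two partners are both detected in the system."""
    return [(a, b) for a, b in edges if a in expressed and b in expressed]

def degree(edges):
    """Count the number of interaction partners per protein."""
    deg = {}
    for a, b in edges:
        deg[a] = deg.get(a, 0) + 1
        deg[b] = deg.get(b, 0) + 1
    return deg

def prioritize(edges, log2fc):
    """Rank proteins by normalized degree times |log2 fold-change|
    (an illustrative score, not a published method)."""
    deg = degree(edges)
    max_deg = max(deg.values())
    scores = {p: (deg[p] / max_deg) * abs(log2fc.get(p, 0.0)) for p in deg}
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)

# Hypothetical PPI edges and differential-expression results.
ppi = [("P1", "P2"), ("P1", "P3"), ("P1", "P4"), ("P2", "P3"), ("P4", "P5")]
log2fc = {"P1": 1.5, "P2": -2.0, "P3": 0.2, "P4": 3.0}

expressed = set(log2fc)  # proteins with transcriptomic evidence
filtered = filter_network(ppi, expressed)
ranking = prioritize(filtered, log2fc)
```

A multiplicative score favours proteins that are both well connected and strongly changed; an additive or rank-based combination would weigh the two criteria differently.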

Validation:

- Compare identified hubs with known essential genes from databases like OGEE

- Validate key interactions using co-immunoprecipitation followed by western blotting

- Use orthogonal datasets (e.g., phosphoproteomics) to confirm regulatory importance

Gene Regulatory Networks

Application Notes

Gene Regulatory Networks (GRNs) represent the complex mechanisms that control gene expression in cells [12]. In GRNs, nodes represent genes, and directed edges represent regulatory interactions where one gene directly regulates the expression of another [12]. These networks naturally integrate genomic, epigenomic, and transcriptomic data, as transcription factor binding (often assessed through ChIP-seq) represents one layer, chromatin accessibility (ATAC-seq) another, and resulting expression changes (RNA-seq) a third.

GRNs are particularly valuable for understanding cell identity, differentiation processes, and transcriptional responses to stimuli. The structure of GRNs often reveals key transcription factors that function as master regulators of specific cell states or pathological conditions. In multi-omics integration, GRNs provide a framework for understanding how genetic variation and epigenetic modifications ultimately translate to changes in gene expression programs.

Experimental Protocol: Constructing GRNs from Multi-omics Data

Objective: Build a context-specific GRN by integrating ATAC-seq (epigenomics) and RNA-seq (transcriptomics) data to identify master regulators in cell differentiation.


Materials and Reagents:

- ATAC-seq Data: Chromatin accessibility profiles across conditions

- RNA-seq Data: Matched transcriptomic data

- TF Motif Databases: JASPAR or TRANSFAC for transcription factor binding motifs

- Computational Tools: SCENIC+ for GRN inference

- Validation Reagents: Antibodies for ChIP-seq validation of key TF binding

Procedure:

- Data Preprocessing: Process ATAC-seq data to identify accessible chromatin regions (peaks) using tools like MACS2. Process RNA-seq data to quantify gene expression.

- Region-to-Gene Mapping: Assign accessible regions to potential target genes based on genomic proximity (e.g., within 500 kb of the transcription start site) using tools like Cicero.

- TF Motif Analysis: Scan accessible regions for known transcription factor binding motifs using databases like JASPAR.

- TF Activity Inference: Identify transcription factors with both motif presence in accessible regions and correlated expression with potential targets using tools like SCENIC+.

- Network Construction: Build a directed network where edges represent predicted regulatory relationships, weighted by the strength of evidence (motif score, correlation strength).

- Topological Analysis: Identify network hubs with high out-degree (regulatory influence) as potential master regulators.

- Validation: Select top candidate regulators for experimental validation using CRISPR interference or activation (CRISPRi/CRISPRa) followed by RNA-seq to confirm regulatory relationships.
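The network construction and topological analysis steps can be illustrated as follows. This is not how SCENIC+ computes its networks: the edge weight (motif score multiplied by absolute expression correlation), the 0.3 cutoff, and all names are assumptions for a toy example.

```python
def build_grn(evidence, min_weight=0.3):
    """Build a directed, weighted GRN {tf: {target: weight}} from
    (tf, target, motif_score, correlation) records."""
    grn = {}
    for tf, target, motif_score, correlation in evidence:
        weight = motif_score * abs(correlation)  # illustrative evidence score
        if weight >= min_weight:
            grn.setdefault(tf, {})[target] = weight
    return grn

def rank_regulators(grn):
    """Order TFs by out-degree, breaking ties by total edge weight."""
    return sorted(
        grn,
        key=lambda tf: (len(grn[tf]), sum(grn[tf].values())),
        reverse=True,
    )

# Hypothetical evidence combining motif scans and expression correlation.
evidence = [
    ("TF_A", "G1", 0.9, 0.8),
    ("TF_A", "G2", 0.8, -0.7),
    ("TF_A", "G3", 0.5, 0.2),
    ("TF_B", "G1", 0.7, 0.6),
    ("TF_C", "G4", 0.4, 0.5),
]
grn = build_grn(evidence)
master_regulators = rank_regulators(grn)
```

Using the absolute correlation keeps repressive relationships in the network; the sign could be stored separately if activation and repression need to be distinguished.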

Downstream Analysis:

- Compare GRN topology between conditions to identify rewired interactions

- Integrate with genetic data to map disease-associated variants onto regulatory nodes

- Use network centrality measures to prioritize key regulators as therapeutic targets

Metabolic Networks

Application Notes

Metabolic networks represent the complete set of metabolic reactions and pathways in a biological system [12]. In these networks, nodes represent metabolites, and edges represent biochemical reactions, typically labeled with the enzyme that catalyzes the reaction [12]. Metabolic networks provide a framework for integrating genomic, transcriptomic, proteomic, and metabolomic data, as they connect gene content and expression, protein abundance, and metabolite levels through well-annotated biochemical transformations.

These networks are particularly powerful for modeling metabolic fluxes in different physiological states, predicting the effects of gene knockouts, and identifying potential drug targets in metabolic diseases or pathogens. Constraint-based reconstruction and analysis (COBRA) methods leverage genome-scale metabolic models to predict metabolic behavior under different genetic and environmental conditions.

Experimental Protocol: Building Context-Specific Metabolic Models

Objective: Construct a condition-specific metabolic network by integrating metabolomic and transcriptomic data to identify metabolic vulnerabilities in cancer cells.


Materials and Reagents:

- Reference Metabolic Reconstruction: Human-GEM or Recon3D as base model

- Transcriptomic Data: RNA-seq data from cancer and normal cells

- Metabolomic Data: LC-MS/MS based quantification of metabolites

- COBRA Toolbox: MATLAB-based toolbox for constraint-based modeling

- Flux Analysis Software: COBRApy (Python implementation)

Procedure:

- Base Model Preparation: Download a comprehensive metabolic reconstruction such as Human-GEM, which contains thousands of metabolic reactions and metabolites.

- Data Integration: Integrate transcriptomic data to define reaction constraints. Reactions associated with non-expressed genes may be constrained to zero flux.

- Gap Filling: Identify and fill gaps in the network that would prevent essential metabolic functions, using the modelEC and fillGaps functions.

- Context-Specific Model Extraction: Generate a condition-specific model using algorithms like FASTCORE, which extracts a functional subnetwork consistent with expression data.

- Constraint Definition: Incorporate metabolomic data to define additional constraints, such as ATP maintenance requirements or nutrient uptake rates.

- Flux Balance Analysis: Perform FBA to predict metabolic fluxes by optimizing an objective function (e.g., biomass production for cancer cells).

- Essentiality Analysis: Simulate gene knockouts to identify essential reactions whose disruption would inhibit cell growth or viability.

- Validation: Compare predicted essential genes with siRNA or CRISPR screening data to validate model predictions.
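The logic of expression-derived constraints and knockout simulation can be shown on a toy linear pathway without any solver. This sketch deliberately avoids the COBRA Toolbox and COBRApy APIs: for a linear chain at steady state every reaction carries the same flux, so the optimum is simply the smallest upper bound. Reaction names, bounds, the one-gene-per-reaction mapping, and the expression threshold are all hypothetical.

```python
def apply_expression_constraints(bounds, gene_of, expression, threshold=1.0):
    """Set the upper flux bound to zero for reactions whose gene is not expressed."""
    constrained = dict(bounds)
    for rxn, gene in gene_of.items():
        if expression.get(gene, 0.0) < threshold:
            constrained[rxn] = 0.0
    return constrained

def max_pathway_flux(bounds, pathway):
    """Optimal steady-state flux through a linear pathway: all reactions share
    one flux value, so the maximum equals the tightest upper bound."""
    return min(bounds[rxn] for rxn in pathway)

def essential_genes(bounds, gene_of, pathway):
    """Genes whose single knockout abolishes flux to the objective reaction."""
    essential = []
    for rxn, gene in gene_of.items():
        knocked_out = {**bounds, rxn: 0.0}
        if max_pathway_flux(knocked_out, pathway) == 0.0:
            essential.append(gene)
    return essential

# Hypothetical toy model: uptake -> R1 -> R2 -> biomass, plus an unused side reaction.
pathway = ["uptake", "R1", "R2", "biomass"]
bounds = {"uptake": 10.0, "R1": 8.0, "R2": 15.0, "biomass": 1000.0, "R_alt": 5.0}
gene_of = {"R1": "geneA", "R2": "geneB", "R_alt": "geneC"}
expression = {"geneA": 12.0, "geneB": 4.0, "geneC": 0.1}

context_bounds = apply_expression_constraints(bounds, gene_of, expression)
growth = max_pathway_flux(context_bounds, pathway)
essential = essential_genes(context_bounds, gene_of, pathway)
```

Genome-scale models contain branches and cycles, so the same questions there require a linear programming solver rather than a simple minimum.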

Advanced Applications:

- Integrate with drug databases to identify inhibitors of essential metabolic enzymes

- Compare flux distributions between conditions to identify metabolic reprogramming

- Combine with structural systems biology to predict drug binding to metabolic enzymes

Multi-omics Integration Strategies

Computational Integration Approaches

Integrating multiple biological networks presents both conceptual and computational challenges. The main integration strategies can be categorized based on whether the data originates from the same cells (matched) or different cells (unmatched) [3]. Matched integration, or vertical integration, leverages the cell itself as an anchor to bring different omics modalities together [3]. Unmatched integration, or diagonal integration, requires more sophisticated computational methods to project cells from different modalities into a shared space where commonality can be found [3].

Table 3: Multi-omics Integration Tools and Methods

| Tool Name | Integration Type | Methodology | Compatible Data Types |
|---|---|---|---|
| Seurat v4 | Matched | Weighted nearest-neighbors | mRNA, spatial coordinates, protein, chromatin accessibility [3] |
| MOFA+ | Matched | Factor analysis | mRNA, DNA methylation, chromatin accessibility [3] |
| GLUE | Unmatched | Graph variational autoencoders | Chromatin accessibility, DNA methylation, mRNA [3] |
| LIGER | Unmatched | Integrative non-negative matrix factorization | mRNA, DNA methylation [3] |
| StabMap | Mosaic | Mosaic data integration | mRNA, chromatin accessibility [3] |

Integrated Protocol: Cross-Network Analysis

Objective: Perform integrated analysis across PPI, GRN, and metabolic networks to identify master regulators and their functional targets in a disease process.


Procedure:

- Individual Network Construction: Build high-quality PPI, GRN, and metabolic networks using the protocols described in previous sections.

- Regulator Identification: From the GRN, identify master regulator transcription factors showing significant changes in regulatory activity between conditions.

- Protein Complex Mapping: Map these regulators in the PPI network to identify their direct interaction partners and potential complexes they participate in.

- Metabolic Impact Assessment: For regulators that are metabolic enzymes or regulate metabolic genes, trace their impact through the metabolic network using pathway analysis.

- Cross-Network Prioritization: Develop a scoring system that integrates:

  - Regulatory out-degree from GRN

  - Protein interaction degree from PPI

  - Metabolic impact score from metabolic network

- Experimental Design: Design multi-assay experiments to validate top candidates, including:

  - ChIP-seq for transcription factors

  - Co-immunoprecipitation for protein interactions

  - Metabolomic profiling after genetic perturbation
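One possible form of the cross-network scoring system is a weighted sum of min-max normalized metrics. The sketch below is an assumption about how such a score could be built, with equal weights and invented values; the protocol itself leaves the scoring scheme open.

```python
def min_max(values):
    """Rescale a {candidate: value} mapping to the range [0, 1]."""
    lo, hi = min(values.values()), max(values.values())
    if hi == lo:
        return {k: 0.0 for k in values}
    return {k: (v - lo) / (hi - lo) for k, v in values.items()}

def cross_network_score(grn_out_degree, ppi_degree, metabolic_impact,
                        weights=(1 / 3, 1 / 3, 1 / 3)):
    """Weighted sum of normalized metrics for candidates present in all networks."""
    layers = [min_max(grn_out_degree), min_max(ppi_degree), min_max(metabolic_impact)]
    candidates = set(grn_out_degree) & set(ppi_degree) & set(metabolic_impact)
    return {
        c: sum(w * layer[c] for w, layer in zip(weights, layers))
        for c in candidates
    }

# Hypothetical per-network metrics for three candidate regulators.
grn_out_degree = {"TF_A": 40, "TF_B": 10, "TF_C": 25}
ppi_degree = {"TF_A": 5, "TF_B": 20, "TF_C": 5}
metabolic_impact = {"TF_A": 0.9, "TF_B": 0.1, "TF_C": 0.7}

scores = cross_network_score(grn_out_degree, ppi_degree, metabolic_impact)
ranking = sorted(scores, key=scores.get, reverse=True)
```

Normalizing each metric first prevents the network with the largest raw numbers from dominating the combined score; the weights can then express how much each evidence type is trusted.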

The Scientist's Toolkit

Table 4: Essential Research Reagent Solutions for Network Biology

| Reagent/Resource | Category | Function in Network Analysis | Example Sources |

|---|---|---|---|

| BioGRID Database | Data Resource | Provides curated PPI data for network construction | https://thebiogrid.org [12] |

| Cytoscape | Software Platform | Network visualization and analysis | Cytoscape Consortium [13] |

| JASPAR Database | Data Resource | TF binding motifs for GRN construction | http://jaspar.genereg.net [11] |

| KEGG Pathway | Data Resource | Metabolic pathway data for network building | https://www.genome.jp/kegg/ [12] |

| Human-GEM | Metabolic Model | Genome-scale metabolic reconstruction | https://github.com/SysBioChalmers/Human-GEM |

| SCENIC+ | Software Tool | GRN inference from multi-omics data | https://github.com/aertslab/SCENICplus [3] |

| COBRA Toolbox | Software Tool | Constraint-based metabolic flux analysis | https://opencobra.github.io/cobratoolbox/ |

| STRING Database | Data Resource | Functional protein association networks | https://string-db.org [11] |

Biological networks provide an essential framework for multi-omics integration in systems biology research. PPI networks, GRNs, and metabolic networks each capture different aspects of biological organization, and their integrated analysis enables researchers to move from descriptive lists of molecules to mechanistic models of cellular behavior. The protocols and applications outlined in this article provide a roadmap for constructing, analyzing, and integrating these networks to extract biological insights and generate testable hypotheses. As multi-omics technologies continue to advance, network-based approaches will play an increasingly important role in translating complex datasets into meaningful biological discoveries and therapeutic interventions.

The advent of large-scale molecular profiling has fundamentally transformed cancer research, enabling a shift from isolated biological investigations to comprehensive, systems-level analyses. Multi-omics approaches integrate diverse biological data layers—including genomics, transcriptomics, epigenomics, proteomics, and metabolomics—to construct holistic models of tumor biology. This paradigm requires access to standardized, high-quality data from coordinated international efforts. Three repositories form the cornerstone of contemporary cancer multi-omics research: The Cancer Genome Atlas (TCGA), Clinical Proteomic Tumor Analysis Consortium (CPTAC), and the International Cancer Genome Consortium (ICGC), now evolved into the ICGC ARGO platform. These resources provide the foundational data driving discoveries in molecular subtyping, biomarker identification, and therapeutic target discovery [14]. For researchers in systems biology, understanding the scope, structure, and access protocols of these repositories is a critical first step in designing robust multi-omics integration strategies. This document provides detailed application notes and experimental protocols for leveraging these key resources within a thesis framework focused on multi-omics data integration.

The landscape of public cancer multi-omics data is dominated by several major initiatives, each with distinct biological emphases, scales, and data architectures. TCGA, a landmark project jointly managed by the National Cancer Institute (NCI) and the National Human Genome Research Institute (NHGRI), established the modern standard for comprehensive tumor molecular characterization. It molecularly characterized over 20,000 primary cancer and matched normal samples spanning 33 cancer types, generating over 2.5 petabytes of genomic, epigenomic, transcriptomic, and proteomic data [15]. The ICGC ARGO represents the next evolutionary phase, designed to uniformly analyze specimens from 100,000 cancer patients with high-quality, curated clinical data to address outstanding questions in oncology. As of Data Release 13, the ARGO platform includes data from 5,528 donors, with over 63,000 committed donors representing 20 tumour types [16]. CPTAC is cited as a key resource in the literature [17] [18], but the sources reviewed here do not report its current data volume.

Table 1: Key Multi-Omics Data Repositories at a Glance

| Repository | Primary Focus | Sample Scale | Key Omics Types | Primary Access Portal |

|---|---|---|---|---|

| TCGA | Pan-cancer molecular atlas of primary tumors | >20,000 primary cancer and matched normal samples [15] | Genomics, Epigenomics, Transcriptomics, Proteomics [15] | Genomic Data Commons (GDC) Data Portal [15] |

| ICGC ARGO | Translating genomic knowledge into clinical impact; high-quality clinical correlation | 5,528 donors (current release); 63,116 committed donors [16] | Genomic, Transcriptomic (analyzed against GRCh38) [16] | ICGC ARGO Data Platform [16] |

| CPTAC | Proteogenomic characterization; protein-level analysis | Not reported in the cited sources | Proteomics, Genomics, Transcriptomics [17] [18] | Not reported in the cited sources |

The repositories complement each other in their scientific emphasis. TCGA provides the foundational pan-cancer molecular map, while ICGC ARGO emphasizes clinical applicability and longitudinal data. CPTAC contributes deep proteogenomic integration, connecting genetic alterations to their functional protein-level consequences. Together, they enable researchers to move from correlation to causation in cancer biology.

Data Types and Molecular Features

Understanding the nature and limitations of each omics data type is crucial for effective integration. Each layer provides a distinct yet interconnected view of the tumor's biological state, and their integration can reveal complex mechanisms driving oncogenesis.

Table 2: Multi-Omics Data Types: Descriptions, Applications, and Considerations

| Omics Component | Description | Pros | Cons | Key Applications in Cancer Research |

|---|---|---|---|---|

| Genomics | Study of the complete set of DNA, including all genes, focusing on sequence, structure, and variation. | - Comprehensive view of genetic variation.- Identifies driver mutations, SNPs, CNVs.- Foundation for personalized medicine. | - Does not account for gene expression or regulation.- Large data volume and complexity.- Ethical concerns regarding genetic data. | - Disease risk assessment.- Identification of driver mutations.- Pharmacogenomics. |

| Transcriptomics | Analysis of RNA transcripts produced by the genome under specific conditions. | - Captures dynamic gene expression changes.- Reveals regulatory mechanisms.- Aids in understanding disease pathways. | - RNA is less stable than DNA.- Provides a snapshot, not long-term view.- Requires complex bioinformatics tools. | - Gene expression profiling.- Biomarker discovery.- Drug response studies. |

| Epigenomics | Study of heritable changes in gene expression not involving changes to the underlying DNA sequence (e.g., methylation). | - Explains regulation beyond DNA sequence.- Connects environment and gene expression.- Identifies potential drug targets. | - Changes are tissue-specific and dynamic.- Complex data interpretation.- Influenced by external factors. | - Cancer research (e.g., promoter methylation).- Developmental biology.- Environmental impact studies. |

| Proteomics | Study of the structure, function, and quantity of proteins, the main functional products of genes. | - Directly measures protein levels and modifications (e.g., phosphorylation).- Links genotype to phenotype. | - Proteins have complex structures and vast dynamic ranges.- Proteome is much larger than genome.- Difficult quantification and standardization. | - Biomarker discovery.- Drug target identification.- Functional studies of cellular processes. |

| Metabolomics | Comprehensive analysis of metabolites within a biological sample, reflecting the biochemical activity and state. | - Provides insight into metabolic pathways.- Direct link to phenotype.- Can capture real-time physiological status. | - Metabolome is highly dynamic.- Limited reference databases.- Technical variability and sensitivity issues. | - Disease diagnosis.- Nutritional studies.- Toxicology and drug metabolism. |

The true power of a multi-omics approach lies in data integration. For example, CNVs identified through genomics (such as HER2 amplification) can be correlated with transcriptomic overexpression and protein-level measurements, providing a coherent mechanistic story from DNA to functional consequence [14]. Similarly, epigenomic silencing of tumor suppressor genes via promoter methylation can be linked to reduced transcript and protein levels, revealing an alternative pathway to functional inactivation beyond mutation.

Experimental Protocols for Data Access and Preprocessing

Protocol 1: Accessing and Processing TCGA Data via the GDC Portal

Application Note: This protocol is optimized for researchers building machine learning models for pan-cancer classification or subtype discovery, leveraging the standardized MLOmics processing pipeline [19].

Data Access:

- Navigate to the Genomic Data Commons (GDC) Data Portal.

- Use the "Repository" tab to filter by program: `TCGA`.

- Select cases based on `project.program.name` and the specific `project.project_id` corresponding to desired cancer types (e.g., TCGA-BRCA for breast cancer).

- Under the "Files" tab, apply filters for `data_category` (e.g., "Transcriptome Profiling"), `data_type` (e.g., "Gene Expression Quantification"), and `experimental_strategy` (e.g., "RNA-Seq").

- Download the manifest file and use the GDC Data Transfer Tool for bulk download.
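The same portal filters can also be expressed programmatically. The sketch below only builds a request body in the nested `op`/`content` filter style used by the GDC API; it sends nothing over the network. The endpoint URL, the `files.`-prefixed field names, the returned `fields` list, and the helper name `build_gdc_file_query` are assumptions for illustration and should be checked against the current GDC API documentation before use.

```python
import json

# Assumed public endpoint for file queries; verify against the GDC API docs.
GDC_FILES_ENDPOINT = "https://api.gdc.cancer.gov/files"


def build_gdc_file_query(project_id, data_category, data_type, strategy, size=100):
    """Build a JSON-serializable query body mirroring the portal filters."""

    def is_in(field, value):
        # One filter clause: the field must match one of the listed values.
        return {"op": "in", "content": {"field": field, "value": [value]}}

    filters = {
        "op": "and",
        "content": [
            is_in("cases.project.project_id", project_id),
            is_in("files.data_category", data_category),
            is_in("files.data_type", data_type),
            is_in("files.experimental_strategy", strategy),
        ],
    }
    return {
        "filters": filters,
        "fields": "file_id,file_name,cases.submitter_id",  # illustrative selection
        "format": "JSON",
        "size": size,
    }


payload = build_gdc_file_query(
    "TCGA-BRCA",
    "Transcriptome Profiling",
    "Gene Expression Quantification",
    "RNA-Seq",
)
request_body = json.dumps(payload)  # POST this body with any HTTP client
```

For bulk transfers, the manifest plus the GDC Data Transfer Tool remains the route described in the protocol; a query like this is mainly useful for scripting cohort selection.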

Data Preprocessing for Transcriptomics (mRNA/miRNA):

- Identification: Trace data using the `experimental_strategy` field in metadata, marked as "mRNA-Seq" or "miRNA-Seq". Verify `data_category` is "Transcriptome Profiling".

- Platform Determination: Identify the experimental platform from metadata (e.g., "Illumina Hi-Seq").

- Conversion: For RNA-Seq data, use the `edgeR` package to convert scaled gene-level RSEM estimates into FPKM values [19].

- Filtering: Remove non-human miRNA expressions using annotations from miRBase. Eliminate features with zero expression in >10% of samples or undefined values (N/A).

- Transformation: Apply a logarithmic transformation (log2(FPKM+1)) to normalize the data distribution.
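The filtering and transformation steps for transcriptomics can be sketched in a few lines. This is a minimal illustration on a toy matrix, assuming FPKM values are already available as a gene-to-samples mapping; the function name and the representation of undefined values as `None` or NaN are choices made for this example, not part of the MLOmics pipeline.

```python
import math


def filter_and_log_transform(fpkm, max_zero_fraction=0.10):
    """Drop unreliable features, then apply log2(FPKM + 1).

    fpkm: dict mapping gene -> list of per-sample FPKM values
          (None or NaN marks an undefined value).
    """
    kept = {}
    for gene, values in fpkm.items():
        # Remove features containing any undefined value.
        if any(v is None or math.isnan(v) for v in values):
            continue
        # Remove features with zero expression in more than 10% of samples.
        zero_fraction = sum(1 for v in values if v == 0) / len(values)
        if zero_fraction > max_zero_fraction:
            continue
        kept[gene] = [math.log2(v + 1) for v in values]
    return kept


# Toy matrix with 10 samples per gene (values are illustrative only).
toy = {
    "GENE_A": [3.0] * 10,                    # kept
    "GENE_B": [0.0, 0.0] + [5.0] * 8,        # 20% zeros -> removed
    "GENE_C": [1.0, None] + [2.0] * 8,       # undefined value -> removed
    "GENE_D": [0.0] + [7.0] * 9,             # exactly 10% zeros -> kept
}
processed = filter_and_log_transform(toy)
```

The threshold is applied as "more than 10%", so a feature with exactly 10% zeros is retained; adjust the comparison if a stricter rule is intended.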

Data Preprocessing for Genomics (CNV):

- Identification: Examine metadata for key descriptions like "Calls made after normal contamination correction and CNV removal using thresholds" to identify CNV alteration files.

- Filtering: Retain only somatic variants marked as "somatic" and filter out germline mutations.

- Annotation: Use the `biomaRt` package to annotate recurrent aberrant genomic regions with gene information [19].

Data Preprocessing for Epigenomics (DNA Methylation):

- Region Identification: Map methylation regions to genes using metadata descriptions (e.g., "Average methylation beta-values of promoters").

- Normalization: Perform median-centering normalization using the `limma` R package to adjust for technical biases [19].

- Promoter Selection: For genes with multiple promoters, select the promoter with the lowest methylation levels in normal tissues as the representative feature.

Dataset Construction for Machine Learning:

- Feature Alignment: Resolve gene naming format mismatches (e.g., between different reference genomes) and identify the intersection of features present across all selected cancer types. Apply z-score normalization.

- Feature Selection (Optional): For high-dimensional data, perform multi-class ANOVA to identify genes with significant variance across cancer types. Apply Benjamini-Hochberg (BH) correction to control the False Discovery Rate (FDR) and rank features by adjusted p-values (e.g., p < 0.05). Follow with z-score normalization [19].
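The Benjamini-Hochberg adjustment and z-score normalization used in dataset construction can be written out explicitly. The sketch below assumes the per-gene ANOVA p-values have already been computed elsewhere; the toy p-values, the function names, and the use of the population standard deviation for the z-score are assumptions for this example.

```python
import statistics


def benjamini_hochberg(p_values):
    """Return BH-adjusted p-values in the original order."""
    n = len(p_values)
    order = sorted(range(n), key=lambda i: p_values[i])
    adjusted = [0.0] * n
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(n, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * n / rank)
        adjusted[idx] = running_min
    return adjusted


def z_score(values):
    """Center to mean 0 and scale to unit (population) standard deviation."""
    mean = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return [(v - mean) / sd if sd > 0 else 0.0 for v in values]


# Toy ANOVA p-values for four genes (illustrative only).
p_values = {"G1": 0.01, "G2": 0.04, "G3": 0.03, "G4": 0.005}
adjusted = dict(zip(p_values, benjamini_hochberg(list(p_values.values()))))
selected = sorted(g for g, q in adjusted.items() if q < 0.05)
scaled = z_score([1.0, 2.0, 3.0])
```

Features are then ranked by adjusted p-value, and the retained expression columns are z-scored before model training.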

Protocol 2: Accessing ICGC ARGO Data for Clinically-Annotated Analysis

Application Note: This protocol outlines the process for accessing the rich clinical and genomic data available through the ICGC ARGO platform, which is essential for studies linking molecular profiles to patient outcomes [16].

Registration and Data Access Application:

- Navigate to the ICGC ARGO Data Platform website.

- Register for an account and complete the Data Access Compliance process as required. This often involves submitting a research proposal for approval by the Data Access Committee.

- Once approved, log in to the Data Platform to browse and query available data.

Data Browsing and Filtering:

- Use the platform's interactive interface to browse clinical data and molecular data analyzed against the GRCh38 reference genome.

- Filter donors/datasets by `tumour type`, `country of origin`, `donor age`, `clinical stage`, `treatment history`, and `vital status` to build a cohort matching your research question.

- Review the available omics data types (e.g., WGS, RNA-Seq) for the selected cohort.

Data Download and Integration:

- Select the desired donor samples and associated molecular data files.

- Download the data using the provided tools or links. Note that data may be available in different formats (e.g., VCF, BAM, FASTQ).

- Integrate clinical metadata (e.g., survival, treatment response) with the molecular data using the provided donor and sample IDs for downstream analysis.
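The final integration step amounts to a keyed join between clinical and molecular records. The sketch below is a minimal illustration using plain dictionaries; the column names (`donor_id`, `vital_status`, `sample_id`, `data_type`) are hypothetical placeholders and do not reflect the actual ARGO schema.

```python
def join_clinical_molecular(clinical_rows, molecular_rows, key="donor_id"):
    """Attach clinical annotations to each molecular record by donor ID.

    Returns the merged records and the donor IDs with no clinical match.
    """
    clinical_by_donor = {row[key]: row for row in clinical_rows}
    merged, unmatched = [], []
    for row in molecular_rows:
        clinical = clinical_by_donor.get(row[key])
        if clinical is None:
            unmatched.append(row[key])  # keep track instead of silently dropping
        else:
            merged.append({**clinical, **row})
    return merged, unmatched


# Hypothetical records for illustration only.
clinical = [
    {"donor_id": "DO1", "vital_status": "Alive"},
    {"donor_id": "DO2", "vital_status": "Deceased"},
]
molecular = [
    {"donor_id": "DO1", "sample_id": "SA1", "data_type": "RNA-Seq"},
    {"donor_id": "DO3", "sample_id": "SA9", "data_type": "WGS"},
]
merged, unmatched = join_clinical_molecular(clinical, molecular)
```

Reporting unmatched donors explicitly helps catch ID mismatches before they bias downstream survival or response analyses.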

Protocol 3: A Generalized Framework for Multi-Omics Study Design (MOSD)

Application Note: Based on a comprehensive review of multi-omics integration challenges, this protocol provides evidence-based guidelines for designing a robust multi-omics study, ensuring reliable and reproducible results [17].

Define Computational Factors:

- Sample Size: Ensure a minimum of 26 samples per class/group to achieve robust clustering performance in subtype discrimination [17].

- Feature Selection: Apply feature selection to reduce dimensionality. Selecting fewer than 10% of omics features has been shown to improve clustering performance by up to 34% by filtering out non-informative variables [17].

- Class Balance: Maintain a sample balance between classes under a 3:1 ratio (e.g., Class A vs. Class B) to prevent model bias towards the majority class.

- Noise Characterization: Assess and control data quality, as clustering performance can significantly degrade when the noise level exceeds 30% [17].
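The computational guidelines above can be turned into a quick pre-flight check on a planned dataset. This is a sketch only: the thresholds are the ones quoted in this protocol, while the function name, its inputs, and the assumption that a noise fraction has already been estimated are illustrative.

```python
from collections import Counter


def check_study_design(labels, n_features_total, n_features_selected, noise_fraction):
    """Compare a study design against the guideline thresholds quoted above."""
    counts = Counter(labels)
    smallest = min(counts.values())
    largest = max(counts.values())
    return {
        # At least 26 samples in every class.
        "min_samples_per_class": smallest >= 26,
        # Largest-to-smallest class ratio kept under 3:1.
        "class_balance_under_3_to_1": largest / smallest < 3,
        # Fewer than 10% of features retained after selection.
        "feature_fraction_under_10pct": n_features_selected / n_features_total < 0.10,
        # Estimated noise level not above 30%.
        "noise_at_or_below_30pct": noise_fraction <= 0.30,
    }


# Hypothetical cohort: 60 samples of subtype A and 30 of subtype B.
labels = ["A"] * 60 + ["B"] * 30
report = check_study_design(labels, n_features_total=20000,
                            n_features_selected=1500, noise_fraction=0.35)
```

A failed check is a prompt to revisit the design (for example, by subsampling the majority class or tightening quality control), not a hard rule.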

Define Biological Factors:

- Omics Combination: Strategically select omics layers. A review of 11 combinations from four omics types (GE, MI, ME, CNV) suggests that optimal configurations are task-dependent and should be informed by the biological question [17] [14].

- Clinical Feature Correlation: Plan to correlate molecular findings with available clinical annotations (e.g., molecular subtypes, pathological stage, survival data) to validate the biological and clinical relevance of the analysis [17].

Visualization of Multi-Omics Data Integration Workflow

The following diagram illustrates a standardized workflow for accessing, processing, and integrating multi-omics data from major repositories, culminating in downstream systems biology applications.

The Scientist's Toolkit: Essential Research Reagents and Computational Tools

Success in multi-omics research relies on a suite of computational tools and resources for data retrieval, processing, and analysis. The following table details key solutions mentioned in the current literature.

Table 3: Essential Computational Tools for Multi-Omics Research

| Tool/Resource | Type | Primary Function | Application Note |

|---|---|---|---|

| Gencube | Command-line tool | Centralized retrieval and integration of multi-omics resources (genome assemblies, gene sets, annotations, sequences, NGS data) from leading databases [20]. | Streamlines data acquisition from disparate sources, saving significant time in the data gathering phase of a project. It is free and open-source. |

| MLOmics | Processed Database & Pipeline | Provides off-the-shelf, ML-ready multi-omics datasets from TCGA, including pan-cancer and gold-standard subtype classification datasets [19]. | Ideal for machine learning practitioners, as it bypasses laborious TCGA preprocessing. Includes precomputed baselines (XGBoost, SVM, CustOmics) for fair model comparison. |

| edgeR | R Package | Conversion of RNA-Seq counts (e.g., RSEM) to normalized expression values (FPKM) and differential expression analysis [19]. | A cornerstone for transcriptomics preprocessing, particularly for TCGA data. Critical for preparing gene expression matrices for downstream integration. |

| limma | R Package | Normalization and analysis of microarray and RNA-Seq data, including methylation array normalization [19]. | Provides robust methods for normalizing data like DNA methylation beta-values, correcting for technical variation across samples. |

| biomaRt | R Package | Annotation of genomic regions (e.g., CNV segments, gene promoters) with unified gene IDs and biological metadata [19]. | Resolves variations in gene naming conventions, ensuring features can be aligned across different omics layers. |

| GAIA | Computational Tool | Identification of recurrent genomic alterations (e.g., CNVs) in cancer genomes from segmentation data [19]. | Used to pinpoint genomic regions that are significantly aberrant across a cohort, highlighting potential driver events. |

| ICGC ARGO Platform | Data Platform | Web-based platform for browsing, accessing, and analyzing clinically annotated genomic data from the ICGC ARGO project [16]. | The primary portal for accessing the next generation of ICGC data, which emphasizes clinical outcome correlation. Requires a data access application. |

Methodologies in Action: Computational Strategies for Integrating Multi-Omics Data

In systems biology, a holistic understanding of complex phenotypes requires the integrated investigation of the contributions and associations between multiple molecular layers, such as the genome, transcriptome, proteome, and metabolome [21]. Network-based integration methods provide a powerful framework for multi-omics data by representing complex molecular interactions as graphs, where nodes represent biological entities and edges represent their interactions [21] [22]. These approaches allow researchers to move beyond single-omics investigations and elucidate the functional connections and modules that carry out biological processes [21]. Among the various computational strategies, three core classes of methods have emerged as particularly impactful: network propagation models, similarity-based approaches, and network inference models. These methodologies have revolutionized multi-omics analysis by enabling the identification of biomarkers, disease subtypes, molecular drivers of disease, and novel therapeutic targets [21] [22]. This application note provides detailed protocols and frameworks for implementing these network-based integration methods within multi-omics research, with a specific focus on applications in drug discovery and clinical outcome prediction.

Core Methodologies and Theoretical Frameworks

Network Propagation Models

Network propagation, also known as network smoothing, is a class of algorithms that integrate information from input data across connected nodes in a given molecular network [23]. These methods are founded on the hypothesis that node proximity within a network is a measure of their relatedness and contribution to biological processes [21]. Propagation algorithms amplify feature associations by allowing node scores to spread along network edges, thereby emphasizing network regions enriched for perturbed molecules [23].

Table 1: Key Network Propagation Algorithms and Parameters

| Algorithm | Mathematical Formulation | Key Parameters | Convergence Behavior |

|---|---|---|---|

| Random Walk with Restart (RWR) | \( F_i = (1-\alpha)F_0 + \alpha W F_{i-1} \) | Restart probability \((1-\alpha)\), Convergence threshold | Small α: stays close to initial scores; Large α: stronger neighbor influence [23] |

| Heat Diffusion (HD) | \( F_t = \exp(-Wt)F_0 \) | Diffusion time \(t\) | t=0: no propagation; t→∞: dominated by network topology [23] |

| Network Normalization | Laplacian: \( W_L = D - A \); Normalized Laplacian; Degree-normalized adjacency matrix | Normalization method choice | Critical to avoid "topology bias" where results are unduly influenced by network structure [23] |

The propagation process requires omics data mapped onto predefined molecular networks, which can be obtained from public databases such as STRING or BioGRID [23] [24]. The initial node scores (\(F_0\)) typically represent molecular measurements such as fold changes of transcripts or protein abundance [23]. The normalized network matrix (\(W\)) determines how information flows through the network during propagation.
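To make the Random Walk with Restart update concrete, the sketch below iterates the update rule from the table, F_i = (1-α)F_0 + αWF_{i-1}, on a four-node path graph. Column normalization of the adjacency matrix is used here as one possible choice of W; that choice, the toy graph, the seed vector, and the function names are assumptions for illustration.

```python
def column_normalize(adjacency):
    """Divide each column by its sum so that propagated mass is conserved."""
    n = len(adjacency)
    col_sums = [sum(adjacency[r][c] for r in range(n)) for c in range(n)]
    return [
        [adjacency[r][c] / col_sums[c] if col_sums[c] else 0.0 for c in range(n)]
        for r in range(n)
    ]


def random_walk_with_restart(W, f0, alpha=0.5, tol=1e-8, max_iter=1000):
    """Iterate F_i = (1 - alpha) * F_0 + alpha * W @ F_{i-1} until convergence."""
    n = len(f0)
    f = list(f0)
    for _ in range(max_iter):
        spread = [sum(W[r][c] * f[c] for c in range(n)) for r in range(n)]
        f_next = [(1 - alpha) * f0[r] + alpha * spread[r] for r in range(n)]
        if max(abs(a - b) for a, b in zip(f_next, f)) < tol:
            return f_next
        f = f_next
    return f


# Toy path graph 0 - 1 - 2 - 3 with a single seed on node 0.
A = [
    [0, 1, 0, 0],
    [1, 0, 1, 0],
    [0, 1, 0, 1],
    [0, 0, 1, 0],
]
W = column_normalize(A)
scores = random_walk_with_restart(W, [1.0, 0.0, 0.0, 0.0], alpha=0.5)
```

On this graph the scores decay with distance from the seed, and a smaller α keeps them closer to the initial vector, matching the convergence behavior described in the table.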

Similarity-Based Integration Approaches

Similarity-based methods quantify relationships between biological entities by measuring the similarity of their interaction profiles or omics measurements. These approaches operationalize the "guilt-by-association" principle, which posits that genes with similar interaction profiles likely share similar functions [25].

Table 2: Association Indices for Measuring Interaction Profile Similarity

| Index | Formula | Range | Key Considerations |

|---|---|---|---|

| Jaccard | \( J_{AB} = \frac{\lvert N(A) \cap N(B) \rvert}{\lvert N(A) \cup N(B) \rvert} \) | 0-1 | Cannot discriminate between different edge distributions with same union size [25] |

| Simpson | \( S_{AB} = \frac{\lvert N(A) \cap N(B) \rvert}{\min(\lvert N(A) \rvert, \lvert N(B) \rvert)} \) | 0-1 | Sensitive to the least connected node; may overestimate similarity [25] |

| Cosine | \( C_{AB} = \frac{\lvert N(A) \cap N(B) \rvert}{\sqrt{\lvert N(A) \rvert \cdot \lvert N(B) \rvert}} \) | 0-1 | Geometric mean of proportions; widely used in high-dimensional spaces [25] |

| Pearson Correlation | \( PCC_{AB} = \frac{\lvert N(A) \cap N(B) \rvert \cdot n_y - \lvert N(A) \rvert \cdot \lvert N(B) \rvert}{\sqrt{\lvert N(A) \rvert \cdot \lvert N(B) \rvert \cdot (n_y - \lvert N(A) \rvert) \cdot (n_y - \lvert N(B) \rvert)}} \) | -1 to 1 | Accounts for network size; 0 indicates expected overlap by chance [25] |

| Connection Specificity Index (CSI) | \( CSI_{AB} = 1 - \frac{\#\,\text{nodes with PCC} \geq PCC_{AB} - 0.05}{n_y} \) | Context-dependent | Mitigates hub effects by ranking similarity significance [25] |
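The set-based indices in the table can be computed directly from two nodes' neighbor sets. The sketch below covers the Jaccard, Simpson, cosine, and Pearson indices; it assumes both neighbor sets are non-empty and smaller than the total node count n_y, and the function name and toy neighbor sets are illustrative.

```python
import math


def association_indices(neighbors_a, neighbors_b, n_y):
    """Compute interaction-profile similarity between nodes A and B.

    neighbors_a, neighbors_b: iterables of neighbor identifiers.
    n_y: total number of nodes that could appear as neighbors.
    """
    a, b = set(neighbors_a), set(neighbors_b)
    shared = len(a & b)
    size_a, size_b = len(a), len(b)
    jaccard = shared / len(a | b)
    simpson = shared / min(size_a, size_b)
    cosine = shared / math.sqrt(size_a * size_b)
    # Pearson form: observed overlap relative to the overlap expected by chance.
    pcc = (shared * n_y - size_a * size_b) / math.sqrt(
        size_a * size_b * (n_y - size_a) * (n_y - size_b)
    )
    return {"jaccard": jaccard, "simpson": simpson, "cosine": cosine, "pcc": pcc}


# Toy example: A has 3 neighbors, B has 4, two are shared, 10 nodes overall.
indices = association_indices({1, 2, 3}, {2, 3, 4, 5}, n_y=10)
```

In this toy case the Simpson index (2/3) is noticeably higher than the Jaccard index (0.4), which illustrates why the table flags Simpson as prone to overestimating similarity when one node has few neighbors.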

In Patient Similarity Networks (PSN), these indices are adapted to compute distances among patients from omics features, creating graphs where patients are nodes and similarities between their omics profiles are edges [26]. For two patients \(u\) and \(v\) with omics measurements \(\phi^m_u\) and \(\phi^m_v\) for omics type \(m\), the similarity is computed as \(a^m_{u,v} = \text{sim}(\phi^m_u, \phi^m_v)\), where \(\text{sim}\) is a similarity measure such as Pearson's correlation coefficient [26].

Network Inference Models

Network inference methods aim to reconstruct molecular networks from omics data, identifying potential regulatory relationships and interactions that may not be present in existing knowledge bases. These methods can be broadly categorized into data-driven and knowledge-driven approaches [24].

Data-driven network reconstruction employs statistical and computational approaches to infer relationships directly from omics data. For gene expression data, methods like ARACNe (Algorithm for the Reconstruction of Accurate Cellular Networks) analyze co-expression patterns to identify most likely transcription factor-target gene interactions by estimating mutual information between pairs of transcript expression profiles [24]. Knowledge-driven approaches incorporate experimentally determined interactions from specialized databases such as BioGRID for protein-protein interactions or KEGG for metabolic pathways [24]. Hybrid approaches combine both strategies to build more comprehensive networks [24].

Diagram 1: Network-based multi-omics integration workflow illustrating the interplay between data sources, methodologies, and outputs.

Application Protocols

Protocol 1: Multi-Omics Clinical Outcome Prediction Using Patient Similarity Networks

This protocol details the implementation of network-based integration for predicting clinical outcomes in neuroblastoma, adaptable to other disease contexts [26].

Materials and Reagents:

- Multi-omics datasets (e.g., transcriptomics, epigenomics, proteomics)

- Computational environment (R or Python)

- Normalized count tables for each omics type

Procedure:

Data Preprocessing

- For each omics dataset, filter low-count features and normalize using platform-specific methods

- Retain molecules with highest expression fold change across the time course to focus on most variable features [24]

Patient Similarity Network Construction

- For each omics type \(m\), compute patient similarity matrix using Pearson's correlation coefficient: \[ a^m_{u,v} = \frac{N \sum_i \phi^m_{u,i} \phi^m_{v,i} - \sum_i \phi^m_{u,i} \sum_i \phi^m_{v,i}}{\sqrt{\left(N \sum_i (\phi^m_{u,i})^2 - \left(\sum_i \phi^m_{u,i}\right)^2\right)\left(N \sum_i (\phi^m_{v,i})^2 - \left(\sum_i \phi^m_{v,i}\right)^2\right)}} \] where \(N\) is the total feature number and \(i\) refers to the \(i^{th}\) feature [26]

- Normalize and rescale correlation values using WGCNA algorithm to enforce scale-freeness of PSN [26]
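The Pearson-based similarity step can be sketched for a single omics layer as follows. The code follows the sum-based form of the coefficient given in the step above; the WGCNA rescaling is not reproduced here, and the patient identifiers, toy profiles, and function names are assumptions for illustration.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equal-length feature vectors."""
    n = len(x)
    sum_x, sum_y = sum(x), sum(y)
    sum_xy = sum(a * b for a, b in zip(x, y))
    sum_x2 = sum(a * a for a in x)
    sum_y2 = sum(b * b for b in y)
    numerator = n * sum_xy - sum_x * sum_y
    denominator = math.sqrt((n * sum_x2 - sum_x ** 2) * (n * sum_y2 - sum_y ** 2))
    return numerator / denominator


def patient_similarity_matrix(profiles):
    """Return sorted patient IDs and the matrix of pairwise similarities."""
    patients = sorted(profiles)
    matrix = [[pearson(profiles[u], profiles[v]) for v in patients] for u in patients]
    return patients, matrix


# Toy omics profiles for three patients (illustrative only).
profiles = {
    "P1": [1.0, 2.0, 3.0, 4.0],
    "P2": [2.0, 4.0, 6.0, 8.0],
    "P3": [4.0, 3.0, 2.0, 1.0],
}
patients, similarity = patient_similarity_matrix(profiles)
```

One such matrix is built per omics type; the function assumes every profile has non-zero variance, since a constant profile would make the denominator zero.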

Network Feature Extraction

- Compute 12 centrality features for each node: weighted degree, closeness centrality, current-flow closeness centrality, current-flow betweenness centrality, eigenvector centrality, Katz centrality, HITS centrality (authority and hub values), PageRank centrality, load centrality, local clustering coefficient, iterative weighted degree, and iterative local clustering coefficient [26]

- Extract modularity features using spectral clustering and Stochastic Block Model clustering, determining optimal module number by silhouette score [26]

- Represent modular memberships as one-hot vectors and sum across modules to create modular feature vectors

- Concatenate centrality and modular features to form comprehensive network features

Data Integration and Model Training

- Implement two fusion strategies:

- Train Deep Neural Network or Machine Learning classifiers with Recursive Feature Elimination using extracted network features

- Compare performance between fusion strategies; network-level fusion generally outperforms for integrating different omics types [26]

Protocol 2: Target Identification and Prioritization Using Network Propagation

This protocol applies network propagation to identify and prioritize disease-associated genes for therapeutic targeting [23] [22].

Materials and Reagents:

- Omics data with phenotypic associations (e.g., differentially expressed genes in disease)

- Molecular interaction network (e.g., protein-protein interaction network from STRING or BioGRID)

- Computational tools for network propagation (R/Bioconductor packages or custom scripts)

Procedure:

Data Preparation

- Format initial node scores (\(F_0\)) as a vector where each element represents the association of a gene with the phenotype of interest (e.g., fold-change, p-value)

- Obtain relevant molecular network and normalize using appropriate method (Laplacian, normalized Laplacian, or degree-normalized adjacency matrix) [23]

Parameter Optimization

- Optimize propagation parameters using one of two strategies:

- Replicate consistency: Maximize consistency between biological replicates within a dataset

- Cross-omics agreement: Maximize consistency between different omics types (e.g., transcriptomics and proteomics) [23]

- For RWR, test α values between 0.1-0.9; for Heat Diffusion, test t values that maintain biological signal


Network Propagation

Target Identification and Validation

- Rank genes by propagated scores, prioritizing those with highest scores

- Identify network modules enriched for high-scoring genes using clustering algorithms

- Validate candidate targets through:

Diagram 2: Clinical outcome prediction workflow using network-based multi-omics integration.

Table 3: Key Research Resources for Network-Based Multi-Omics Integration

| Resource Category | Specific Tools/Databases | Function and Application |

|---|---|---|

| Molecular Interaction Databases | STRING, BioGRID, KEGG Pathway | Provide curated molecular interactions for network construction; BioGRID records protein-protein and genetic interactions; KEGG provides metabolic pathways [23] [24] |

| Network Analysis Tools | ARACNe, netOmics R package, WGCNA | ARACNe infers gene regulatory networks; netOmics facilitates multi-omics network exploration; WGCNA enables weighted correlation network analysis [24] [26] |

| Propagation Algorithms | Random Walk with Restart (RWR), Heat Diffusion | Implement network propagation/smoothing to amplify disease-associated regions in molecular networks [21] [23] |

| Similarity Metrics | Jaccard, Simpson, Cosine, Pearson Correlation | Quantify interaction profile similarity between nodes for guilt-by-association analysis [25] |

| Multi-Omics Integration Frameworks | Similarity Network Fusion (SNF), DIABLO, MOFA | Fuse multiple omics datasets; SNF integrates patient similarity networks; DIABLO and MOFA perform multivariate integration [26] |

Network-based integration methods represent powerful approaches for extracting meaningful biological insights from multi-omics data. Propagation models effectively amplify signals in molecular networks, similarity-based approaches operationalize guilt-by-association principles, and inference models reconstruct molecular relationships from complex data. The protocols presented here provide practical frameworks for implementing these methods in disease mechanism elucidation, clinical outcome prediction, and therapeutic target identification. As multi-omics technologies continue to advance, these network-based strategies will play an increasingly critical role in systems biology and precision medicine, particularly for addressing complex diseases where multiple molecular layers contribute to pathogenesis. Future methodological developments will need to focus on incorporating temporal and spatial dynamics, improving computational scalability for large datasets, and enhancing the biological interpretability of complex network models [22].

The integration of multi-omics data represents a powerful strategy in systems biology to unravel the complex molecular underpinnings of cancer and other diseases. Graph Neural Networks (GNNs) have emerged as a particularly effective computational framework for this task due to their innate ability to model the complex, structured relationships inherent in biological systems [28]. Unlike traditional deep learning models, GNNs operate directly on graph-structured data, making them exceptionally suited for representing and analyzing biological networks where entities like genes, proteins, and metabolites are interconnected [29] [28].

Multi-omics encompasses the holistic profiling of various molecular layers—including genomics, transcriptomics, proteomics, and metabolomics—to gain a comprehensive understanding of biological processes and disease mechanisms [2] [28]. However, integrating these heterogeneous and high-dimensional datasets poses significant challenges. GNNs address these challenges by providing a flexible architecture that can capture both the features of individual molecular entities (nodes) and the complex interactions between them (edges) [29]. This capability is crucial for identifying novel biomarkers, understanding disease progression, and advancing precision medicine, ultimately fulfilling the promise of systems biology by integrating multiple types of quantitative molecular measurements [2] [30].

Comparative Analysis of GNN Architectures

Among GNN architectures, Graph Convolutional Networks (GCNs), Graph Attention Networks (GATs), and Graph Transformer Networks (GTNs) are at the forefront of multi-omics integration research. The table below summarizes their core characteristics, mechanisms, and applications in multi-omics analysis.

Table 1: Comparison of Key GNN Architectures for Multi-Omics Integration

| Architecture | Core Mechanism | Key Advantage | Typical Multi-Omics Application |
|---|---|---|---|
| Graph Convolutional Network (GCN) | Applies convolutional operations to graph data, aggregating features from a node's immediate neighbors [29]. | Creates a localized graph representation around a node, effective for capturing local topological structures [29]. | Node classification in biological networks (e.g., PPI networks) [29]. |
| Graph Attention Network (GAT) | Incorporates an attention mechanism that assigns different weights to neighboring nodes during feature aggregation [29]. | Allows the model to focus on the most important parts of the graph, handling heterogeneous connections effectively [29]. | Integrating mRNA, miRNA, and DNA methylation data for superior cancer classification [29] [30]. |
| Graph Transformer Network (GTN) | Introduces transformer-based self-attention architectures into graph learning [29]. | Excels at capturing long-range, global dependencies within the graph structure [29]. | Graph-level prediction tasks requiring an understanding of global graph features [29]. |
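
To make the neighbor-aggregation idea in the GCN row concrete, the following minimal sketch implements a single graph-convolution step in NumPy. It assumes the widely used symmetric-normalization form, ReLU(D^-1/2 (A + I) D^-1/2 H W), which the source does not spell out; the toy network, feature sizes, and the function name `gcn_layer` are hypothetical.

```python
import numpy as np

def gcn_layer(adj, features, weight):
    """One graph-convolution step: ReLU(D^-1/2 (A + I) D^-1/2 H W)."""
    a_hat = adj + np.eye(adj.shape[0])             # self-loops keep each node's own features
    d_inv_sqrt = 1.0 / np.sqrt(a_hat.sum(axis=1))  # inverse square root of node degrees
    a_norm = a_hat * d_inv_sqrt[:, None] * d_inv_sqrt[None, :]  # symmetric normalization
    return np.maximum(a_norm @ features @ weight, 0.0)          # aggregate, project, ReLU

# Hypothetical toy network: nodes 0-1-2 form a path, node 3 has no interactions.
adj = np.array([[0, 1, 0, 0],
                [1, 0, 1, 0],
                [0, 1, 0, 0],
                [0, 0, 0, 0]], dtype=float)
rng = np.random.default_rng(0)
features = rng.random((4, 5))        # 5 made-up molecular features per node
weight = rng.normal(size=(5, 2))     # untrained projection to 2 hidden units
hidden = gcn_layer(adj, features, weight)
```

Because node 3 has no neighbors, its hidden representation depends only on its own features, whereas nodes 0 to 2 mix in information from adjacent nodes. A GAT replaces the fixed normalization weights with learned attention coefficients.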

Empirical evaluations show how these architectures perform on real-world multi-omics tasks. The table below reports the accuracy of LASSO-integrated GNN models for classifying 31 cancer types and normal tissue using different combinations of omics data [29].

Table 2: Performance Comparison of LASSO-GNN Models on Multi-Omics Cancer Classification (Accuracy %) [29]

| Model | DNA Methylation Only | mRNA + DNA Methylation | mRNA + miRNA + DNA Methylation |
|---|---|---|---|
| LASSO-MOGCN | 93.72% | 94.55% | 95.11% |
| LASSO-MOGAT | 94.88% | 95.67% | 95.90% |
| LASSO-MOGTN | 94.01% | 94.98% | 95.45% |

These results highlight two trends. First, for every architecture, accuracy rises as omics layers are added, from DNA methylation alone to the full mRNA + miRNA + DNA methylation combination, underscoring the value of integrative analysis [29]. Second, the GAT-based model achieves the highest accuracy in all three data configurations, likely because its attention mechanism can weight information from diverse molecular data sources [29] [30].
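
The size of these effects can be read directly from Table 2. The short script below simply re-derives the gains from the reported accuracies; the variable names are illustrative and no new data are introduced.

```python
# Accuracies (%) copied from Table 2, ordered as:
# (DNA methylation only, mRNA + DNA methylation, mRNA + miRNA + DNA methylation)
accuracy = {
    "LASSO-MOGCN": (93.72, 94.55, 95.11),
    "LASSO-MOGAT": (94.88, 95.67, 95.90),
    "LASSO-MOGTN": (94.01, 94.98, 95.45),
}

# Gain in percentage points from single-omics to triple-omics input.
gains = {model: round(acc[2] - acc[0], 2) for model, acc in accuracy.items()}

# Best-performing model in each data configuration.
best_per_column = [max(accuracy, key=lambda m: accuracy[m][i]) for i in range(3)]

for model, gain in gains.items():
    print(f"{model}: +{gain:.2f} percentage points")
print("Best model per configuration:", best_per_column)
```

The gains are 1.39, 1.02, and 1.44 percentage points for the GCN, GAT, and GTN variants respectively, so the attention-based model starts from the highest single-omics baseline and benefits least in absolute terms from additional layers.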

Experimental Protocols for Multi-Omics GNN Analysis

Protocol 1: Multi-Omics Cancer Classification Using GAT

This protocol details the methodology for building a multi-omics cancer classifier, as validated on a dataset of 8,464 samples from 31 cancer types [29].

Step 1: Data Acquisition and Preprocessing

- Data Collection: Acquire multi-omics datasets from public repositories like The Cancer Genome Atlas (TCGA). Essential datatypes include messenger RNA (mRNA) expression, micro-RNA (miRNA) expression, and DNA methylation data [29] [30].

- Data Preprocessing: Perform rigorous data cleaning, including noise reduction, normalization, and standardization of feature values (e.g., scaling to a [0,1] interval). Filter out low-quality data exhibiting incomplete or zero expression [30].

- Feature Selection: Apply LASSO (Least Absolute Shrinkage and Selection Operator) regression for dimensionality reduction and to identify the most relevant genomic features for the classification task [29].
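
The preprocessing and feature-selection steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the pipeline used in [29]: the data are synthetic, a continuous toy target stands in for the cancer-type label, the penalty `alpha=0.05` is arbitrary, and LASSO is solved with a small hand-written coordinate-descent routine rather than a library call.

```python
import numpy as np

def drop_zero_features(x):
    """Remove features (columns) that are zero in every sample."""
    keep = np.flatnonzero((x != 0).any(axis=0))
    return x[:, keep], keep

def min_max_scale(x):
    """Scale each feature to the [0, 1] interval."""
    lo, hi = x.min(axis=0), x.max(axis=0)
    span = np.where(hi > lo, hi - lo, 1.0)   # guard against constant columns
    return (x - lo) / span

def lasso_coordinate_descent(x, y, alpha, n_iter=200):
    """Minimize (1/2n)||y - Xw||^2 + alpha * ||w||_1 on centered data."""
    n, p = x.shape
    x = x - x.mean(axis=0)
    y = y - y.mean()
    w = np.zeros(p)
    z = (x ** 2).sum(axis=0) / n
    for _ in range(n_iter):
        for j in range(p):
            if z[j] == 0:
                continue
            partial_residual = y - x @ w + x[:, j] * w[j]
            rho = x[:, j] @ partial_residual / n
            w[j] = np.sign(rho) * max(abs(rho) - alpha, 0.0) / z[j]  # soft-threshold
    return w

# Synthetic example: 200 samples, 8 features, only features 0 and 3 are informative.
rng = np.random.default_rng(0)
raw = rng.random((200, 8)) * 50.0
raw[:, 7] = 0.0                                   # a never-expressed feature
target = 3.0 * raw[:, 0] / 50.0 - 2.0 * raw[:, 3] / 50.0 + 0.01 * rng.normal(size=200)

filtered, kept = drop_zero_features(raw)
scaled = min_max_scale(filtered)
coef = lasso_coordinate_descent(scaled, target, alpha=0.05)
selected = kept[np.abs(coef) > 1e-8]              # original indices of retained features
print("Selected feature indices:", selected.tolist())
```

The L1 penalty drives the coefficients of uninformative features to exactly zero, which is what makes LASSO usable as a feature selector ahead of graph construction.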

Step 2: Graph Structure Construction

- Construct the graph structure where nodes represent biological entities (e.g., patients, genes). Two primary methods are:

Step 3: Model Implementation and Training

- Implement the GAT model architecture. The key component is the graph attention layer, which computes hidden representations for each node by attending over its neighbors, using a self-attention mechanism [29].

- Configure the model with appropriate hyperparameters. The final layer should be a softmax classifier for the multi-class cancer type prediction.

- Train the model using a standard deep learning workflow, partitioning data into training, validation, and test sets to evaluate performance metrics such as accuracy, weighted F1-score, and macro F1-score [29] [30].
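
The graph attention layer and softmax classifier described in Step 3 can be illustrated with a single-head forward pass in NumPy. The sketch assumes the commonly used attention form e_ij = LeakyReLU(a^T [W h_i || W h_j]) normalized over each node's neighborhood; the patient graph, dimensions, and random (untrained) weights are hypothetical, and a real implementation would use a deep learning framework with multiple heads and gradient-based training.

```python
import numpy as np

def leaky_relu(x, slope=0.2):
    return np.where(x > 0, x, slope * x)

def softmax(x):
    x = x - x.max(axis=1, keepdims=True)
    ex = np.exp(x)
    return ex / ex.sum(axis=1, keepdims=True)

def gat_layer(adj, h, w, a):
    """Single-head graph attention: each node attends over itself and its neighbors."""
    n = adj.shape[0]
    z = h @ w                                   # project node features
    f_out = z.shape[1]
    # a^T [z_i || z_j] splits into a source term and a target term.
    scores = leaky_relu((z @ a[:f_out])[:, None] + (z @ a[f_out:])[None, :])
    mask = (adj + np.eye(n)) > 0                # restrict attention to neighbors + self
    scores = np.where(mask, scores, -np.inf)
    scores = scores - scores.max(axis=1, keepdims=True)
    att = np.exp(scores)
    att = att / att.sum(axis=1, keepdims=True)  # attention weights sum to 1 per node
    return att @ z, att

# Hypothetical patient-similarity graph: 5 patients connected in a ring.
rng = np.random.default_rng(0)
n_patients, n_features, n_hidden, n_classes = 5, 6, 4, 3
adj = np.zeros((n_patients, n_patients))
for i in range(n_patients):
    j = (i + 1) % n_patients
    adj[i, j] = adj[j, i] = 1.0

h = rng.random((n_patients, n_features))        # LASSO-selected omics features
w = rng.normal(size=(n_features, n_hidden))
a = rng.normal(size=2 * n_hidden)
w_out = rng.normal(size=(n_hidden, n_classes))

hidden, attention = gat_layer(adj, h, w, a)
probs = softmax(hidden @ w_out)                 # class probabilities per patient
```

The `attention` matrix is the quantity that makes GAT models partly interpretable: each row shows how strongly a node weights each of its neighbors when forming its hidden representation.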

Figure 1: GAT Multi-Omics Cancer Classification Workflow

Protocol 2: The MOLUNGN Framework for Biomarker Discovery

This protocol outlines the MOLUNGN framework, designed for precise lung cancer staging and biomarker discovery [30].

Step 1: Construction of Multi-Omics Feature Matrices

- For a specific cancer type (e.g., Non-Small Cell Lung Cancer (NSCLC)), extract and preprocess mRNA expression, miRNA mutation profiles, and DNA methylation data from clinical datasets like TCGA [30].

- Create separate, refined feature matrices for each omics type. For example, refine an initial set of over 60,000 gene features down to approximately 14,500 high-quality genes through filtering and normalization [30].

Step 2: Implementation of Omics-Specific GAT (OSGAT)

- Employ separate, dedicated GAT modules for each omics data type (e.g., one for mRNA, one for miRNA). These Omics-Specific GAT (OSGAT) modules are responsible for learning complex intra-omics correlations and feature interactions within each molecular layer [30].

Step 3: Multi-Omics Integration and Correlation Discovery

- Integrate the learned representations from all OSGAT modules using a Multi-Omics View Correlation Discovery Network (MOVCDN). This higher-level network operates in a shared label space to effectively capture and model the inter-omics correlations between different molecular data views [30].

- Use the integrated model for downstream tasks: classification of clinical cases into precise cancer stages (e.g., TNM staging) and extraction of stage-specific biomarkers through analysis of the model's attention weights and feature importances [30].
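
The integration step can be sketched as follows. The internal design of MOVCDN is not detailed here, so this example assumes one common way of combining views in a shared label space: taking the outer product of the per-omics class-probability vectors to form a cross-omics tensor, then classifying the flattened tensor. Random probabilities stand in for the OSGAT outputs, and all names, sizes, and weights are hypothetical.

```python
import numpy as np

def softmax(x):
    x = x - x.max(axis=1, keepdims=True)
    ex = np.exp(x)
    return ex / ex.sum(axis=1, keepdims=True)

def cross_omics_tensor(prob_views):
    """Per-sample outer product of class probabilities from three omics views."""
    p1, p2, p3 = prob_views
    return np.einsum("ni,nj,nk->nijk", p1, p2, p3)

def integrate_views(prob_views, w, b):
    """Flatten the cross-omics tensor and apply a linear softmax classifier."""
    tensor = cross_omics_tensor(prob_views)
    flat = tensor.reshape(tensor.shape[0], -1)
    return softmax(flat @ w + b)

rng = np.random.default_rng(0)
n_samples, n_stages = 6, 4

# Stand-ins for the class probabilities produced by the mRNA, miRNA,
# and DNA methylation OSGAT modules (assumed, not real model output).
views = [softmax(rng.normal(size=(n_samples, n_stages))) for _ in range(3)]

w = rng.normal(size=(n_stages ** 3, n_stages)) * 0.1   # untrained integration weights
b = np.zeros(n_stages)

tensor = cross_omics_tensor(views)
stage_probs = integrate_views(views, w, b)
predicted_stage = stage_probs.argmax(axis=1)
```

Each entry of the tensor scores one combination of stage predictions across the three omics views, so the final classifier can learn which agreements and disagreements between views are informative.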

Figure 2: MOLUNGN Framework for Biomarker Discovery

Successful implementation of multi-omics GNN studies requires a suite of computational tools and data resources. The table below catalogues the essential "research reagents" for this field.

Table 3: Essential Computational Tools & Resources for Multi-Omics GNN Research

| Resource Name | Type/Function | Brief Description & Application |
|---|---|---|
| TCGA (The Cancer Genome Atlas) | Data Repository | A foundational public database containing molecular profiles from thousands of patient samples across multiple cancer types, providing essential input data (mRNA, miRNA, methylation) for model training and validation [29] [30]. |
| PPI Networks | Knowledge Database | Protein-Protein Interaction networks (e.g., from STRINGdb) serve as prior biological knowledge to construct meaningful graph structures, modeling known interactions between biological entities [29]. |
| LASSO Regression | Computational Tool | A feature selection algorithm used to reduce the high dimensionality of omics data, identifying the most predictive features and improving model efficiency and performance [29]. |
| Seurat | Software Tool | A comprehensive R toolkit widely used for single-cell and multi-omics data analysis, including data preprocessing, integration, and visualization [3]. |
| MOFA+ | Software Tool | A factor analysis-based tool for the integrative analysis of multi-omics datasets, useful for uncovering latent factors that drive biological and technical variation across data modalities [3]. |
| GLUE | Software Tool | A variational autoencoder-based method designed for multi-omics integration, capable of achieving triple-omic integration by using prior biological knowledge to anchor features [3]. |

GNN architectures like GCN, GAT, and GTN provide a powerful and flexible framework for tackling the inherent challenges of multi-omics data integration in systems biology. Through their ability to model complex, structured biological relationships, they enable more accurate disease classification, biomarker discovery, and a deeper understanding of molecular mechanisms driving cancer progression. The continued development and application of these models, guided by robust experimental protocols and leveraging essential computational resources, are poised to significantly advance the goals of precision medicine and integrative biological research.

The comprehensive understanding of human health and diseases requires the interpretation of molecular intricacy and variations at multiple levels, including the genome, epigenome, transcriptome, proteome, and metabolome [31]. Multi-omics data integration has revolutionized the field of medicine and biology by creating avenues for integrated system-level approaches that can bridge the gap from genotype to phenotype [31]. The fundamental challenge in systems biology research lies in selecting an appropriate integration strategy that can effectively combine these complementary biological layers to reveal meaningful insights into complex biological systems.

Integration strategies are broadly classified into two approaches: simultaneous (vertical) integration and sequential (horizontal) integration. Simultaneous integration merges data from different omics layers measured on the same set of samples, essentially using the cell itself as an anchor to bring these omics together [3] [32]. It analyzes the data sets in parallel, treating all omics layers as equally important [31]. In contrast, sequential integration involves either merging the same omic type across multiple datasets or analyzing multiple omics types step by step, with the output of one analysis becoming the input for the next [3] [32]. The step-wise variant frequently follows known biological relationships, such as the central dogma of molecular biology, which describes the flow of information from DNA to RNA to protein [32].
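
At the level of data handling, the two merging operations described above differ in which axis is shared. The sketch below is a simplified illustration with made-up sample identifiers and values: vertical integration joins different omics blocks on their shared samples, while horizontal integration stacks samples from several cohorts that measure the same features. It does not cover the step-wise analysis variant.

```python
import numpy as np

def vertical_integrate(blocks):
    """Join omics blocks on shared sample IDs and concatenate their features."""
    shared = sorted(set.intersection(*(set(ids) for ids, _ in blocks.values())))
    parts = []
    for ids, matrix in blocks.values():
        row = {sample: i for i, sample in enumerate(ids)}
        parts.append(matrix[[row[sample] for sample in shared]])
    return shared, np.hstack(parts)

def horizontal_integrate(cohorts):
    """Stack samples from cohorts that share the same feature set."""
    ids = [sample for cohort_ids, _ in cohorts for sample in cohort_ids]
    return ids, np.vstack([matrix for _, matrix in cohorts])

# Vertical: same patients, different omics layers (rows = samples).
mrna = (["s1", "s2", "s3"], np.array([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]))
methylation = (["s3", "s1"], np.array([[0.9], [0.1]]))
shared, combined = vertical_integrate({"mrna": mrna, "methylation": methylation})

# Horizontal: same omic type, different cohorts.
cohort_a = (["a1", "a2"], np.array([[1.0, 2.0], [3.0, 4.0]]))
cohort_b = (["b1"], np.array([[5.0, 6.0]]))
ids, stacked = horizontal_integrate([cohort_a, cohort_b])
```

In the vertical case only samples profiled in every layer survive the join (here `s1` and `s3`), which is why matched sampling is a practical prerequisite for simultaneous integration.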

The selection between simultaneous and sequential integration frameworks depends heavily on the research objectives, the nature of the available data, and the specific biological questions being addressed. Simultaneous integration excels in discovering novel patterns and relationships across omics layers without prior biological assumptions, making it ideal for exploratory research and disease subtyping [31] [33]. Sequential integration leverages established biological hierarchies to build more interpretable models, making it particularly valuable for validating biological hypotheses and understanding causal relationships in drug development pipelines [32] [34].

Simultaneous Integration Frameworks

Conceptual Foundation and Applications

Simultaneous integration frameworks are designed to analyze multiple omics datasets in parallel, treating all data types as equally important contributors to the biological understanding. These methods integrate different omics layers—such as genomics, transcriptomics, proteomics, and metabolomics—without imposing predefined hierarchical relationships between them [31]. The core principle behind simultaneous integration is that complementary information from different molecular layers can reveal system-level patterns that would remain hidden when analyzing each layer independently [31] [33].

These approaches are particularly valuable for identifying coherent biological signatures across multiple molecular levels, enabling researchers to discover novel biomarkers, identify disease subtypes, and understand complex interactions between different regulatory layers [33]. For instance, in cancer research, simultaneous integration of genomic, transcriptomic, and proteomic data has revealed molecular subtypes that transcend single-omics classifications, leading to more precise diagnostic categories and personalized treatment strategies [31]. These frameworks have proven essential for studying multifactorial diseases where interactions between genetic predispositions, epigenetic modifications, and environmental influences create complex disease phenotypes that cannot be understood through single-omics approaches alone [34].

Methodological Approaches

Matrix Factorization Techniques

Matrix factorization methods represent a powerful family of algorithms for simultaneous data integration. These methods project variations among datasets onto a dimension-reduced space, identifying shared patterns across different omics types [33]. Key implementations include:

Joint Non-negative Matrix Factorization (jNMF): This method decomposes non-negative matrices from multiple omics datasets into common factors and loading matrices, enabling the detection of coherent patterns across data types by examining elements with significant z-scores [33]. jNMF requires proper normalization of input datasets as they often have different distributions and variability.

iCluster and iCluster+: These approaches assume a regularized joint latent variable model without non-negative constraints. iCluster+ expands on iCluster by accommodating diverse data types including binary, continuous, categorical, and count data with different modeling assumptions [33]. A LASSO penalty on the loading matrix induces sparsity, so that only a subset of features contributes to each latent factor.

Joint and Individual Variation Explained (JIVE): This method decomposes original data from each layer into three components: joint variation across data types, structured variation specific to each data type, and residual noise [33]. The factorization is based on PCA principles, though this makes it sensitive to outliers.
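
As an illustration of the joint NMF idea described above, the sketch below factorizes two non-negative omics matrices that share the same samples into one common factor matrix W and one loading matrix H_k per data type. It assumes standard multiplicative updates for the summed squared reconstruction error; the data are synthetic, the rank and iteration count are arbitrary, and the z-score step used to pick significant elements is omitted.

```python
import numpy as np

def joint_nmf(matrices, rank, n_iter=300, seed=0, eps=1e-9):
    """Factorize each X_k (samples x features_k) as W @ H_k with a shared W."""
    rng = np.random.default_rng(seed)
    n_samples = matrices[0].shape[0]
    w = rng.random((n_samples, rank)) + eps
    hs = [rng.random((rank, x.shape[1])) + eps for x in matrices]
    errors = []
    for _ in range(n_iter):
        # Update each omics-specific loading matrix with W held fixed.
        for k, x in enumerate(matrices):
            hs[k] *= (w.T @ x) / (w.T @ w @ hs[k] + eps)
        # Update the shared factor matrix using all omics layers at once.
        numerator = sum(x @ h.T for x, h in zip(matrices, hs))
        denominator = w @ sum(h @ h.T for h in hs) + eps
        w *= numerator / denominator
        errors.append(sum(np.linalg.norm(x - w @ h) ** 2 for x, h in zip(matrices, hs)))
    return w, hs, errors

# Synthetic example: 30 samples driven by 2 shared latent factors.
rng = np.random.default_rng(1)
true_w = rng.random((30, 2))
expression = true_w @ rng.random((2, 8))     # stand-in for a scaled expression matrix
methylation = true_w @ rng.random((2, 5))    # stand-in for a methylation matrix

w, hs, errors = joint_nmf([expression, methylation], rank=2)
print(f"Reconstruction error: {errors[0]:.4f} -> {errors[-1]:.4f}")
```

Because W is shared, samples that load on the same column of W are grouped by patterns supported in both data types, which is the sense in which the method detects coherent cross-omics signals. In practice each input matrix would first be normalized so that no single layer dominates the objective.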

Correlation-Based Methods

Canonical Correlation Analysis (CCA) and Partial Least Squares (PLS) represent another important category of simultaneous integration methods: