Hyperparameter Tuning for Deep Learning in ASD Diagnosis: Enhancing Accuracy and Clinical Translation

Abstract

This article provides a comprehensive analysis of hyperparameter optimization strategies for deep learning models in Autism Spectrum Disorder (ASD) diagnosis. Tailored for researchers and biomedical professionals, it explores foundational concepts, advanced methodological applications, and optimization techniques for models like Transformers, DNNs, and LSTMs. The content covers troubleshooting common pitfalls, comparative performance validation against traditional machine learning, and the critical role of Explainable AI (XAI) for clinical trust. By synthesizing recent advances, this guide aims to bridge the gap between computational research and practical, reliable diagnostic tools for early ASD detection and intervention.

The Foundation of Deep Learning and Hyperparameter Tuning in ASD Diagnosis

Frequently Asked Questions (FAQs)

Q1: What are the core diagnostic challenges in Autism Spectrum Disorder (ASD) that computational approaches aim to solve?

ASD diagnosis faces several core challenges that create a need for computational solutions. The condition is heterogeneous in symptomatology, severity, and phenotype, yet it is defined by core symptoms of social communication deficits and restricted, repetitive behaviors [1]. Accurate identification is complicated because ASD is often enmeshed with other neurodevelopmental and medical comorbidities, a situation now considered the rule rather than the exception [1]. Furthermore, symptom presentation and severity can change over time, including unexpected loss of early skills [1]. The diagnostic process itself relies on observational methods and developmental history, as there is no medical biomarker for ASD [1].

Q2: What quantitative data highlights the urgency for improved and automated diagnostic methods?

The urgency is underscored by rapidly rising prevalence rates and disparities in identification. The table below summarizes key quantitative findings from recent surveillance data.

Table 1: Autism Spectrum Disorder (ASD) Prevalence and Identification Metrics

| Metric | Overall Figure | Key Disparities & Details |
|---|---|---|
| ASD Prevalence (8-year-olds) | 32.2 per 1,000 (1 in 31) [2] | Ranges from 9.7 (Laredo, TX) to 53.1 (California) per 1,000 [2]. |
| Prevalence by Sex | 3.4 times higher in boys [2] | 49.2 in boys vs. 14.3 in girls per 1,000 [2]. |
| Prevalence by Race/Ethnicity | Lower among White children [2] | White: 27.7; Asian/Pacific Islander: 38.2; American Indian/Alaska Native: 37.5; Black: 36.6; Hispanic: 33.0; Multiracial: 31.9 per 1,000 [2]. |
| Co-occurring Intellectual Disability | 39.6% of children with ASD [2] | Higher among minority groups than among White children: Black (52.8%), American Indian/Alaska Native (50.0%), Asian/Pacific Islander (43.9%), Hispanic (38.8%), White (32.7%) [2]. |
| Median Age of Diagnosis | 47 months [2] | Ranges from 36 months (CA) to 69.5 months (Laredo, TX) [2]. |
| Historical Prevalence Increase | ~300% over 20 years [3] | Driven by broadened definitions and increased awareness/screening [3]. |

Q3: What are the consequences of delayed or missed diagnosis?

Delayed or missed diagnosis can have significant, lifelong consequences. It denies individuals access to early intervention, which is critically associated with better functional outcomes in later life, including gains in cognition, language, and adaptive behavior [1]. For adults, a missed childhood diagnosis complicates identification later in life and is associated with a higher likelihood of co-occurring conditions like anxiety, depression, and other psychiatric disorders [4].

Q4: Which diagnostic instruments are most commonly used in ASD assessment?

The most common tests documented for children aged 8 years are the Autism Diagnostic Observation Schedule (ADOS-2), Autism Spectrum Rating Scales, Childhood Autism Rating Scale, Gilliam Autism Rating Scale, and Social Responsiveness Scale [2]. The ADOS-2 is considered a gold-standard assessment [4].

Troubleshooting Guides

Challenge: Navigating Comorbidities and Symptom Overlap

Problem: ASD symptoms frequently overlap with other conditions, leading to misdiagnosis or delayed diagnosis. Common co-occurring conditions include Attention-Deficit/Hyperactivity Disorder (ADHD), Developmental Language Disorder (DLD), Intellectual Disability (ID), and anxiety [1].

Solution:

- Systematic Comorbidity Screening: Actively screen for co-occurring conditions during the diagnostic process, as they can alter the presentation of core ASD symptoms [1].

- Differential Diagnosis Workflow: Implement a structured decision-making process to disentangle ASD from other conditions.

Challenge: Optimizing Hyperparameter Tuning for Diagnostic Models

Problem: Machine learning models for ASD diagnosis require careful hyperparameter tuning to maximize performance, avoid overfitting on heterogeneous data, and ensure generalizability.

Solution: Employ systematic hyperparameter optimization techniques to find the best model configuration.

Table 2: Comparison of Hyperparameter Tuning Methods

| Method | Mechanism | Best For | Advantages | Limitations |
|---|---|---|---|---|
| Grid Search [5] [6] | Brute-force search over all specified parameter combinations. | Smaller parameter spaces where exhaustive search is feasible. | Guaranteed to find the best combination within the grid. | Computationally intensive and slow for large datasets or many parameters [5]. |
| Random Search [5] [6] | Randomly samples parameter combinations from specified distributions. | Models with a small number of critical hyperparameters [6]. | Often finds good parameters faster than Grid Search [5]. | Can miss the optimal combination; efficiency depends on the random sampling. |
| Bayesian Optimization [5] [6] | Builds a probabilistic model to predict performance and chooses the next parameters intelligently. | Complex models with high-dimensional parameter spaces and expensive evaluations. | More efficient; learns from past evaluations to focus on promising areas [5] [6]. | More complex to implement; requires careful setup of the surrogate model and acquisition function. |
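The Grid/Random trade-off in the table can be seen on a toy problem. The sketch below assumes a hypothetical objective, `toy_validation_score`, in which only the learning rate matters, and gives both methods the same budget of 9 trials over log10(learning rate) in [-5, -1] and dropout in [0.1, 0.7]. In a real study the objective would train and validate a model.

```python
import itertools
import random


def toy_validation_score(log10_lr: float, dropout: float) -> float:
    """Hypothetical objective: peaks at lr = 10**-3.5; dropout is deliberately irrelevant."""
    return -((log10_lr + 3.5) ** 2)


def grid_search(points_per_axis: int = 3):
    """Evenly spaced grid: 3 x 3 = 9 trials, but only 3 distinct learning rates."""
    lrs = [-5.0 + 4.0 * i / (points_per_axis - 1) for i in range(points_per_axis)]
    dropouts = [0.1 + 0.6 * i / (points_per_axis - 1) for i in range(points_per_axis)]
    trials = list(itertools.product(lrs, dropouts))
    return max(trials, key=lambda t: toy_validation_score(*t)), trials


def random_search(n_trials: int = 9, seed: int = 0):
    """Random sampling: 9 trials give 9 distinct learning-rate values."""
    rng = random.Random(seed)
    trials = [(rng.uniform(-5.0, -1.0), rng.uniform(0.1, 0.7)) for _ in range(n_trials)]
    return max(trials, key=lambda t: toy_validation_score(*t)), trials


best_grid, grid_trials = grid_search()
best_rand, rand_trials = random_search()
print("grid best log10(lr):", best_grid[0], "| random best log10(lr):", round(best_rand[0], 3))
```

Grid search spends its 9 trials on only 3 distinct learning rates, while random search probes 9. This is the usual argument for random search when a few hyperparameters dominate performance.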

Experimental Protocol: Implementing Bayesian Optimization

- Define the Search Space: Specify the hyperparameters and their value ranges (e.g., learning rate: log-uniform between 1e-5 and 1e-1; number of layers in a neural network: [2, 3, 4, 5]).

- Choose a Performance Metric: Select the primary metric to optimize (e.g., accuracy, F1-score, AUC). The goal is typically to maximize this metric.

- Select a Surrogate Model: Common choices include Gaussian processes or random forest regressors, which model the relationship between hyperparameters and the target metric [5].

- Iterate and Evaluate:

- The algorithm selects a set of hyperparameters based on the surrogate model.

- A model is trained and evaluated using these hyperparameters.

- The result is used to update the surrogate model.

- This process repeats for a set number of iterations or until performance converges.

- Validate Best Combination: The best-performing set of hyperparameters from the optimization should be validated on a held-out test set.
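The protocol above can be sketched in plain Python for a single hyperparameter. This is a minimal illustration under stated assumptions, not a production optimizer: the search space is log10(learning rate) in [-5, -1], the objective is a hypothetical stand-in for validation performance, the Gaussian-process surrogate uses a fixed RBF length scale (0.5) and noise term (1e-4) instead of fitted values, and Expected Improvement is maximized over a fixed candidate grid. Dedicated frameworks (e.g., Optuna) handle these details in practice.

```python
import math
import random


def rbf(a, b, length=0.5):
    """Squared-exponential kernel with unit signal variance (length scale fixed, not fitted)."""
    return math.exp(-0.5 * ((a - b) / length) ** 2)


def invert(matrix):
    """Gauss-Jordan inverse with partial pivoting; adequate for the tiny matrices used here."""
    n = len(matrix)
    aug = [row[:] + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def fit_gp(xs, ys, noise=1e-4):
    """Condition a zero-mean GP on mean-centered observations."""
    n = len(xs)
    K = [[rbf(xs[i], xs[j]) + (noise if i == j else 0.0) for j in range(n)] for i in range(n)]
    K_inv = invert(K)
    y_mean = sum(ys) / n
    alpha = [sum(K_inv[i][j] * (ys[j] - y_mean) for j in range(n)) for i in range(n)]
    return K_inv, alpha, y_mean


def predict(xs, fit, x):
    """Posterior mean and standard deviation of the surrogate at x."""
    K_inv, alpha, y_mean = fit
    k = [rbf(a, x) for a in xs]
    mean = y_mean + sum(ki * ai for ki, ai in zip(k, alpha))
    quad = sum(k[i] * K_inv[i][j] * k[j] for i in range(len(k)) for j in range(len(k)))
    return mean, math.sqrt(max(1.0 - quad, 1e-12))


def expected_improvement(mean, std, best, xi=0.01):
    """EI for maximization: expected amount by which a candidate beats the incumbent."""
    z = (mean - best - xi) / std
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (mean - best - xi) * cdf + std * pdf


def bayes_opt(objective, low, high, n_init=3, n_iter=12, seed=0):
    """Maximize `objective` on [low, high]: random initial design, then GP + EI proposals."""
    rng = random.Random(seed)
    xs = [rng.uniform(low, high) for _ in range(n_init)]
    ys = [objective(x) for x in xs]
    candidates = [low + (high - low) * i / 200 for i in range(201)]
    for _ in range(n_iter):
        fit = fit_gp(xs, ys)                      # update the surrogate with all results so far
        best = max(ys)
        x_next = max(candidates, key=lambda c: expected_improvement(*predict(xs, fit, c), best))
        xs.append(x_next)                         # "train and evaluate" at the proposed point
        ys.append(objective(x_next))
    i_best = max(range(len(ys)), key=lambda i: ys[i])
    return xs[i_best], ys[i_best]


# Hypothetical objective over log10(learning rate); a real one would train and validate a model.
x_best, y_best = bayes_opt(lambda x: -(x + 3.5) ** 2, -5.0, -1.0)
print("suggested learning rate:", 10 ** x_best)
```

The returned `x_best` is a log10 value, so the suggested learning rate is `10 ** x_best`. As the protocol states, the chosen configuration should still be confirmed on a held-out test set.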

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Resources for ASD Diagnostic and Hyperparameter Tuning Research

| Item / Resource | Function / Purpose | Example Use-Case |
|---|---|---|
| ADOS-2 (Autism Diagnostic Observation Schedule) [2] [4] | Gold-standard, semi-structured assessment of social interaction, communication, and play for suspected ASD. | Providing standardized behavioral metrics as ground-truth labels for training diagnostic models. |
| Bayesian Optimization Frameworks (e.g., in Amazon SageMaker) [6] | Automates the hyperparameter tuning process using a probabilistic model to find the best combination efficiently. | Accelerating the development of high-performance deep learning models for classifying ASD based on clinical or biomarker data. |
| Structured Clinical Datasets | Datasets containing comprehensive developmental history, diagnostic outcomes, and co-occurring conditions. | Training and validating models to understand the complex, multi-factorial presentation of ASD. |
| Cross-Validation [5] | A resampling technique used to assess model generalizability by partitioning data into training and validation sets multiple times. | Preventing overfitting and ensuring that a tuned model performs well on unseen data from different demographics. |
| GridSearchCV & RandomizedSearchCV (e.g., in scikit-learn) [5] | Automated tools for exhaustive (GridSearchCV) or random (RandomizedSearchCV) hyperparameter search with cross-validation. | Systematically exploring the impact of key model parameters, such as the number of trees in a random forest or the C parameter in an SVM. |

FAQs: Model Selection and Performance

Q1: What are the key performance differences between Transformer, LSTM, and DNN architectures for ASD detection?

A1: Performance varies by data type and computational constraints. The table below summarizes quantitative findings from recent studies.

Table 1: Performance Comparison of Core Deep Learning Architectures for ASD Detection

| Architecture | Data Modality | Reported Performance | Key Strengths | Notable Citations |
|---|---|---|---|---|
| Transformer (RoBERTa) | Social Media Text | F1-score: 99.54% (hold-out), 96.05% (external test) | Superior performance on textual data, captures complex linguistic patterns. | [7] |
| Standard DNN (MLP) | Clinical/Behavioral Traits | 96.98% Accuracy, 99.75% AUC-ROC | High accuracy on structured tabular data, efficient for non-sequential data. | [8] |
| LSTM-based (GNN-LSTM) | rs-fMRI (Dynamic Functional Connectivity) | 80.4% Accuracy (ABIDE I) | Excels at capturing temporal dynamics in time-series brain data. | [9] |
| Hybrid (CNN-BiLSTM) | rs-fMRI & Phenotypic Data | 93% Accuracy, 0.93 AUC-ROC | Combines spatial feature extraction (CNN) with temporal modeling (LSTM). | [10] |
| LSTM with BERT Embeddings | Social Media Text | F1-score: >94% (external test) | Highly competitive performance with lower computational cost than full transformers. | [7] |

Q2: My LSTM model for analyzing fMRI sequences is overfitting. What hyperparameter tuning strategies are most effective?

A2: Overfitting in LSTMs is common with complex, high-dimensional data like fMRI. Focus on these hyperparameters:

- Regularization: Implement Dropout layers between LSTM units. A common starting point is a rate of 0.2 to 0.5. L2 regularization on the kernel and recurrent weights can also be applied [9].

- Architecture Simplification: Reduce the number of LSTM units per layer or use fewer layers. A deep stack of LSTM layers is often unnecessary and prone to overfitting.

- Learning Rate: Use a lower learning rate in combination with a learning rate scheduler (e.g., reduce on plateau) to ensure stable convergence without overshooting minima [10].
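The reduce-on-plateau recommendation can be made concrete with a small scheduler. Deep learning frameworks ship this built in (Keras `ReduceLROnPlateau`, PyTorch `torch.optim.lr_scheduler.ReduceLROnPlateau`); the class below is a framework-free sketch of the same idea, and its exact trigger rule (reduce after `patience` consecutive epochs without an improvement of at least `min_delta`) is an assumption that may differ in detail from those implementations.

```python
class ReduceOnPlateau:
    """Multiply the learning rate by `factor` after `patience` consecutive epochs in which
    the validation loss fails to improve by at least `min_delta` (sketch, not a framework API)."""

    def __init__(self, lr, factor=0.5, patience=3, min_lr=1e-6, min_delta=1e-4):
        self.lr = lr
        self.factor = factor
        self.patience = patience
        self.min_lr = min_lr
        self.min_delta = min_delta
        self.best = float("inf")
        self.bad_epochs = 0

    def step(self, val_loss):
        """Call once per epoch with the validation loss; returns the learning rate to use next."""
        if val_loss < self.best - self.min_delta:
            self.best, self.bad_epochs = val_loss, 0
        else:
            self.bad_epochs += 1
            if self.bad_epochs >= self.patience:
                self.lr = max(self.lr * self.factor, self.min_lr)
                self.bad_epochs = 0  # restart the count after each reduction
        return self.lr


scheduler = ReduceOnPlateau(lr=1e-3, factor=0.5, patience=2)
print([scheduler.step(v) for v in [1.0, 0.8, 0.8, 0.8, 0.8]])
```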

Q3: When should I consider a hybrid model like CNN-LSTM over a pure Transformer or DNN for my ASD detection project?

A3: A hybrid CNN-LSTM architecture is particularly advantageous when your data possesses both spatial and temporal characteristics. For instance:

- rs-fMRI Data: CNNs can extract spatial features from brain connectivity graphs or matrices, while LSTMs subsequently model the temporal evolution of these spatial patterns across time windows [10] [11].

- Video Data (for behavior analysis): CNNs can analyze spatial features within individual frames (e.g., body posture), and LSTMs can model the progression of these postures over time to identify repetitive behaviors [12].

Use a pure DNN for static, tabular data (e.g., questionnaire scores) and a Transformer for complex, long-sequence text data.

Q4: My Transformer model requires extensive computational resources. Are there efficient alternatives for deployment in resource-constrained settings?

A4: Yes, consider these alternatives that balance performance and efficiency:

- Distilled Transformers: Use smaller, pre-trained models like DistilBERT, which retains most of BERT's performance while being faster and smaller [7].

- LSTM with Advanced Embeddings: An LSTM model augmented with pre-trained embeddings (e.g., from BERT) can achieve highly competitive performance, as shown by F1-scores exceeding 94%, with significantly lower computational demands [7].

- Optimized DNNs: For non-sequential data, a well-tuned DNN can achieve state-of-the-art results (e.g., >96% accuracy) without the overhead of sequential models [8].

Experimental Protocols & Methodologies

This section provides detailed protocols for implementing the core architectures discussed.

Protocol: Implementing a DNN for Clinical Data

This protocol is based on a study that achieved 96.98% accuracy using a Deep Neural Network (DNN) on clinical and trait data [8].

- Data Preprocessing:

- Handling Missing Values: Impute missing numerical values (e.g., Social Responsiveness Scale, Qchat-10-Score) using the mean. Impute categorical variables (e.g., Speech Delay, Sex) using the mode.

- Normalization: Standardize numerical features using Z-score normalization (mean=0, standard deviation=1).

- Encoding: Encode binary categorical variables (e.g., "ASD Traits") as 0/1. Apply one-hot encoding to multi-class variables (e.g., "Ethnicity").

- Feature Selection:

- Employ a multi-strategy approach to identify the most predictive features.

- Use LASSO regression to eliminate features with low importance.

- Use Random Forest to rank features by Gini importance.

- Combine the results to select a robust feature set; in [8], Qchat10Score and ethnicity were identified as key predictors.

- Model Architecture & Hyperparameters:

- Architecture: A fully connected feedforward network (Multilayer Perceptron) with an input layer, two hidden layers, and an output layer.

- Hidden Layers: Use 64 and 32 units in the first and second hidden layers, respectively, with ReLU activation functions.

- Regularization: Apply dropout (rate=0.3) after each hidden layer to prevent overfitting.

- Output Layer: Use a single unit with sigmoid activation for binary classification.

- Optimizer: Adam optimizer with a learning rate of 0.001.

- Loss Function: Binary cross-entropy.

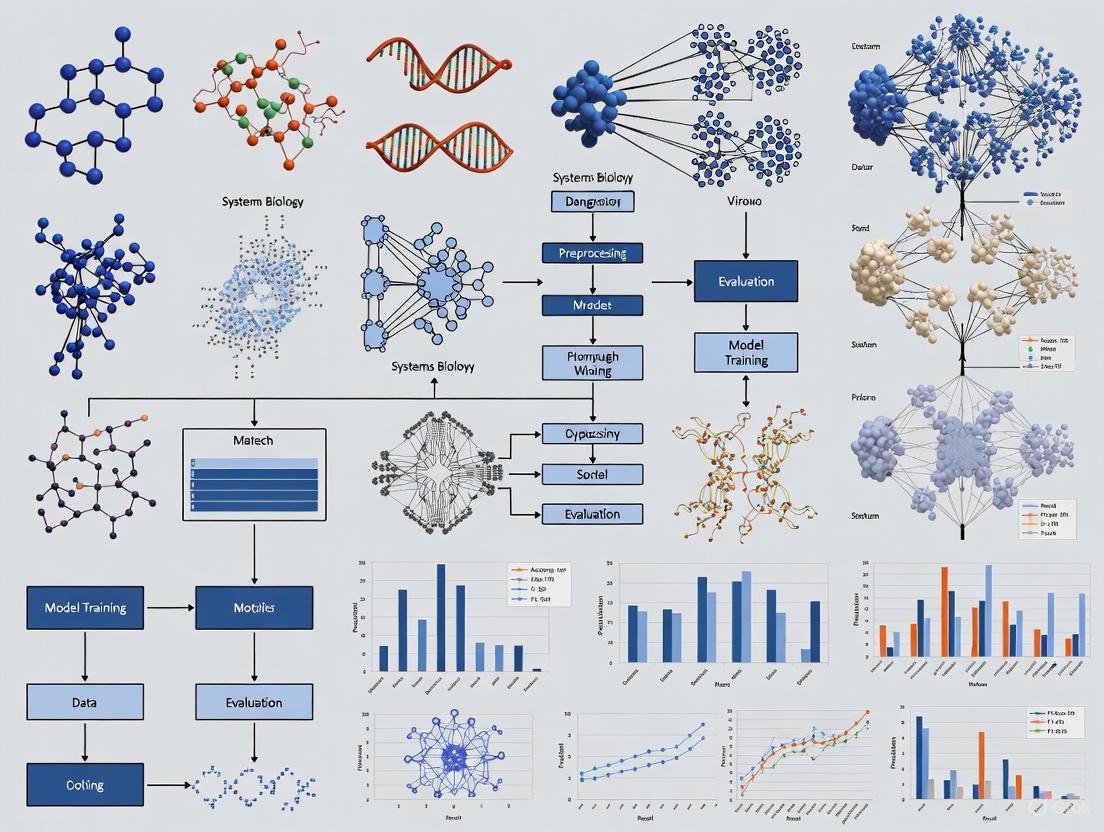

Diagram Title: DNN Experimental Workflow for Clinical Data
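The preprocessing step of this protocol can be sketched with the standard library alone. Column names such as `qchat`, `traits`, and `eth` are hypothetical placeholders for fields like Qchat-10-Score, ASD Traits, and Ethnicity, and the `"Yes"`/`"No"` coding of the binary column is an assumption about the raw data. For brevity the sketch fits and transforms in one call; in a real pipeline the imputation values, z-score statistics, and one-hot levels should be computed on the training split only and reused for validation and test data, to avoid leakage.

```python
from statistics import mean, mode, pstdev


def preprocess(rows, numeric_cols, binary_cols, multiclass_cols):
    """Impute, standardize, and encode records given as dicts (None marks a missing value)."""
    # 1. Imputation: mean for numeric columns, mode for categorical columns.
    fill = {c: mean(r[c] for r in rows if r[c] is not None) for c in numeric_cols}
    fill.update({c: mode(r[c] for r in rows if r[c] is not None)
                 for c in binary_cols + multiclass_cols})
    imputed = [{c: (fill[c] if r[c] is None and c in fill else r[c]) for c in r} for r in rows]

    # 2. Z-score parameters (population std; a constant column keeps scale 1).
    scale = {c: (mean(r[c] for r in imputed), pstdev([r[c] for r in imputed]) or 1.0)
             for c in numeric_cols}
    levels = {c: sorted({r[c] for r in imputed}) for c in multiclass_cols}

    # 3. Encoding: binary -> 0/1, multi-class -> one-hot columns named "col=level".
    encoded = []
    for r in imputed:
        out = {c: (r[c] - scale[c][0]) / scale[c][1] for c in numeric_cols}
        out.update({c: int(r[c] in (1, True, "Yes", "yes")) for c in binary_cols})
        for c in multiclass_cols:
            out.update({f"{c}={lvl}": int(r[c] == lvl) for lvl in levels[c]})
        encoded.append(out)
    return encoded


rows = [
    {"qchat": 2.0, "traits": "Yes", "eth": "A"},
    {"qchat": None, "traits": "Yes", "eth": "B"},
    {"qchat": 6.0, "traits": "No", "eth": "A"},
    {"qchat": 4.0, "traits": None, "eth": "C"},
]
encoded = preprocess(rows, ["qchat"], ["traits"], ["eth"])
print(encoded[3])
```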

Protocol: Implementing a GNN-LSTM for Dynamic Functional Connectivity (rs-fMRI)

This protocol is based on a study that used a GNN-LSTM model to achieve 80.4% accuracy on the ABIDE I dataset by analyzing dynamic functional connectivity in rs-fMRI data [9].

- Data Preprocessing & DFC Construction:

- Preprocessing: Use standard rs-fMRI preprocessing pipelines (e.g., from FSL or SPM) for slice-timing correction, motion correction, and normalization.

- Sliding Window: Apply a sliding window (e.g., a Hamming window) to each preprocessed BOLD time series to partition it into multiple temporal segments.

- DFC Calculation: For each time window, compute a functional connectivity matrix (e.g., using Pearson correlation) between defined brain regions (ROIs). This results in a Dynamic Functional Connectivity (DFC) series for each subject.

- Model Architecture (GNN-LSTM):

- Spatial Feature Extraction (GNN): Feed each DFC matrix (representing a brain graph) into a Graph Neural Network. The GNN learns to capture the spatial relationships and interactions between different brain regions at each time point.

- Temporal Sequence Modeling (LSTM): The sequence of node representations (or graph-level embeddings) output by the GNN across consecutive time windows is fed into an LSTM. The LSTM learns the temporal dependencies and dynamics of the brain network's evolution.

- Jump Connections: Implement jump connections between GNN-LSTM units to enhance information flow and capture features at different time scales, addressing the variable dependence of DFC on time scales [9].

- Dynamic Graph Pooling (DG-Pool): Use a dedicated pooling operation to aggregate the dynamic graph representations from all time windows into a final feature representation for classification.
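The DFC construction step can be illustrated as follows. This sketch assumes ROI time series have already been extracted as equal-length lists, uses the Hamming window as a per-sample weight inside each window (one common way to apply it), and computes a weighted Pearson correlation for every ROI pair. Window length and step are hypothetical values, and signals must not be constant within a window, or the correlation is undefined.

```python
import math


def hamming(n):
    """Hamming taper of length n (n >= 2)."""
    return [0.54 - 0.46 * math.cos(2 * math.pi * i / (n - 1)) for i in range(n)]


def weighted_pearson(x, y, w):
    """Pearson correlation of x and y with per-sample weights w."""
    sw = sum(w)
    mx = sum(wi * xi for wi, xi in zip(w, x)) / sw
    my = sum(wi * yi for wi, yi in zip(w, y)) / sw
    cov = sum(wi * (xi - mx) * (yi - my) for wi, xi, yi in zip(w, x, y))
    vx = sum(wi * (xi - mx) ** 2 for wi, xi in zip(w, x))
    vy = sum(wi * (yi - my) ** 2 for wi, yi in zip(w, y))
    return cov / math.sqrt(vx * vy)


def dynamic_fc(roi_series, window, step):
    """Return one n_rois x n_rois correlation matrix per sliding window.

    roi_series: list of equal-length BOLD time series, one per ROI.
    """
    length = len(roi_series[0])
    taper = hamming(window)
    matrices = []
    for start in range(0, length - window + 1, step):
        segs = [s[start:start + window] for s in roi_series]
        n = len(segs)
        matrices.append([[1.0 if i == j else weighted_pearson(segs[i], segs[j], taper)
                          for j in range(n)] for i in range(n)])
    return matrices


# Three synthetic "ROIs": b is a linear copy of a, c is its inverse.
a = [math.sin(0.7 * t) for t in range(20)]
b = [2 * v + 1 for v in a]
c = [-v for v in a]
dfc = dynamic_fc([a, b, c], window=10, step=5)
print(len(dfc), "windows")
```

The resulting list of matrices is the DFC series that the GNN consumes, one brain graph per time window.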

Protocol: Fine-Tuning a Transformer for Text-Based Detection

This protocol is based on studies using transformer models like BERT and RoBERTa for detecting ASD from social media text [7].

- Data Preparation:

- Text Cleaning: Perform basic text cleaning (e.g., removing URLs, user mentions, and non-alphanumeric characters).

- Tokenization: Use the tokenizer specific to the pre-trained transformer model you select (e.g., BertTokenizer, RobertaTokenizer).

- Model Selection and Fine-Tuning:

- Base Model: Start with a pre-trained model like RoBERTa or BERT from Hugging Face's Transformers library.

- Classification Head: Add a custom classification layer (a linear layer) on top of the pre-trained model's [CLS] token output.

- Hyperparameters:

- Learning Rate: Use a small learning rate (e.g., 2e-5 to 5e-5) for fine-tuning to avoid catastrophic forgetting.

- Batch Size: Use the largest batch size that fits your GPU memory (e.g., 16, 32).

- Epochs: Typically 3-5 epochs are sufficient for fine-tuning, monitoring for overfitting on a validation set.

- Regularization:

- Use dropout in the final classification layer.

- Weight Decay can be applied as an additional regularizer.
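The text-cleaning step might look like this. The regular expressions are assumptions that implement the three operations listed (URLs, user mentions, non-alphanumeric characters). The last rule also strips emoji and non-ASCII letters, which may carry signal, so it is worth checking against the chosen tokenizer rather than applying it blindly. Case is preserved because RoBERTa and cased BERT variants make use of it.

```python
import re

URL_RE = re.compile(r"https?://\S+|www\.\S+")
MENTION_RE = re.compile(r"@\w+")
NON_ALNUM_RE = re.compile(r"[^0-9A-Za-z\s]")  # keeps ASCII letters/digits only (assumption)
SPACE_RE = re.compile(r"\s+")


def clean_text(text: str) -> str:
    """Remove URLs, @-mentions, and non-alphanumeric characters, then normalize whitespace."""
    text = URL_RE.sub(" ", text)
    text = MENTION_RE.sub(" ", text)  # must run before NON_ALNUM_RE, which would strip "@"
    text = NON_ALNUM_RE.sub(" ", text)
    return SPACE_RE.sub(" ", text).strip()


print(clean_text("@mom_of_3 My son (4y) just got his ADOS-2 results! https://example.org/x"))
```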

Table 2: Key Resources for Deep Learning-based ASD Detection Research

| Resource Name / Type | Function / Application | Example Sources / Citations |
|---|---|---|
| ABIDE Dataset | A large-scale, publicly available repository of brain imaging (fMRI, sMRI) and phenotypic data from individuals with ASD and typically developing controls for training and validation. | [9] [13] [10] |
| Kaggle ASD Datasets | Hosts various datasets, including clinical trait data (e.g., from University of Arkansas) and facial image datasets for training models on non-imaging modalities. | [8] [12] [14] |
| Q-CHAT-10 Questionnaire | A 10-item screening tool for ASD in toddlers. Its score is frequently used as a key predictive feature in models trained on clinical/behavioral data. | [8] [15] |
| Pre-trained Transformer Models (e.g., BERT, RoBERTa) | Foundational NLP models that can be fine-tuned on domain-specific text (e.g., social media posts) for ASD classification, saving computational resources and time. | [7] |
| YOLOv11 Model | A state-of-the-art object detection model used for real-time analysis of video data to classify ASD-typical repetitive behaviors (e.g., hand flapping, body rocking). | [12] |
| SHAP (Shapley Additive Explanations) | An Explainable AI (XAI) library used to interpret the output of machine learning models, helping to identify which features (e.g., social responsiveness score) most influenced a diagnosis. | [16] |
| Tree-based Pipeline Optimization Tool (TPOT) | An Automated Machine Learning (AutoML) tool that automatically designs and optimizes machine learning pipelines, useful for rapid model prototyping on structured data. | [15] |

Diagram Title: Architecture Selection Guide Based on Data Type

Frequently Asked Questions (FAQs)

Q1: What are hyperparameters and how do they differ from model parameters?

Hyperparameters are configuration variables whose values are set before the training process begins and control fundamental aspects of how a machine learning algorithm learns [17] [18]. Unlike model parameters (such as weights and biases in a neural network) that are learned automatically from the data during training, hyperparameters are not derived from data but are explicitly specified by the researcher [19] [20]. They act as the crucial "levers" that govern the learning process, influencing everything from model architecture to optimization behavior and convergence rates [19].

Q2: Which hyperparameters are most critical when tuning deep learning models for ASD detection?

In deep learning applications for Autism Spectrum Disorder (ASD) detection, several hyperparameters consistently demonstrate significant impact:

- Learning Rate: Controls step size during optimization; critically affects convergence in ASD detection models [19] [18]

- Batch Size: Influences training stability and generalization capability, particularly important when working with diverse ASD datasets [19]

- Number of Epochs: Determines training duration; essential for preventing overfitting on limited ASD data [19]

- Dropout Rate: Regularization parameter crucial for preventing overfitting in deep neural networks analyzing behavioral patterns [19] [18]

- Optimizer Selection: Algorithm choice (Adam, SGD, RMSprop) significantly impacts training efficiency for complex ASD detection tasks [19]

Q3: What optimization methods are most efficient for hyperparameter tuning in medical imaging applications like ASD detection?

For computationally intensive tasks like ASD detection, Bayesian optimization typically provides the best balance between efficiency and performance [21] [17] [20]. This method builds a probabilistic model of the objective function and uses it to select the most promising hyperparameters to evaluate next, dramatically reducing the number of experiments needed compared to exhaustive methods [19] [20]. When resources allow parallel computation, Population Based Training (PBT) and BOHB (Bayesian Optimization and HyperBand) offer excellent alternatives by combining multiple optimization strategies [21] [17].

Q4: How can researchers avoid overfitting during hyperparameter optimization for ASD diagnosis models?

The most effective strategy employs nested cross-validation, where an outer loop estimates generalization error while an inner loop performs the hyperparameter optimization [17]. This prevents information leakage from the validation set to the model selection process. Additionally, researchers should:

- Keep validation data separate from training data and use it only for model selection and early stopping [17]

- Implement early stopping based on validation performance [19]

- Apply appropriate regularization techniques (L1/L2, dropout) tuned via the optimization process [19] [18]

- Maintain completely independent test sets for final evaluation only [17]
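Nested cross-validation can be sketched without any libraries. Here a 1-D k-nearest-neighbor classifier stands in for an ASD model, its `k` is the tuned hyperparameter, and the data are synthetic Gaussian samples; all of these are assumptions for illustration. The key property is structural: the inner loop that picks `k` only ever sees the outer training folds, so each outer score comes from data untouched by tuning. Folds are plain strided splits of shuffled data; stratified or subject-wise splits would be preferable for real clinical data.

```python
import random


def knn_predict(train, x, k):
    """Majority vote of the k nearest training points (1-D feature, labels 0/1, odd k)."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return 1 if 2 * sum(label for _, label in nearest) > k else 0


def accuracy(train, test, k):
    return sum(knn_predict(train, x, k) == y for x, y in test) / len(test)


def folds(data, n_folds):
    """Strided split of (already shuffled) data into n_folds parts."""
    return [data[i::n_folds] for i in range(n_folds)]


def cv_accuracy(data, k, n_folds):
    """Mean k-NN accuracy over an n_folds cross-validation of `data`."""
    parts = folds(data, n_folds)
    scores = []
    for m, val in enumerate(parts):
        fit = [p for n, part in enumerate(parts) if n != m for p in part]
        scores.append(accuracy(fit, val, k))
    return sum(scores) / len(scores)


def nested_cv(data, k_grid, outer=5, inner=3):
    """Outer loop estimates generalization; inner loop tunes k on outer-training data only."""
    outer_scores, chosen = [], []
    parts = folds(data, outer)
    for i, test in enumerate(parts):
        train = [p for j, part in enumerate(parts) if j != i for p in part]
        best_k = max(k_grid, key=lambda k: cv_accuracy(train, k, inner))  # inner loop
        chosen.append(best_k)
        outer_scores.append(accuracy(train, test, best_k))  # scored once on the held-out fold
    return outer_scores, chosen


rng = random.Random(42)
data = [(rng.gauss(0.0, 1.0), 0) for _ in range(40)] + [(rng.gauss(2.0, 1.0), 1) for _ in range(40)]
rng.shuffle(data)
scores, ks = nested_cv(data, k_grid=[1, 3, 5, 7])
print([round(s, 2) for s in scores], ks)
```

Reporting the mean and spread of the outer scores, together with how often each `k` was chosen, gives a more honest picture than a single tuned validation score.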

Troubleshooting Guides

Problem: Model Performance Plateaus During Training

Symptoms: Validation metrics stop improving or fluctuate minimally across epochs; training loss decreases but validation loss stagnates.

Potential Causes and Solutions:

Inappropriate Learning Rate

- Diagnosis: Check if training loss changes very slowly or oscillates wildly

- Solution: Implement learning rate scheduling or reduction on plateau; try values between 0.1 and 1e-5 [19]

Insufficient Model Capacity

- Diagnosis: Both training and validation performance remain poor

- Solution: Increase model complexity (more layers/units) while monitoring for overfitting [18]

Vanishing/Exploding Gradients

- Diagnosis: Check gradient norms across layers; look for extreme values

- Solution: Use proper weight initialization strategies, batch normalization, or gradient clipping [19]
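The gradient-norm check and the clipping remedy can be sketched as below. Frameworks provide this directly (PyTorch `torch.nn.utils.clip_grad_norm_`, TensorFlow `tf.clip_by_global_norm`); the plain-Python version, which treats each layer's gradient as a list of floats, only shows the arithmetic: compute the global L2 norm across all layers and, if it exceeds `max_norm`, rescale every gradient by the same factor so that the update direction is preserved.

```python
import math


def layer_norms(grads):
    """L2 norm of each layer's gradient (useful for spotting vanishing or exploding layers)."""
    return [math.sqrt(sum(g * g for g in layer)) for layer in grads]


def clip_by_global_norm(grads, max_norm):
    """Rescale all gradients by one factor so that their global L2 norm is at most max_norm."""
    total = math.sqrt(sum(g * g for layer in grads for g in layer))
    if total <= max_norm:
        return grads, total
    scale = max_norm / total
    return [[g * scale for g in layer] for layer in grads], total


grads = [[3.0, 4.0], [0.0]]  # hypothetical per-layer gradients
clipped, norm_before = clip_by_global_norm(grads, max_norm=1.0)
print(layer_norms(grads), norm_before, clipped)
```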

Problem: Model Overfits to Training Data

Symptoms: Excellent training performance with significantly worse validation/test performance; model memorizes training examples.

Potential Causes and Solutions:

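Insufficient Regularization

- Diagnosis: Training loss keeps falling while validation loss rises, and the model uses little or no dropout or weight penalty

- Solution: Add or strengthen dropout and L1/L2 weight penalties, and include their strength in the hyperparameter search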

Excessive Model Complexity

- Diagnosis: Model has significantly more parameters than training examples

- Solution: Reduce network depth/width, implement early stopping, or use stronger regularization [19]
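To apply the diagnosis above, count the trainable parameters and compare the total with the number of training records. The helper below counts the weights and biases of a fully connected network; the example reuses the 64- and 32-unit hidden layers from the DNN protocol earlier, while the 10 input features are a hypothetical choice.

```python
def mlp_param_count(layer_sizes):
    """Weights plus biases of a fully connected network, e.g. [n_inputs, 64, 32, 1]."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


n_params = mlp_param_count([10, 64, 32, 1])  # 10 input features is a hypothetical choice
print(n_params)
```

With 10 inputs this gives 2,817 parameters (704 + 2,080 + 33). A dataset with only a few hundred labeled cases would sit well below that, which is when early stopping, dropout, or a smaller network become important.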

Inadequate Training Data

- Diagnosis: Limited dataset size for complex ASD detection task

- Solution: Apply data augmentation techniques specific to your modality (images, signals, etc.) or investigate transfer learning [22]

Experimental Protocols for Hyperparameter Optimization

Protocol 1: Bayesian Optimization for DNN Architecture Search

This protocol is ideal for optimizing complex deep neural network architectures for ASD detection tasks where computational resources are limited and evaluation is expensive [19] [20].

Procedure:

- Define hyperparameter search spaces (learning rate: log-uniform [1e-5, 1e-1], dropout rate: uniform [0.1, 0.7], hidden units: [64, 128, 256, 512])

- Initialize with 10 random configurations, evaluate on validation set

- Build Gaussian process surrogate model mapping hyperparameters to validation accuracy

- For 50 iterations:

- Select next hyperparameters using acquisition function (Expected Improvement)

- Train model with selected hyperparameters

- Update surrogate model with results

- Return best performing configuration
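For reference, the Expected Improvement acquisition named in the procedure is commonly written as follows for maximization, where μ(x) and σ(x) are the Gaussian-process posterior mean and standard deviation, f⁺ is the best validation score observed so far, ξ ≥ 0 is an optional exploration margin, and Φ and φ are the standard normal CDF and PDF:

```latex
\mathrm{EI}(x) =
\begin{cases}
  \left(\mu(x) - f^{+} - \xi\right)\Phi(Z) + \sigma(x)\,\varphi(Z), & \sigma(x) > 0,\\
  0, & \sigma(x) = 0,
\end{cases}
\qquad
Z = \frac{\mu(x) - f^{+} - \xi}{\sigma(x)}.
```

The first term rewards candidates whose predicted score already exceeds the incumbent (exploitation); the second rewards predictive uncertainty (exploration).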

Expected Outcomes: Results reported in [20] suggest that Bayesian optimization can reach strong configurations within 50-100 evaluations, whereas random search may need 1000+ trials to match them.

Protocol 2: Population Based Training for Adaptive Hyperparameter Tuning

PBT is particularly effective for deep learning models in ASD research where optimal hyperparameters may change during training [17].

Procedure:

- Initialize population of 10-20 models with random hyperparameters

- Train all models in parallel for 1000 steps each

- Every 100 steps:

- Rank models by validation performance

- Bottom 20% "exploit" by copying parameters from top 20%

- "Explore" by perturbing hyperparameters (learning rate ±20%, etc.)

- Continue until convergence or computational budget exhausted
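The PBT loop can be sketched on a deliberately tiny problem. Each population member is a single scalar weight `theta` trained by gradient ascent on a hypothetical objective, -(theta - 3)^2, which stands in for validation performance. Population size (10), total steps (1000), and the 100-step exploit/explore interval follow the procedure above, and multiplying the learning rate by 0.8 or 1.2 is one concrete reading of "±20%". Real PBT would copy network checkpoints rather than one number.

```python
import random


def pbt(pop_size=10, total_steps=1000, interval=100, seed=0):
    """Population Based Training on a toy problem; returns the best member at the end."""
    rng = random.Random(seed)
    population = [{"theta": 0.0, "lr": 10 ** rng.uniform(-4, 0)} for _ in range(pop_size)]

    def score(member):
        return -(member["theta"] - 3.0) ** 2  # stands in for validation performance

    for step in range(1, total_steps + 1):
        for m in population:
            m["theta"] += m["lr"] * (-2.0 * (m["theta"] - 3.0))  # one gradient-ascent step
        if step % interval == 0:
            ranked = sorted(population, key=score, reverse=True)
            cut = max(1, pop_size // 5)  # top and bottom 20%
            for loser in ranked[-cut:]:
                winner = rng.choice(ranked[:cut])
                loser["theta"] = winner["theta"]                     # exploit: copy weights
                loser["lr"] = winner["lr"] * rng.choice([0.8, 1.2])  # explore: perturb lr
    return max(population, key=score)


best_member = pbt()
print(best_member)
```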

Applications: Successfully applied to neural architecture search and reinforcement learning tasks, including DDPG frameworks for ASD intervention personalization [8] [17].

Table 1: Performance Comparison of Hyperparameter Optimization Methods

| Method | Computational Cost | Best Use Cases | Advantages | Limitations |
|---|---|---|---|---|
| Grid Search [21] [17] | High (exponential in parameters) | Small parameter spaces (<5 parameters) | Guaranteed to find best in grid; easily parallelized | Curse of dimensionality; inefficient for large spaces |
| Random Search [21] [17] | Medium (linear in iterations) | Medium to large parameter spaces | More efficient than grid; easily parallelized | No guarantee of optimality; may miss important regions |
| Bayesian Optimization [21] [19] [20] | Low (intelligent sampling) | Expensive evaluations; limited budget | Most efficient for costly functions; models uncertainty | Sequential nature limits parallelization; complex implementation |
| Population Based Training [21] [17] | Medium (parallel population) | Dynamic hyperparameter schedules | Adapts during training; combines parallel and sequential | Complex implementation; requires significant resources |

Table 2: Key Hyperparameters in ASD Detection Models

| Hyperparameter | Typical Range | Impact on Model | ASD-Specific Considerations |
|---|---|---|---|
| Learning Rate [19] | 1e-5 to 0.1 | Controls optimization step size; critical for convergence | Lower rates often needed for fine-tuning on limited ASD data |
| Batch Size [19] | 16 to 256 | Affects gradient stability and generalization | Smaller batches may help with diverse ASD presentation patterns |
| Dropout Rate [19] [18] | 0.1 to 0.7 | Regularization to prevent overfitting | Critical for models trained on limited ASD datasets |
| Number of Epochs [19] | 10 to 1000 | Training duration; balances under/overfitting | Early stopping essential given ASD dataset limitations |
| Hidden Units/Layers [18] | 64-1024 units; 2-10 layers | Model capacity and complexity | Deeper networks for complex ASD behavior patterns [8] |

Research Reagent Solutions

Table 3: Essential Computational Tools for Hyperparameter Optimization in ASD Research

| Tool/Resource | Function | Application in ASD Research |
|---|---|---|
| Optuna [20] | Bayesian optimization framework | Efficient hyperparameter search for DNN-based ASD detection |
| Scikit-learn [5] | Machine learning library with GridSearchCV and RandomizedSearchCV | Traditional ML models for ASD screening questionnaires |
| TensorFlow/PyTorch [19] | Deep learning frameworks | Building custom DNN architectures for ASD detection |
| Weights & Biases | Experiment tracking | Monitoring hyperparameter experiments across ASD datasets |
| ASD Datasets [8] [22] | Standardized behavioral data | Training and validating models (eye tracking, behavioral traits) |

Workflow Visualization

The Critical Link Between Hyperparameter Optimization and Diagnostic Accuracy

FAQs: Hyperparameter Optimization in ASD Diagnostic Models

Q1: Why is hyperparameter optimization particularly critical for ASD diagnosis compared to other machine learning applications?

In ASD diagnosis, model performance directly affects healthcare outcomes. Well-optimized hyperparameters help the model capture complex, heterogeneous behavioral patterns while avoiding overfitting to small or imbalanced clinical datasets. Reported results indicate that careful tuning can raise diagnostic accuracy by more than 4 percentage points [16], which can translate into more reliable early detection and intervention opportunities.

Q2: What are the most effective hyperparameter optimization strategies for deep learning models analyzing behavioral data like eye-tracking or EEG?

For complex data modalities like eye-tracking and EEG, Bayesian optimization and multi-fidelity methods like Hyperband are most effective. Bayesian optimization efficiently navigates high-dimensional hyperparameter spaces for deep architectures (CNNs, Transformers), while Hyperband dynamically allocates resources to promising configurations, crucial given the computational expense of training on large time-series data [23] [24]. Population-based methods like PBT also show promise for adapting hyperparameters during training itself.

Q3: How can I diagnose if my ASD detection model is suffering from poor hyperparameter choices?

Key indicators include:

- A large gap between training and validation performance, signaling overfitting. This is common when overly complex models are trained on small biomedical datasets.

- Consistently low accuracy, precision, or recall across multiple training runs, even with different data splits [25].

- Training instability, such as exploding or vanishing gradients, often related to improper learning rate or batch size settings.

- Failure to converge within expected iterations, potentially due to poorly chosen optimizers or learning rate schedules.
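Two of these indicators can be checked mechanically from logged loss curves. The sketch below, using hypothetical curves, computes the per-epoch validation-minus-training loss gap and the epoch that patience-based early stopping would select; the patience value is an assumption to tune.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Best epoch at the moment training would stop, or None if stopping never triggers."""
    best, best_epoch, wait = float("inf"), None, 0
    for epoch, loss in enumerate(val_losses):
        if loss < best - min_delta:
            best, best_epoch, wait = loss, epoch, 0
        else:
            wait += 1
            if wait >= patience:
                return best_epoch
    return None


def generalization_gap(train_losses, val_losses):
    """Per-epoch validation-minus-training loss; a steadily widening gap suggests overfitting."""
    return [v - t for t, v in zip(train_losses, val_losses)]


train_curve = [0.9, 0.6, 0.4, 0.3, 0.2, 0.1]   # hypothetical logged losses
val_curve = [1.0, 0.8, 0.7, 0.72, 0.75, 0.8]
print(early_stopping_epoch(val_curve), generalization_gap(train_curve, val_curve))
```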

Q4: What are the practical trade-offs between different optimization algorithms (e.g., Bayesian vs. Random Search) in clinical research settings?

Table: Comparison of Hyperparameter Optimization Methods

| Method | Computational Cost | Best For | Sample Efficiency | Implementation Complexity |
|---|---|---|---|---|
| Grid Search | Very High | Small search spaces (<5 parameters) | Low | Low |
| Random Search | High | Moderate search spaces | Medium | Low |
| Bayesian Optimization | Medium | Expensive model evaluations | High | Medium |
| Hyperband | Low-Medium | Deep learning with early stopping | Medium-High | Medium |
| Gradient-based | Low | Differentiable hyperparameters | High | High |

Random Search provides a good baseline and is often more efficient than Grid Search. Bayesian Optimization is preferable when model evaluation is costly (e.g., large neural networks), as it requires fewer iterations. For very resource-intensive training, multi-fidelity approaches like Hyperband provide the best practical results by early-stopping poorly performing trials [23] [24].
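The early-stopping mechanism behind Hyperband is successive halving, sketched below. This is a single successive-halving bracket, not full Hyperband, which runs several such brackets with different starting budgets. `toy_evaluate` is a hypothetical learning curve; in practice it would train the configuration for `budget` epochs (ideally resuming from a checkpoint) and return a validation score. With `eta=3`, only the top third of configurations survives each rung while the budget triples.

```python
import math
import random


def toy_evaluate(config, budget):
    """Hypothetical learning curve: the score rises with budget toward the config's ceiling."""
    return config["quality"] * (1.0 - math.exp(-budget / 5.0))


def successive_halving(configs, evaluate, min_budget=1, eta=3):
    """Evaluate all configs at a small budget, keep the top 1/eta, multiply the budget by eta."""
    survivors, budget, history = list(configs), min_budget, []
    while len(survivors) > 1:
        history.append((budget, len(survivors)))
        ranked = sorted(survivors, key=lambda c: evaluate(c, budget), reverse=True)
        survivors = ranked[:max(1, len(survivors) // eta)]
        budget *= eta
    return survivors[0], history


rng = random.Random(1)
configs = [{"id": i, "quality": rng.random()} for i in range(27)]
best_config, rungs = successive_halving(configs, toy_evaluate)
print(best_config["id"], rungs)  # rungs: (budget, number of configs evaluated)
```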

Troubleshooting Guides

Issue 1: Model Performance Saturation Despite Extensive Tuning

Symptoms: Metrics plateau across optimization trials; minimal improvement despite broad hyperparameter ranges.

Diagnosis and Resolution:

Evaluate Data Quality and Feature Relevance

- Check for redundant or irrelevant features using SHAP or permutation importance. In ASD diagnosis, social responsiveness scores and repetitive behavior scales are often top predictors [16].

- Ensure proper data preprocessing: normalize numerical features, handle missing values (imputation for clinical scores), and encode categorical variables (one-hot for ethnicity) [8].
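Below is a minimal pure-Python sketch of these preprocessing steps. It assumes each row is a dictionary with `None` marking a missing value and that categorical values are never missing. The column names `srs_total`, `age`, and `ethnicity` are hypothetical. The key design choice is that medians, means, standard deviations, and category levels are learned from the training rows only. They are then reused unchanged for validation and test rows, which avoids leaking information from held-out data.

```python
import statistics

def fit_preprocessor(rows, numeric_cols, categorical_cols):
    """Learn imputation/scaling statistics and category levels from TRAINING rows."""
    stats = {}
    for col in numeric_cols:
        observed = [r[col] for r in rows if r[col] is not None]
        median = statistics.median(observed)              # imputation value
        filled = [r[col] if r[col] is not None else median for r in rows]
        mean = statistics.fmean(filled)
        std = statistics.pstdev(filled) or 1.0            # guard constant columns
        stats[col] = (median, mean, std)
    # Categorical values are assumed non-missing in this sketch.
    levels = {col: sorted({r[col] for r in rows}) for col in categorical_cols}
    return stats, levels

def transform(row, stats, levels):
    """Impute with the training median, z-score numerics, one-hot categoricals."""
    out = []
    for col, (median, mean, std) in stats.items():
        value = row[col] if row[col] is not None else median
        out.append((value - mean) / std)
    for col, cats in levels.items():
        # A category unseen in training becomes an all-zero vector.
        out.extend(1.0 if row[col] == c else 0.0 for c in cats)
    return out

train = [
    {"srs_total": 60.0, "age": 4.0, "ethnicity": "A"},
    {"srs_total": None, "age": 6.0, "ethnicity": "B"},
    {"srs_total": 80.0, "age": 5.0, "ethnicity": "A"},
]
stats, levels = fit_preprocessor(train, ["srs_total", "age"], ["ethnicity"])
print(transform(train[1], stats, levels))
```

In scikit-learn, the same fit-on-train, transform-everywhere pattern is usually built from `SimpleImputer`, `StandardScaler`, and `OneHotEncoder` inside a `ColumnTransformer`.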

Address Dataset Limitations

- ASD datasets often have limited samples and class imbalance. Apply the Synthetic Minority Over-sampling Technique (SMOTE) to the training split only, or adjust class weights in the loss function.

- Use transfer learning from pre-trained models on larger datasets, then fine-tune on specific ASD data [25].
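Both remedies for class imbalance are sketched below under simplifying assumptions. `balanced_class_weights` uses n_samples / (n_classes * n_c), the same heuristic scikit-learn applies for `class_weight="balanced"`. `smote` is a bare-bones illustration of SMOTE's interpolation idea, not a replacement for a tested implementation such as imbalanced-learn's `SMOTE`. The label strings and toy points are hypothetical.

```python
import random
from collections import Counter

def balanced_class_weights(labels):
    """w_c = n_samples / (n_classes * n_c); pass these to a weighted loss."""
    counts = Counter(labels)
    n, k = len(labels), len(counts)
    return {c: n / (k * cnt) for c, cnt in counts.items()}

def smote(minority, n_new, k=3, seed=0):
    """Minimal SMOTE sketch: each synthetic point lies on the segment between a
    minority sample and one of its k nearest minority neighbors.
    Run it on the training split only, after splitting (needs >= 2 samples)."""
    rng = random.Random(seed)

    def dist2(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))

    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        neighbors = sorted((p for p in minority if p is not base),
                           key=lambda p: dist2(base, p))[:k]
        other = rng.choice(neighbors)
        gap = rng.random()  # random position along the segment
        synthetic.append([b + gap * (o - b) for b, o in zip(base, other)])
    return synthetic

labels = ["TD"] * 80 + ["ASD"] * 20
print(balanced_class_weights(labels))  # {'TD': 0.625, 'ASD': 2.5}

minority = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
new_points = smote(minority, n_new=5)
```

Class weighting leaves the data untouched and is the lighter option. SMOTE changes the training distribution, so the validation and test sets must stay un-resampled for the metrics to remain honest.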

Expand Model Capacity Judiciously

- Gradually increase model complexity (more layers/units) while monitoring for overfitting with regularization (L2, dropout).

- For tabular medical data, consider specialized architectures like TabPFN, which demonstrated 91.5% accuracy in ASD diagnosis versus 87.3% for XGBoost [16].

Issue 2: Inconsistent Results Across Different ASD Data Modalities

Symptoms: Model performs well on one data type (e.g., eye-tracking) but poorly on others (e.g., EEG or behavioral questionnaires).

Diagnosis and Resolution:

Modality-Specific Preprocessing

- EEG Signals: Apply bandpass filtering, remove artifacts, and extract spectral features [9].

- Eye-Tracking: Calculate fixation duration, saccadic velocity, and scanpath patterns [22].

- Behavioral Scores: Normalize across different assessment scales (ADOS, SRS, Q-CHAT-10).

Customized Architecture Components

- Use CNNs for spatial patterns in eye-tracking heatmaps or EEG spectrograms.

- Employ RNNs/LSTMs for temporal dynamics in vocal analysis or movement sequences [26].

- Implement separate feature extraction branches for each modality before fusion.

Structured Hyperparameter Search Spaces

- Define separate search spaces for different modality handlers:
  - CNN branches: filter sizes, channel depths, pooling strategies
  - RNN branches: unit sizes, attention mechanisms
  - Fusion layer: integration method (concatenation, weighted average)
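Per-branch search spaces can be expressed as a nested dictionary, with conditional hyperparameters sampled only when their parent setting is active. In this sketch, the branch names, value lists, and the `attention_heads` dependency are hypothetical. Plain random sampling stands in for a real optimizer.

```python
import random

# Hypothetical per-branch search spaces (names and values are illustrative).
SEARCH_SPACE = {
    "cnn": {"filter_size": [3, 5, 7], "channels": [16, 32, 64], "pooling": ["max", "avg"]},
    "rnn": {"units": [32, 64, 128], "attention": [True, False]},
    "fusion": {"method": ["concatenation", "weighted_average"]},
}
ATTENTION_HEADS = [1, 2, 4]  # only meaningful when rnn.attention is True

def sample_config(space, rng):
    """Sample each branch independently, then add conditional hyperparameters."""
    config = {branch: {name: rng.choice(values) for name, values in params.items()}
              for branch, params in space.items()}
    if config["rnn"]["attention"]:
        config["rnn"]["attention_heads"] = rng.choice(ATTENTION_HEADS)
    return config

rng = random.Random(42)
config = sample_config(SEARCH_SPACE, rng)
print(config)
```

In Optuna, the same structure is usually flattened into prefixed parameter names inside the objective, e.g. `trial.suggest_categorical("cnn_filter_size", [3, 5, 7])`. Conditional parameters are then suggested inside an `if` block, so trials without attention never sample them.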

Issue 3: Training Instability with Deep Architectures on Medical Data

Symptoms: Loss diverges to NaN; wild fluctuations in metrics; failure to converge.

Diagnosis and Resolution:

Gradient Management

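- Implement gradient clipping to prevent exploding gradients. Either clip each gradient value to [-1, 1], or rescale the gradient when its global norm exceeds a threshold.

- Use batch normalization layers to maintain stable activations.

- Prefer adaptive optimizers (Adam, Nadam) over plain SGD; they are often less sensitive to the initial learning rate.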

Learning Rate Optimization

- Start with smaller learning rates (1e-4 to 1e-3) and use learning rate scheduling.

- Use a warm-up period in which the learning rate increases gradually over the first epochs.

- Reduce the learning rate when the validation loss plateaus (reduce-on-plateau scheduling).

Regularization Strategy

- Apply L2 regularization (weight decay) with values 1e-4 to 1e-3.

- Use dropout with rates 0.3-0.5 for dense layers, 0.1-0.2 for convolutional layers.

- Employ early stopping with patience of 10-20 epochs based on validation loss.
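The warm-up, reduce-on-plateau, and early-stopping rules above interact, so it helps to see them in one place. The hypothetical `TrainingController` below is framework-agnostic. It is called once per epoch with the validation loss and returns the learning rate for the next epoch plus a stop flag. Its defaults follow the ranges above but are assumptions to be tuned. Gradient clipping is not included; in PyTorch it is typically applied every step with `torch.nn.utils.clip_grad_value_` or `torch.nn.utils.clip_grad_norm_`.

```python
class TrainingController:
    """Hypothetical helper: linear warm-up + reduce-on-plateau + early stopping."""

    def __init__(self, base_lr=1e-3, warmup_epochs=5, plateau_patience=5,
                 factor=0.5, min_lr=1e-6, stop_patience=15, min_delta=1e-4):
        self.base_lr, self.warmup_epochs = base_lr, warmup_epochs
        self.plateau_patience, self.factor, self.min_lr = plateau_patience, factor, min_lr
        self.stop_patience, self.min_delta = stop_patience, min_delta
        # The first epoch trains at base_lr / warmup_epochs (start of the ramp).
        self.lr = base_lr / max(warmup_epochs, 1)
        self.best = float("inf")
        self.bad_epochs = 0     # epochs since last improvement (early stopping)
        self.plateau_count = 0  # epochs since last improvement or LR cut
        self.epoch = 0

    def step(self, val_loss):
        """Call once after each epoch; returns (lr for next epoch, stop flag)."""
        self.epoch += 1
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.bad_epochs = 0
            self.plateau_count = 0
        else:
            self.bad_epochs += 1
            self.plateau_count += 1
        if self.epoch < self.warmup_epochs:
            # Linear warm-up towards base_lr; plateaus are ignored meanwhile.
            self.lr = self.base_lr * (self.epoch + 1) / self.warmup_epochs
            self.plateau_count = 0
        elif self.plateau_count >= self.plateau_patience:
            # Reduce-on-plateau: cut the LR, then wait a full patience window again.
            self.lr = max(self.lr * self.factor, self.min_lr)
            self.plateau_count = 0
        return self.lr, self.bad_epochs >= self.stop_patience

ctl = TrainingController(base_lr=1e-3, warmup_epochs=5, plateau_patience=3, stop_patience=6)
lrs, stop = [], False
for loss in [1.0, 0.9, 0.8, 0.7, 0.6, 0.55] + [0.55] * 10:  # simulated val losses
    lr, stop = ctl.step(loss)
    lrs.append(lr)
    if stop:
        break
```

In a real loop, set the optimizer's learning rate to the returned value before the next epoch and break when the flag is `True`. Built-in equivalents include PyTorch's `torch.optim.lr_scheduler.ReduceLROnPlateau` and the Keras `ReduceLROnPlateau` and `EarlyStopping` callbacks.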

Quantitative Performance Data

Table: Impact of Hyperparameter Optimization on ASD Diagnostic Performance

| Model Architecture | Baseline (default hyperparameters unless noted) | Optimized Hyperparameters | Improvement (percentage points) | Key Tuned Parameters |
|---|---|---|---|---|
| Deep Neural Network | 89.2% Accuracy | 96.98% Accuracy [8] | +7.78 | Learning rate (0.001), Hidden units (256, 128), Dropout (0.3) |
| TabPFNMix | 87.3% Accuracy (XGBoost baseline) | 91.5% Accuracy [16] | +4.2 | Ensemble size, Feature normalization, Tree depth |
| EEG-Based CNN | 88.5% Accuracy | 95.0% Accuracy [27] | +6.5 | Filter sizes, Learning rate decay, Batch size |
| Eye-Tracking MLP | 76% Accuracy | 81% Accuracy [22] | +5.0 | Hidden layers, Activation functions, Regularization |

Table: Optimization Algorithms and Their Empirical Performance in ASD Research

| Optimization Method | Average Trials to Convergence | Best Accuracy Achieved | Computational Efficiency | Stability |
|---|---|---|---|---|
| Manual Search | 15-20 trials | 89.5% | Low | Variable |
| Grid Search | 50-100+ trials | 91.2% | Very Low | High |
| Random Search | 30-50 trials | 92.8% | Medium | Medium |
| Bayesian Optimization | 20-30 trials | 96.98% [8] | High | High |
| Hyperband | 15-25 trials | 95.5% | Very High | Medium-High |

Experimental Protocols

Protocol 1: Comprehensive Hyperparameter Optimization for ASD Detection DNN

Objective: Systematically optimize deep neural network hyperparameters for robust ASD detection across multiple data modalities.

Materials:

- ASD dataset with behavioral assessments, eye-tracking, or EEG data

- Access to computational resources (GPU recommended)

- Optimization framework (Optuna, Ray Tune, or Weights & Biases)

Procedure:

Data Preparation Phase

- Collect and preprocess multimodal ASD data (e.g., Q-CHAT-10 scores, social responsiveness scales, eye-tracking metrics) [8].

- Split data into training (70%), validation (15%), and test (15%) sets, preserving class distribution.

- Apply modality-specific normalization: Z-score for behavioral scores, min-max for gaze coordinates.
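Below is a minimal sketch of a stratified 70/15/15 split. Indices are shuffled within each class and divided in the stated proportions, so every subset keeps roughly the original ASD/non-ASD ratio. The function name and seed are illustrative. If a participant contributes several samples (e.g., multiple EEG sessions), split by participant first so that no individual appears in more than one subset.

```python
import random
from collections import defaultdict

def stratified_split(labels, fractions=(0.70, 0.15, 0.15), seed=0):
    """Return (train_idx, val_idx, test_idx) preserving class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for i, y in enumerate(labels):
        by_class[y].append(i)
    train, val, test = [], [], []
    for idx in by_class.values():
        rng.shuffle(idx)
        n_train = round(fractions[0] * len(idx))
        n_val = round(fractions[1] * len(idx))
        train.extend(idx[:n_train])
        val.extend(idx[n_train:n_train + n_val])
        test.extend(idx[n_train + n_val:])  # remainder goes to the test set
    return train, val, test

labels = [1] * 40 + [0] * 60  # 1 = ASD, 0 = typically developing (toy data)
train_idx, val_idx, test_idx = stratified_split(labels)
print(len(train_idx), len(val_idx), len(test_idx))
```

The same result can be obtained by calling scikit-learn's `train_test_split` twice with the `stratify` argument set to the labels.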

Search Space Definition

- Define a hierarchical search space:
  - Architecture: layers (2-5), units (64-512), activation (ReLU, LeakyReLU, ELU)
  - Optimization: learning rate (1e-5 to 1e-2), batch size (32-256), optimizer (Adam, Nadam, RMSprop)
  - Regularization: dropout (0.1-0.5), L2 weight (1e-5 to 1e-3), batch normalization

Optimization Loop

- Run Bayesian optimization with Optuna's TPESampler (Tree-structured Parzen Estimator) for 50 trials.

- For each trial, train model for 100 epochs with early stopping (patience=10).

- Evaluate on validation set using weighted F1-score (accounts for class imbalance).

- Track top 3 performing configurations for final ensemble.

Evaluation Phase

- Retrain top models on combined training+validation data.

- Evaluate final performance on held-out test set.

- Perform statistical significance testing (McNemar's test) on the test-set predictions of the optimized and baseline models.
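McNemar's test compares two classifiers on the same test cases using only the discordant pairs. Let b count the cases the optimized model gets right and the baseline gets wrong, and c the reverse. The sketch below returns both the continuity-corrected chi-square p-value and the exact binomial p-value; the exact version is usually preferred when b + c is small. Variable names are illustrative. For published analyses, `statsmodels.stats.contingency_tables.mcnemar` provides the same test.

```python
import math

def mcnemar(y_true, pred_a, pred_b):
    """McNemar's test on paired predictions of model A (optimized) and B (baseline).

    Returns (b, c, chi2_statistic, p_chi2, p_exact)."""
    b = sum(1 for t, pa, pb in zip(y_true, pred_a, pred_b) if pa == t and pb != t)
    c = sum(1 for t, pa, pb in zip(y_true, pred_a, pred_b) if pa != t and pb == t)
    n = b + c
    if n == 0:
        return b, c, 0.0, 1.0, 1.0  # the models never disagree on correctness
    # Chi-square with continuity correction, 1 degree of freedom.
    stat = max(abs(b - c) - 1, 0) ** 2 / n
    p_chi2 = math.erfc(math.sqrt(stat / 2))  # survival function of chi2(1)
    # Exact two-sided binomial test of b vs c under p = 0.5.
    p_exact = min(1.0, 2 * sum(math.comb(n, i) for i in range(min(b, c) + 1)) / 2 ** n)
    return b, c, stat, p_chi2, p_exact

y_true = [1] * 20
optimized = [1] * 10 + [0] * 2 + [1] * 8
baseline = [0] * 10 + [1] * 2 + [1] * 8
print(mcnemar(y_true, optimized, baseline))
```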

Expected Outcomes: DNN with optimized hyperparameters should achieve >95% accuracy, >94% AUC-ROC on ASD detection tasks, significantly outperforming default configurations [8].

Protocol 2: Multimodal Fusion Architecture Tuning

Objective: Optimize hyperparameters for integrating multiple ASD diagnostic modalities (eye-tracking, EEG, behavioral scores).

Materials:

- Multimodal ASD dataset with synchronized recordings

- Deep learning framework with flexible architecture support

- Hyperparameter optimization library with parallel execution

Procedure:

Modality-Specific Processing

- Eye-tracking: Extract fixation maps, saccade patterns, and visual attention metrics [26].

- EEG: Compute spectral power bands, functional connectivity, and event-related potentials [9].

- Behavioral: Encode ADOS sub-scores, SRS scales, and demographic factors.

Fusion Architecture Design

- Implement separate feature extractors for each modality.

- Define the fusion search space:
  - Fusion type (early, late, hierarchical)
  - Attention mechanisms for weighted integration
  - Cross-modality regularization strength

Joint Optimization Strategy

- Use multi-objective optimization balancing accuracy and model complexity.

- Apply gradient-based hyperparameter optimization where possible.

- Implement knowledge distillation from single-modality experts.

Expected Outcomes: Optimized multimodal fusion should outperform single-modality approaches by 5-15%, with particular improvements in specificity and early detection capability [26].

Workflow Visualization

Diagram 1: Hyperparameter Optimization Workflow for ASD Diagnosis

Diagram 2: Performance Impact of Hyperparameter Optimization

Research Reagent Solutions

Table: Essential Tools for Hyperparameter Optimization in ASD Research

| Tool/Category | Specific Solution | Function in ASD Research | Implementation Considerations |
|---|---|---|---|
| Optimization Frameworks | Optuna, Ray Tune, Weights & Biases | Automated hyperparameter search for DNNs diagnosing ASD | Choose based on parallelization needs and integration with deep learning frameworks |
| Data Modality Handlers | EEG: MNE-Python; Eye-tracking: PyGaze | Preprocess modality-specific ASD data (EEG signals, eye-tracking recordings) | Ensure compatibility with optimization frameworks for end-to-end pipelines |
| Model Architecture Templates | TensorFlow/PyTorch DNN templates | Quick implementation of common architectures for ASD detection | Customize for specific data types (EEG, eye-tracking, behavioral scores) |
| Performance Monitoring | TensorBoard, MLflow | Track optimization progress and model metrics across trials | Essential for diagnosing optimization problems in complex ASD models |
| Clinical Validation Tools | SHAP, LIME | Explainability for clinical translation of ASD diagnostic models | Integrate with optimization to ensure interpretable models [16] |

Advanced Hyperparameter Optimization Methods and Their Practical Application

Frequently Asked Questions (FAQs)

Algorithm Selection & Theory

Q1: What are the core advantages of using meta-heuristic optimizers like PSO and GA over traditional methods for hyperparameter tuning in a complex domain like ASD detection?

Meta-heuristic algorithms provide significant advantages for complex optimization problems commonly encountered in medical diagnostics research, such as tuning machine learning models for Autism Spectrum Disorder (ASD) detection.

- Escaping Local Optima: Unlike gradient-based local methods, meta-heuristics are less likely to become trapped in suboptimal local minima. They navigate complex, high-dimensional search spaces effectively, even when the relationship between hyperparameters and model performance is non-linear and noisy [28].

- Gradient-Free Optimization: PSO and GA do not require the objective function (e.g., model accuracy) to be differentiable. This matters for deep learning hyperparameters, because the gradient of validation performance with respect to a setting such as the number of layers either does not exist (the hyperparameter is discrete) or is expensive to compute [29].

- Superior Global Search: These algorithms are designed for broad exploration of the search space. For instance, PSO leverages a swarm of particles that share information to collectively converge on promising regions [30], while GA uses evolutionary principles like crossover and mutation to explore diverse solutions [31].

Q2: In the context of my ASD research, when should I choose Particle Swarm Optimization (PSO) over a Genetic Algorithm (GA), and vice versa?

The choice between PSO and GA depends on the specific nature of your optimization problem and computational constraints. The following table summarizes key differences and applications based on recent research.

Table 1: Comparative Guide: PSO vs. Genetic Algorithm

| Feature | Particle Swarm Optimization (PSO) | Genetic Algorithm (GA) |
|---|---|---|
| Core Inspiration | Social behavior of bird flocking/fish schooling [30] [29] | Biological evolution (natural selection) [28] [31] |
| Key Operators | Velocity and position updates guided by personal best (pbest) and global best (gbest) [30] | Selection, Crossover (recombination), and Mutation [31] |
| Parameter Tuning | Inertia weight (w), cognitive (c1) and social (c2) coefficients [30] | Population size, crossover rate, mutation rate, number of generations [31] |
| Typical Use-Case in ASD Research | Optimizing the hyperparameters of a single, complex model (e.g., a deep neural network) for ASD detection from clinical data [32]. | Feature selection combined with hyperparameter tuning, or when dealing with a mix of continuous and discrete hyperparameters [32] [33]. |
| Reported Performance | Can outperform other algorithms like the Gravitational Search Algorithm (GSA) in convergence rate and solution accuracy for certain problems [30]. | Effective for large, complex search spaces; may be slower than Grid Search but can find better solutions [31]. |
| Primary Strength | Faster convergence in many continuous problems; simpler implementation with fewer parameters [30]. | High flexibility; better at handling combinatorial problems and maintaining population diversity [28]. |

Q3: What is Pattern Search (PS) and how does it compare to population-based meta-heuristics?

Pattern Search (PS) is a direct search method that does not rely on a population of solutions like PSO or GA. It works by exploring points around a current center point according to a specific "pattern" (or mesh). If a better point is found, it becomes the new center; otherwise, the mesh size is reduced to refine the search [34]. It is a deterministic, local search algorithm, making it highly suitable for fine-tuning solutions in continuous parameter spaces after a global optimizer like PSO or GA has identified a promising region.
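The poll-then-shrink loop described above takes only a few lines. Below is a basic compass search, one simple variant of pattern search. It assumes the hyperparameters have been mapped to continuous coordinates, here log10 of the learning rate and the dropout rate. `toy_objective` is a hypothetical stand-in for a validation loss.

```python
def pattern_search(f, x0, step=0.5, min_step=1e-4, shrink=0.5, max_evals=10_000):
    """Minimize f by compass search: poll x +/- step along each coordinate,
    move to the first improving point, otherwise shrink the step (refine the mesh)."""
    x = list(x0)
    fx = f(x)
    evals = 1
    while step > min_step and evals < max_evals:
        improved = False
        for i in range(len(x)):
            for direction in (+1, -1):
                trial = list(x)
                trial[i] += direction * step
                f_trial = f(trial)
                evals += 1
                if f_trial < fx:  # better point becomes the new center
                    x, fx, improved = trial, f_trial, True
                    break
            if improved:
                break
        if not improved:
            step *= shrink  # no improving poll point: refine the mesh
    return x, fx

def toy_objective(x):
    # Hypothetical smooth stand-in for validation loss over (log10 lr, dropout).
    return (x[0] + 3) ** 2 + (x[1] - 0.3) ** 2

x_best, f_best = pattern_search(toy_objective, [-2.0, 0.5])
print(x_best, f_best)
```

Starting the search from the best PSO or GA solution, rather than an arbitrary point, is what makes it useful as a local refinement stage.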

Implementation & Troubleshooting

Q4: My PSO implementation is converging to a suboptimal solution too quickly. What are the primary parameters to adjust to prevent this premature convergence?

Premature convergence in PSO often indicates an imbalance between exploration (searching new areas) and exploitation (refining known good areas). Focus on adjusting these key parameters [30]:

- Inertia Weight (w): Increase w (e.g., from 0.8 to 0.9) to promote global exploration by giving particles more momentum to escape local optima. Values of 1 or more usually make the swarm diverge unless velocities are clamped.
- Social Coefficient (c2): Temporarily lower c2 relative to the cognitive coefficient (c1) to reduce the "herding" effect. This encourages particles to explore their own paths rather than rushing immediately toward gbest.
- Swarm Topology: Change from a global best (star) topology to a local best (ring) topology. This slows the propagation of information across the swarm, helping to maintain diversity for longer [30].
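The sketch below shows where each of these settings enters the PSO velocity update. The `topology` argument switches between the star (gbest) and ring (lbest) neighborhoods. It is a plain textbook PSO for minimization under stated assumptions (box bounds, position clamping, a hypothetical toy objective), not the implementation from the cited work.

```python
import random

def pso(f, bounds, n_particles=20, iters=100, w=0.7, c1=1.5, c2=1.5,
        topology="ring", seed=0):
    """Minimize f over a box. topology="star": follow the swarm-wide best;
    topology="ring": follow the best of self and two neighbors."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [f(p) for p in pos]
    for _ in range(iters):
        for i in range(n_particles):
            if topology == "ring":
                hood = [(i - 1) % n_particles, i, (i + 1) % n_particles]
            else:
                hood = range(n_particles)
            guide = pbest[min(hood, key=lambda j: pbest_val[j])]
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]                        # inertia
                             + c1 * r1 * (pbest[i][d] - pos[i][d])  # cognitive pull
                             + c2 * r2 * (guide[d] - pos[i][d]))    # social pull
                lo, hi = bounds[d]
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)  # clamp to box
            val = f(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
    best = min(range(n_particles), key=lambda j: pbest_val[j])
    return pbest[best], pbest_val[best]

def toy_loss(x):
    # Hypothetical stand-in for validation loss over (log10 lr, dropout).
    return (x[0] + 3) ** 2 + (x[1] - 0.3) ** 2

bounds = [(-5.0, -1.0), (0.1, 0.7)]
for topo in ("star", "ring"):
    print(topo, pso(toy_loss, bounds, topology=topo))
```

On a smooth toy function both topologies reach the optimum. The ring's slower information flow pays off mainly on multimodal objectives, where the star topology is more prone to collapsing onto one basin early.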

Q5: I am using a GA for hyperparameter optimization, but the performance improvement has plateaued over several generations. What strategies can I employ?

A performance plateau suggests a lack of diversity in the genetic population. To address this:

- Increase Mutation Rate: Strategically increase the mutation probability to introduce new genetic material and push the search into unexplored regions of the hyperparameter space [31].

- Review Selection Pressure: If using a strong selection method (e.g., only picking the very best), consider implementing methods like tournament selection or rank-based selection, which provide a chance for weaker (but potentially valuable) individuals to reproduce.

- Algorithm Hybridization: Consider hybridizing your GA. For example, integrate a local search technique such as Pattern Search to fine-tune the best individuals in each generation; this hybrid is known as a memetic algorithm.

Q6: A common critique of meta-heuristics is their computational expense. How can I make the optimization process more efficient?

Computational expense is a valid concern, but several strategies can improve efficiency:

- Use a Surrogate Model: Replace the expensive objective function (e.g., training a full deep learning model) with a cheaper-to-evaluate surrogate model (like a Gaussian Process) during the optimization process [32].

- Parallelization: Within each PSO iteration or GA generation, fitness evaluations are independent of one another and therefore embarrassingly parallel. Each particle (PSO) or individual (GA) can be evaluated on a separate CPU core, GPU, or machine, drastically reducing wall-clock time [29].

- Set Intelligent Limits: Define sensible bounds for your hyperparameter search space based on domain knowledge and early pilot experiments to avoid wasting time in irrelevant regions.

Experimental Protocols & Workflows

Detailed Methodology: Hyperparameter Optimization for an ASD Detection Model

This protocol outlines a methodology similar to one successfully used for respiratory disease diagnosis, adapted for an ASD detection task using a genetic algorithm for hyperparameter optimization and feature selection [32].

1. Problem Identification & Data Preparation:

- Objective: Develop a predictive model for ASD screening using clinical or image-based data (e.g., from the Kaggle Autistic Children Data Set or UCI ASD screening repositories) [33] [35].

- Data Preprocessing: Handle missing values, encode categorical variables, and normalize numerical features. For image data, apply augmentation techniques to increase dataset size and robustness [35].

- Data Splitting: Split the data into training, validation, and test sets. The validation set is used to guide the hyperparameter optimization process.

2. Hyperparameter Optimization with Genetic Algorithm:

- Define the Search Space: Specify the hyperparameters to be tuned and their value ranges (e.g., learning rate: [0.0001, 0.1], number of layers: [2, 5], neurons per layer: [32, 512]).

- Initialize Population: Generate an initial population of individuals, where each individual is a unique set of hyperparameters [31].

- Fitness Evaluation: For each individual in the population, train a model (e.g., a deep learning classifier) with its hyperparameters and evaluate its performance on the validation set. Use a metric like Accuracy, F1-Score, or AUC as the fitness score [32] [31].

- Selection, Crossover, and Mutation: Select parents based on fitness (e.g., by tournament selection), recombine their hyperparameters through crossover to produce offspring, and apply random mutation to maintain diversity.

- Iterate: Form a new generation from the offspring and repeat the process for a predefined number of generations or until convergence [31].
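Below is a compact sketch of this loop for mixed continuous and discrete hyperparameters. The search space mirrors the ranges above, with the learning rate searched on a log10 scale. Tournament selection and uniform crossover are one common choice of operators, not necessarily those used in [32]. `toy_fitness` is a hypothetical stand-in for training a classifier and returning its validation score. Elitism carries the best two individuals forward unchanged.

```python
import random

# Hypothetical search space mirroring the ranges above; log10(lr) in [-4, -1]
# corresponds to lr in [0.0001, 0.1]. Tuple = continuous bounds, list = choices.
SPACE = {
    "log10_lr": (-4.0, -1.0),
    "n_layers": [2, 3, 4, 5],
    "neurons": [32, 64, 128, 256, 512],
}

def random_individual(rng):
    return {name: rng.uniform(*spec) if isinstance(spec, tuple) else rng.choice(spec)
            for name, spec in SPACE.items()}

def tournament(pop, scores, rng, k=3):
    """Return the fittest of k randomly drawn individuals."""
    contenders = rng.sample(range(len(pop)), k)
    return pop[max(contenders, key=lambda i: scores[i])]

def crossover(a, b, rng):
    """Uniform crossover: each gene is copied from either parent with p = 0.5."""
    return {name: (a if rng.random() < 0.5 else b)[name] for name in SPACE}

def mutate(ind, rng, rate=0.2):
    """Gaussian jitter for continuous genes, random resampling for discrete ones."""
    child = dict(ind)
    for name, spec in SPACE.items():
        if rng.random() < rate:
            if isinstance(spec, tuple):
                lo, hi = spec
                child[name] = min(max(child[name] + rng.gauss(0.0, 0.3), lo), hi)
            else:
                child[name] = rng.choice(spec)
    return child

def genetic_search(fitness, pop_size=20, generations=15, elite=2, seed=0):
    rng = random.Random(seed)
    pop = [random_individual(rng) for _ in range(pop_size)]
    for _ in range(generations):
        scores = [fitness(ind) for ind in pop]  # one model training per individual
        ranked = sorted(range(pop_size), key=lambda i: scores[i], reverse=True)
        next_pop = [pop[i] for i in ranked[:elite]]  # elitism
        while len(next_pop) < pop_size:
            parents = tournament(pop, scores, rng), tournament(pop, scores, rng)
            next_pop.append(mutate(crossover(*parents, rng), rng))
        pop = next_pop
    return max(pop, key=fitness)

def toy_fitness(ind):
    # Hypothetical stand-in for "train the classifier, return validation F1".
    return (0.95 - 0.05 * (ind["log10_lr"] + 3) ** 2
            - 0.01 * abs(ind["n_layers"] - 3)
            - 0.0001 * abs(ind["neurons"] - 128))

best = genetic_search(toy_fitness)
print(best, toy_fitness(best))
```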

3. Feature Selection (Concurrently or Sequentially):

- To reduce dimensionality and improve model interpretability, employ a feature selection algorithm like Binary Grey Wolf Optimization (BGWO) [32]. This treats feature selection as a separate optimization problem, aiming to maximize accuracy while minimizing the number of features used.

4. Final Model Training & Evaluation:

- Train your final model (or an ensemble of top-performing models [32]) on the full training set using the best-found hyperparameters and feature subset.

- Perform a final, unbiased evaluation on the held-out test set to report the model's generalized performance.

5. Model Interpretation:

- Apply Explainable AI (XAI) techniques such as SHapley Additive exPlanations (SHAP) to understand the contribution of each feature to the model's predictions, which is critical for clinical acceptance [32].

Diagram 1: GA Hyperparameter Optimization Workflow for ASD Detection.

The Scientist's Toolkit: Key Research Reagents & Solutions

Table 2: Essential Components for a Meta-heuristic Optimization Pipeline in ASD Research

| Item / Solution | Function / Purpose | Example / Note |
|---|---|---|
| Clinical / Image Datasets | The foundational data for training and validating ASD detection models. | Kaggle Autistic Children Data Set (images) [35], UCI ASD Screening repositories (numerical) [35], Q-CHAT-10 [33]. |
| Meta-heuristic Library | Provides pre-implemented, tested optimization algorithms. | Mealpy (Python) offers a wide assortment of algorithms like PSO, GA, and others [36]. |
| Machine Learning Framework | Enables the building and training of predictive models. | Scikit-learn for traditional ML, TensorFlow or PyTorch for deep learning. |
| Performance Metrics | Quantifies the effectiveness of the tuned model. | Accuracy, Precision, Recall, F1-Score, AUC-ROC. Critical for clinical evaluation [32] [33]. |
| Explainable AI (XAI) Tool | Interprets model decisions, building trust and providing clinical insights. | SHAP (SHapley Additive exPlanations) to determine feature importance [32]. |
| Computational Resources | Hardware to handle the intensive process of repeated model training. | Multi-core CPUs or GPUs for parallel evaluation of populations [29]. |

Diagram 2: PSO Swarm Topologies Affecting Information Flow.

Troubleshooting Guide & FAQs

This section addresses common challenges researchers face when implementing the Multi-Strategy Parrot Optimizer (MSPO) for hyperparameter tuning in deep learning models, specifically within the context of Autism Spectrum Disorder (ASD) diagnosis research.

Frequently Asked Questions

Q1: The MSPO algorithm converges to a sub-optimal solution too quickly in my ASD diagnosis model. How can I improve global exploration?

- A: Premature convergence often indicates insufficient population diversity or a lack of effective exploration mechanisms. The MSPO integrates several strategies to combat this [37] [38]. First, verify the implementation of the Sobol sequence for population initialization, which provides a more uniform distribution of initial candidate solutions than purely random initialization. Second, ensure the non-linear decreasing inertia weight is correctly calibrated; this weight should be higher in early iterations to encourage exploration of the search space and gradually decrease to refine solutions later. Finally, the inclusion of a chaotic parameter can help the algorithm escape local optima by introducing structured yet unpredictable movement during the search process [38].
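The three ingredients named above can be sketched independently of the Parrot Optimizer's position-update equations, which are not reproduced here. This sketch makes three assumptions. First, a Halton sequence stands in for the Sobol sequence; a real Sobol generator is available as `scipy.stats.qmc.Sobol`. Second, the quadratic decay of the inertia weight is an illustrative choice. Third, the logistic map with r = 4 is one common chaotic map. None of these are the exact formulas of MSPO [37] [38].

```python
def van_der_corput(n, base):
    """n-th (n >= 1) element of the van der Corput low-discrepancy sequence."""
    value, denom = 0.0, 1.0
    while n:
        n, digit = divmod(n, base)
        denom *= base
        value += digit / denom
    return value

def halton_population(n_points, bounds, primes=(2, 3, 5, 7, 11, 13)):
    """Low-discrepancy initial population: one prime base per dimension."""
    return [[lo + van_der_corput(i, primes[d]) * (hi - lo)
             for d, (lo, hi) in enumerate(bounds)]
            for i in range(1, n_points + 1)]

def nonlinear_inertia(t, max_iter, w_max=0.9, w_min=0.4, power=2.0):
    """Hypothetical non-linear decreasing weight: w_max at t = 0, w_min at t = max_iter."""
    return w_min + (w_max - w_min) * (1 - t / max_iter) ** power

def logistic_map(x0=0.7, r=4.0):
    """Chaotic sequence in [0, 1] from x <- r * x * (1 - x).
    Avoid x0 in {0, 0.25, 0.5, 0.75, 1}, which lead to fixed points."""
    x = x0
    while True:
        x = r * x * (1 - x)
        yield x

# Example: 8 candidate (log10 lr, dropout) pairs spread evenly over the box.
population = halton_population(8, [(-5.0, -2.0), (0.1, 0.7)])
weights = [nonlinear_inertia(t, 100) for t in (0, 50, 100)]
chaos = logistic_map()
perturbations = [next(chaos) for _ in range(5)]
```

In an optimizer loop, the inertia weight would scale each candidate's momentum term, and the chaotic values would replace some uniform random draws (e.g., perturbation sizes).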

Q2: The convergence rate of my MSPO implementation is slower than expected. What parameters should I adjust?

- A: Slow convergence can be related to the balance between exploration and exploitation. Focus on the adaptive weight factors. In the related AWTPO algorithm, adaptive weights (ω1 and ω2) are designed based on iterative behavior to replace the original random exploitation strategy, thereby improving this balance and convergence efficiency [39]. Review the design of your non-linear decreasing inertia weight to ensure it does not force prolonged exploration at the cost of refinement. Additionally, consider integrating a local elite preservation mechanism, which helps retain the best solutions found and accelerates convergence towards promising regions [39].

Q3: How can I validate that my MSPO implementation is performing correctly before applying it to my core ASD research?

- A: Always begin with benchmark validation. The performance of MSPO and its variants is rigorously tested on standard benchmark suites like CEC 2022 [37] [38] and CEC2017 [40]. Run your algorithm on these established functions and compare your results against the published data. Furthermore, conduct an ablation study on your own code. This involves creating variants of MSPO, each with one key strategy (like chaotic maps or Sobol sequences) disabled. Comparing the performance of these variants against the full MSPO will validate the effectiveness of each component in your specific setup [37].

Q4: The optimized hyperparameters from MSPO do not generalize well to my unseen ASD validation dataset. What could be wrong?

- A: This is typically a sign of overfitting to the training set during the hyperparameter search. The fitness function used in the optimization loop must reflect the ultimate goal of generalization. Instead of using pure training accuracy, implement a cross-validation strategy within the fitness evaluation. The objective should be to maximize accuracy on a held-out validation set, not the training set. This ensures the hyperparameters selected by MSPO promote model robustness rather than just memorization.
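Below is a minimal sketch of a cross-validated fitness function. `train_and_score` is a placeholder for whatever trains the ASD model and returns a validation metric. Here a hypothetical one-dimensional k-nearest-neighbors classifier plays that role so the example runs. The held-out test set is deliberately never passed to the fitness function.

```python
import random
import statistics

def kfold_indices(n, k=5, seed=0):
    """Yield (train_idx, val_idx) for k shuffled, disjoint folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    folds = [idx[i::k] for i in range(k)]
    for i in range(k):
        train = [j for f, fold in enumerate(folds) if f != i for j in fold]
        yield train, folds[i]

def cv_fitness(hparams, X, y, train_and_score, k=5):
    """Fitness of one candidate = mean validation score over k folds."""
    scores = []
    for tr, va in kfold_indices(len(y), k):
        scores.append(train_and_score(hparams,
                                      [X[i] for i in tr], [y[i] for i in tr],
                                      [X[i] for i in va], [y[i] for i in va]))
    return statistics.fmean(scores)

def knn_score(hparams, X_tr, y_tr, X_va, y_va):
    """Hypothetical stand-in model: 1-D k-nearest neighbors, returns accuracy."""
    k = hparams["k_neighbors"]
    correct = 0
    for x, label in zip(X_va, y_va):
        nearest = sorted(range(len(X_tr)), key=lambda i: abs(X_tr[i] - x))[:k]
        predicted = 2 * sum(y_tr[i] for i in nearest) > k  # majority vote
        correct += predicted == bool(label)
    return correct / len(y_va)

X = [i / 10 for i in range(40)]
y = [int(x > 2.0) for x in X]
print(cv_fitness({"k_neighbors": 3}, X, y, knn_score))
```

For imbalanced ASD data, the folds should also be stratified (and grouped by participant where relevant). The generalization estimate reported at the end must still come from the untouched test set.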

Quantitative Performance Data

The following tables summarize key quantitative data from experiments with MSPO and related multi-strategy Parrot Optimizer variants, providing benchmarks for expected performance.

Table 1: Summary of Multi-Strategy PO Variants and Their Core Enhancements

| Algorithm Acronym | Full Name | Core Improvement Strategies | Primary Application Context |
|---|---|---|---|
| MSPO [37] [38] | Multi-Strategy Parrot Optimizer | Sobol sequence, Non-linear decreasing inertia weight, Chaotic parameter [37] [38]. | Hyperparameter optimization for breast cancer image classification [37] [38]. |
| CGBPO [40] | Chaotic–Gaussian–Barycenter Parrot Optimization | Chaotic logistic mapping, Gaussian mutation, Barycenter opposition-based learning [40]. | General benchmark testing (CEC2017, CEC2022) and engineering problems [40]. |
| AWTPO [39] | A multi-strategy enhanced chaotic parrot optimization algorithm | 2D Arnold chaotic map, Adaptive weight factors, Cauchy–Gaussian hybrid mutation [39]. | Engineering design optimization (e.g., gear reducers) [39]. |
| CPO [41] | Chaotic Parrot Optimizer | Integration of ten different chaotic maps into the Parrot Optimizer [41]. | Engineering problem solving and medical image segmentation [41]. |

Table 2: Performance Metrics on Public Benchmarks

| Algorithm | Benchmark Suite | Key Performance Outcome | Compared Against |
|---|---|---|---|
| MSPO [37] | CEC 2022 | Surpassed leading algorithms in optimization precision and convergence rate [37]. | Other swarm intelligence algorithms [37]. |
| CGBPO [40] | CEC2017 & CEC2022 | Outperformed 7 other algorithms in convergence speed, solution accuracy, and stability [40]. | PO, other metaheuristics [40]. |
| CPO [41] | 23 classic functions & CEC 2019/2020 | Outperformed the original PO and 6 other recent metaheuristics in convergence speed and solution quality [41]. | GWO, WOA, SCA, etc. [41]. |

Experimental Protocols

Below is a detailed methodology for implementing and testing the MSPO algorithm for hyperparameter optimization, framed within a deep learning pipeline for ASD diagnosis.

Protocol 1: Implementing the MSPO for Hyperparameter Tuning

Problem Formulation:

- Search Space Definition: Define the hyperparameter search space for your deep learning model (e.g., learning rate ∈ [1e-5, 1e-2], dropout rate ∈ [0.1, 0.7], number of layers ∈ [2, 10]).

- Fitness Function: The fitness of a parrot (candidate solution) is the performance of a model trained with its hyperparameters. Use a robust metric like 5-fold cross-validation accuracy on the training/validation data to prevent overfitting.

Algorithm Initialization:

- Population: Initialize a population of N candidate solutions (parrots) using the Sobol sequence to ensure low discrepancy and good coverage of the search space [37] [38].

- Parameters: Set the algorithm parameters, including the maximum number of iterations (Max_iter) and the parameters of the non-linear decreasing inertia weight.

Main Optimization Loop: For each iteration until Max_iter is reached:

- Fitness Evaluation: Train and evaluate the deep learning model for each candidate's hyperparameters to compute its fitness.

- Behavior Selection & Update: For each parrot, stochastically select one of the four core behaviors (foraging, staying, communicating, fear of strangers) and update its position using the corresponding equations [40].

- Strategy Application:
  - Elite Preservation: Identify and retain the current global best solution.

Termination and Output:

- Once the stopping criterion is met (e.g., Max_iter is reached), output the global best solution, which represents the optimized set of hyperparameters for the ASD diagnosis model.

The following workflow diagram illustrates this protocol and its integration into a deep learning pipeline.

The Scientist's Toolkit: Research Reagent Solutions

This table details key computational "reagents" and their functions for implementing the MSPO in a research environment.

Table 3: Essential Components for MSPO Experimentation

| Item Name | Function / Purpose | Application Note |
|---|---|---|
| Sobol Sequence | A quasi-random number generator for population initialization. Produces a more uniform distribution than pseudo-random sequences, improving initial search space coverage [37] [38]. | Use for initializing the population of candidate hyperparameter sets to ensure a thorough initial exploration. |
| Non-linear Decreasing Inertia Weight | A parameter that dynamically balances exploration and exploitation. Starts with a high value to promote global search and decreases non-linearly to focus on local refinement [37] [38]. | Critical for controlling convergence behavior. Must be tuned to the specific problem. |
| Chaotic Map (e.g., Logistic, Arnold) | A deterministic system that produces ergodic, non-periodic behavior. Used to introduce structured stochasticity, helping the algorithm escape local optima [39] [38] [41]. | Can be applied to perturb particle positions or modulate parameters during the search process. |
| CEC Benchmark Suites | A collection of standardized test functions (e.g., CEC2017, CEC2022) for rigorously evaluating and comparing optimization algorithm performance [40] [37]. | Essential for validating the correctness and performance of any new MSPO implementation before applying it to domain-specific problems. |
| Opposition-Based Learning | A strategy that considers the opposite of current solutions. Generating solutions based on the population's barycenter can guide the search toward more promising regions of the space [40]. | Used in variants like CGBPO to enhance learning efficiency and solution quality. |
| Adaptive Mutation (e.g., Cauchy-Gaussian) | A hybrid mutation strategy that uses the long-tailed Cauchy distribution for large jumps and the Gaussian distribution for fine-tuning. Helps maintain population diversity and avoid premature convergence [39]. | Applied to the best solutions to create new, perturbed candidates, balancing exploration around promising areas. |
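The adaptive mutation row can be illustrated with a short sketch. The blend `lam * cauchy + (1 - lam) * gauss`, with `lam` decreasing linearly over iterations, is an assumption made for illustration, not the AWTPO formula [39]. Standard Cauchy draws are generated by inverse-CDF sampling, `tan(pi * (u - 0.5))`. The mutant replaces the current best only if it improves a hypothetical toy objective, which preserves the elite.

```python
import math
import random

def cauchy_gaussian_mutation(x, t, max_iter, bounds, scale=0.1, rng=random):
    """Hypothetical hybrid mutation: heavy-tailed Cauchy jumps dominate early
    (exploration), Gaussian steps dominate late (fine-tuning)."""
    lam = 1 - t / max_iter  # weight on the Cauchy component (assumed linear schedule)
    mutant = []
    for xi, (lo, hi) in zip(x, bounds):
        width = scale * (hi - lo)
        cauchy = math.tan(math.pi * (rng.random() - 0.5))  # standard Cauchy draw
        gauss = rng.gauss(0.0, 1.0)
        step = width * (lam * cauchy + (1 - lam) * gauss)
        mutant.append(min(max(xi + step, lo), hi))  # clamp to the search box
    return mutant

def toy_loss(p):
    # Hypothetical stand-in for validation loss over (log10 lr, dropout).
    return (p[0] + 3) ** 2 + (p[1] - 0.3) ** 2

rng = random.Random(1)
bounds = [(-5.0, -1.0), (0.1, 0.7)]
start = [-3.2, 0.35]
best = list(start)
for t in range(100):
    candidate = cauchy_gaussian_mutation(best, t, 100, bounds, rng=rng)
    if toy_loss(candidate) < toy_loss(best):  # greedy replacement keeps the elite
        best = candidate
print(best, toy_loss(best))
```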

AutoML Troubleshooting Guide

Data Quality Issues

Problem: Model performance is poor due to data quality problems

- Symptoms: Low accuracy metrics, inconsistent predictions across similar inputs, training errors

- Solution: Implement comprehensive data validation checks before AutoML processing

- Protocol:

- Quantify missing values for each feature and each participant

- Validate data distributions across training and validation splits

- Identify outliers using the interquartile range (IQR) rule, and remove them only after confirming they are errors rather than genuine extreme scores

- Ensure consistent data formats and scales across all features
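Several of these checks are easy to script. The sketch below uses only the Python standard library. The function names are illustrative, and the 1.5 multiplier in Tukey's fences is the conventional default. The standardized mean difference is offered as one rough way to compare a feature's distribution across splits, not a formal test. Flagged outliers are returned for review rather than deleted automatically.

```python
import statistics

def missing_fraction(rows, col):
    """Share of rows where `col` is missing (None)."""
    return sum(r[col] is None for r in rows) / len(rows)

def iqr_outliers(values, k=1.5):
    """Indices outside [Q1 - k*IQR, Q3 + k*IQR] (Tukey's fences), for review."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lo, hi = q1 - k * iqr, q3 + k * iqr
    return [i for i, v in enumerate(values) if v < lo or v > hi]

def standardized_mean_difference(train_vals, val_vals):
    """|mean difference| / pooled SD: a rough check of split comparability."""
    pooled = ((statistics.variance(train_vals) + statistics.variance(val_vals)) / 2) ** 0.5
    return abs(statistics.fmean(train_vals) - statistics.fmean(val_vals)) / pooled

scores = [10, 12, 11, 13, 12, 11, 95]  # toy questionnaire totals
print(iqr_outliers(scores))  # [6]
```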

Problem: Data leakage between training and validation sets

- Symptoms: Unusually high validation performance that drops significantly in production

- Solution: Implement strict group-based (e.g., by patient) or temporal splitting strategies

- Protocol:

- Use grouped cross-validation for patient data in medical studies

- Ensure time-series data uses forward-chaining validation

- Verify no patient appears in both training and test sets for ASD diagnosis research
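Patient-level splitting can be sketched as follows. All sample indices belonging to one patient are assigned to the same fold, so no child contributes data to both training and validation. The patient IDs are hypothetical. scikit-learn offers `GroupKFold` and `StratifiedGroupKFold` for the same purpose, and `TimeSeriesSplit` for forward-chaining validation.

```python
import random
from collections import defaultdict

def group_kfold(patient_ids, k=5, seed=0):
    """Yield (train_idx, val_idx) with every patient's samples in a single fold."""
    groups = defaultdict(list)
    for i, pid in enumerate(patient_ids):
        groups[pid].append(i)
    pids = sorted(groups)
    random.Random(seed).shuffle(pids)
    for f in range(k):
        val_pids = set(pids[f::k])  # patients assigned to this validation fold
        train = [i for p in pids if p not in val_pids for i in groups[p]]
        val = [i for p in pids if p in val_pids for i in groups[p]]
        yield train, val

# Several recordings per child: indices 0-1 are p1, 3-5 are p3, etc.
patient_ids = ["p1", "p1", "p2", "p3", "p3", "p3", "p4", "p5"]
for train_idx, val_idx in group_kfold(patient_ids, k=5):
    print(sorted({patient_ids[i] for i in val_idx}))
```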

Model Performance Problems

Problem: AutoML selects overly complex models that are difficult to interpret

- Symptoms: Black box models with poor explainability, resistance from clinical stakeholders

- Solution: Configure AutoML for interpretability constraints and use explainable AI techniques

- Protocol:

- Enable interpretability flags in AutoML configuration

- Set complexity penalties in model selection criteria

- Generate SHAP or LIME explanations for clinical validation

- Prioritize models with inherent interpretability (linear models, decision trees) for initial deployment

Problem: Hyperparameter tuning consumes excessive computational resources

- Symptoms: Long training times, escalating cloud computing costs, delayed experiments

- Solution: Implement efficient hyperparameter optimization strategies

- Protocol:

- Use Bayesian optimization instead of grid search for large parameter spaces [42]

- Apply early stopping mechanisms like Hyperband to terminate underperforming jobs [42]

- Limit simultaneous parallel jobs based on available resources

- Use random search for highly parallelizable exploration of hyperparameter space [42]

Technical Implementation Issues

Problem: Reproducibility challenges in AutoML experiments

- Symptoms: Inconsistent results between identical runs, difficulty replicating published results

- Solution: Implement comprehensive reproducibility protocols

- Protocol:

- Set random seeds for all stochastic processes [42]

- Version control all data, code, and configuration files

- Use containerization for consistent runtime environments

- Document all AutoML framework version dependencies
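A minimal seeding helper is sketched below. It always seeds Python's `random` module and seeds NumPy and PyTorch only if they are installed. The cuDNN flags trade some speed for determinism. Bit-for-bit reproducibility on GPUs can still require further settings, so treat this as a starting point. Optimizers such as Optuna's `TPESampler` also accept their own `seed` argument, which needs to be set separately.

```python
import os
import random

def set_seed(seed=42):
    """Seed the RNGs a typical pipeline uses; optional libraries are skipped if absent."""
    random.seed(seed)
    # Only affects Python subprocesses started after this call.
    os.environ["PYTHONHASHSEED"] = str(seed)
    try:
        import numpy as np
        np.random.seed(seed)
    except ImportError:
        pass
    try:
        import torch
        torch.manual_seed(seed)  # seeds CPU and all CUDA devices
        torch.backends.cudnn.deterministic = True
        torch.backends.cudnn.benchmark = False
    except ImportError:
        pass

set_seed(123)
first = random.random()
set_seed(123)
print(random.random() == first)  # True
```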

Frequently Asked Questions

General AutoML Questions

Q: What is AutoML and how does it differ from traditional machine learning? A: Automated Machine Learning (AutoML) automates the end-to-end process of building machine learning models, including data preprocessing, feature engineering, model selection, and hyperparameter tuning [43]. Unlike traditional ML, which requires each step to be carried out manually, AutoML systematically searches through combinations of algorithms and parameters to identify the best-performing configuration automatically [44].

Q: When should researchers use AutoML versus manual machine learning approaches? A: Use AutoML for rapid prototyping, when working with standard data types, or when team expertise in ML is limited. Prefer manual approaches for novel architectures, highly specialized domains requiring custom solutions, or when maximal control and interpretability are required [43].

AutoML for ASD Research Questions

Q: How can AutoML be applied to Autism Spectrum Disorder (ASD) diagnosis research? A: AutoML can automate the development of ASD diagnostic models from a range of data sources, including behavioral assessments [45], brain imaging data [45], and clinical records. Research has shown that AutoML techniques can optimize models built on AQ-10 assessment data using reduced feature sets while maintaining diagnostic accuracy [45].

Q: What are the specific challenges of using AutoML in medical diagnostics like ASD? A: Key challenges include ensuring model interpretability for clinical adoption, managing small or imbalanced datasets common in medical research, addressing privacy concerns with patient data, validating models against clinical gold standards, and meeting regulatory requirements for medical devices [43] [45].

Technical Implementation Questions

Q: What hyperparameter tuning strategy should I use for my ASD research project? A: For large jobs, use Hyperband with early stopping mechanisms. For smaller training jobs, use Bayesian optimization or random search [42]. Bayesian optimization uses information from prior runs to improve subsequent configurations, while random search enables massive parallelism [42].

Q: How many hyperparameters should I optimize simultaneously in AutoML? A: Limit your search space to the most impactful hyperparameters. Some tuning services allow up to 30 hyperparameters in a single job, but focusing on a smaller number of critical parameters reduces computation time and allows faster convergence to optimal configurations [42].

Experimental Protocols & Methodologies

ASD Diagnosis Using Machine Learning

Protocol Title: Automated ASD Diagnosis Using Behavioral Assessment Data

Background: Autism Spectrum Disorder affects approximately 2.20% of children according to DSM-5 criteria, with early diagnosis being crucial for intervention effectiveness [45].

Materials:

- AQ-10 (Autism Quotient) assessment data

- Demographic and clinical characteristic data

- Machine learning platform with AutoML capabilities

Methodology:

- Data Collection: Gather dataset containing AQ-10 assessment scores and individual characteristics (n=701 samples after preprocessing) [45]

- Data Preprocessing: Handle missing values, encode categorical variables, normalize numerical features

- Feature Selection: Apply Recursive Feature Elimination (RFE) to identify the most predictive features

- Model Training: Train multiple classifiers (ANN, SVM, Random Forest) using 75% of data [45]

- Validation: Test models on remaining 25% of data using appropriate metrics (accuracy, F1-score, ROC curves)

- Deployment: Implement best-performing model for diagnostic assistance

Expected Outcomes: Research has demonstrated accuracy up to 98% with reduced feature sets in similar studies [45].
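The methodology steps above can be sketched as a scikit-learn workflow. This minimal sketch uses synthetic data from `make_classification`, not AQ-10 responses; only the sample size of 701 mirrors the protocol. The feature counts, the RFE estimator (logistic regression), and every model setting are assumptions. Real data with categorical columns would also need an encoder such as `OneHotEncoder` inside a `ColumnTransformer`.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.feature_selection import RFE
from sklearn.impute import SimpleImputer
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import accuracy_score, f1_score, roc_auc_score
from sklearn.model_selection import train_test_split
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Synthetic placeholder: 701 rows, 14 hypothetical columns standing in for the ten
# AQ-10 items plus a few demographic features (not real data).
X, y = make_classification(
    n_samples=701, n_features=14, n_informative=8, weights=[0.7, 0.3], random_state=42
)

# 75% training / 25% held-out test, stratified to preserve the class ratio.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, stratify=y, random_state=42
)

classifiers = {
    "ANN": MLPClassifier(hidden_layer_sizes=(32,), max_iter=2000, random_state=42),
    "SVM": SVC(kernel="rbf", probability=True, random_state=42),
    "RandomForest": RandomForestClassifier(n_estimators=300, random_state=42),
}

results = {}
for name, clf in classifiers.items():
    pipe = Pipeline([
        ("impute", SimpleImputer(strategy="median")),  # handle missing values
        ("scale", StandardScaler()),                   # normalize numerical features
        ("rfe", RFE(LogisticRegression(max_iter=1000), n_features_to_select=8)),
        ("clf", clf),
    ])
    pipe.fit(X_train, y_train)  # RFE is fit on training data only, inside each pipeline
    pred = pipe.predict(X_test)
    proba = pipe.predict_proba(X_test)[:, 1]
    results[name] = {
        "accuracy": accuracy_score(y_test, pred),
        "f1": f1_score(y_test, pred),
        "roc_auc": roc_auc_score(y_test, proba),
    }

best = max(results, key=lambda k: results[k]["roc_auc"])
print(best, results[best])
```

Placing RFE inside the pipeline means feature selection never sees the held-out 25%. Selecting features on the full dataset before splitting would leak test information into training.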

Research Reagent Solutions

Table: Essential Components for AutoML in ASD Research

| Research Reagent | Function | Implementation Example |
|---|---|---|
| Data Preprocessing Tools | Clean and prepare raw data for modeling | Automated handling of missing values, outlier detection, data normalization [43] [44] |
| Feature Engineering Algorithms | Transform raw data into informative features | Automated feature creation, selection of most predictive features from behavioral assessments [43] [45] |
| Model Selection Framework | Identify optimal algorithm for specific task | Simultaneous testing of multiple algorithms (SVM, Random Forest, ANN) [43] [45] |
| Hyperparameter Optimization | Tune model parameters for maximum performance | Bayesian optimization, random search, or Hyperband strategies [43] [42] |
| Model Validation Metrics | Evaluate model performance and generalizability | Cross-validation, confusion matrices, F1-scores, ROC analysis [43] [45] |
| Explainability Tools | Interpret model decisions for clinical validation | SHAP, LIME, feature importance rankings for clinician trust [43] |


Model Evaluation Framework

Table: Performance Metrics for ASD Diagnosis Models

| Metric | Formula | Interpretation | ASD Research Application |
|---|---|---|---|
| Accuracy | (TP+TN)/(TP+TN+FP+FN) | Overall correctness | General diagnostic performance [45] |
| Sensitivity (Recall) | TP/(TP+FN) | Ability to detect true cases | Crucial for minimizing missed ASD diagnoses [45] |
| Specificity | TN/(TN+FP) | Ability to exclude non-cases | Important for avoiding false alarms [45] |
| F1-Score | 2×(Precision×Recall)/(Precision+Recall) | Balance of precision and recall | Single summary measure that remains informative when classes are imbalanced [45] |
| AUC-ROC | Area under ROC curve | Overall discriminatory power | Model performance across thresholds [45] |
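The formulas in the table translate directly into code. This minimal sketch uses plain Python and omits guards against division by zero. AUC-ROC is computed here through its rank interpretation: the probability that a randomly chosen positive case scores higher than a randomly chosen negative one, with ties counting half.

```python
def diagnostic_metrics(tp, tn, fp, fn):
    """Compute the table's metrics from confusion-matrix counts (no zero-division guards)."""
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)  # also called recall
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": sensitivity,
        "specificity": tn / (tn + fp),
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
    }


def auc_from_scores(labels, scores):
    """AUC-ROC as the probability that a random positive outscores a random negative."""
    pos = [s for s, lab in zip(scores, labels) if lab == 1]
    neg = [s for s, lab in zip(scores, labels) if lab == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


print(diagnostic_metrics(tp=40, tn=45, fp=5, fn=10))
print(auc_from_scores([1, 1, 0, 0], [0.9, 0.4, 0.6, 0.1]))  # 0.75
```

With 40 true positives, 45 true negatives, 5 false positives, and 10 false negatives, the results are an accuracy of 0.85, a sensitivity of 0.8, and a specificity of 0.9. Because sensitivity is lower than specificity, this hypothetical model misses more ASD cases than it over-flags, which is the failure mode the sensitivity row warns about.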

Hyperparameter Tuning for Diverse Data Types in Deep Learning ASD Diagnosis Research

Frequently Asked Questions & Troubleshooting Guides

This technical support resource addresses common challenges in hyperparameter tuning for deep learning models in Autism Spectrum Disorder (ASD) research, focusing on health registries, EEG, and behavioral metrics data.

How should I preprocess EEG signals for optimal ASD classification, and which metrics confirm signal quality?

Problem: Researchers report inconsistent ASD classification results despite using standard EEG preprocessing pipelines. The relationship between preprocessing choices and downstream model performance is unclear.

Solution: Implement and quantitatively compare multiple preprocessing techniques using standardized evaluation metrics. Select the method that best balances denoising effectiveness with feature preservation for your specific research objectives [46].

Experimental Protocol:

- Data Acquisition: Collect resting-state EEG recordings using a system with at least 16 channels. Ensure the participant groups include individuals with confirmed ASD diagnoses and neurotypical controls [46].

- Preprocessing Application: Process raw EEG data through three parallel pipelines:

- Butterworth Bandpass Filter: Apply a [0.5, 40] Hz bandpass filter to retain key neural oscillation bands (delta, theta, alpha, beta) [46].

- Discrete Wavelet Transform (DWT): Decompose signals into frequency sub-bands to separate neural activity from noise [46].

- Independent Component Analysis (ICA): Identify and remove artifactual components (e.g., eye blinks, muscle activity) [46].

- Metric Calculation: Compute the following metrics for each preprocessing output compared to a clean reference signal [46]:

| Metric | Purpose | Interpretation |
|---|---|---|
| Signal-to-Noise Ratio (SNR) | Measures signal clarity against background noise. | Higher values (e.g., ICA: 86.44 for normal, 78.69 for ASD) indicate superior denoising [46]. |
| Mean Absolute Error (MAE) | Quantifies average magnitude of errors. | Lower values (e.g., DWT: 4785.08 for ASD) indicate less signal distortion [46]. |
| Mean Squared Error (MSE) | Quantifies average squared errors, emphasizing large errors. | Lower values (e.g., DWT: 309,690 for ASD) indicate robust feature preservation [46]. |
| Spectral Entropy (SE) | Assesses complexity/unpredictability of the power spectrum. | Reflects cognitive and neural state variations [46]. |
| Hjorth Parameters | Describe neural dynamics in the time domain. | Activity (signal power), Mobility (frequency variability), Complexity (irregularity). Neurotypical EEGs often show higher activity and complexity [46]. |
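The Butterworth pipeline and the three error-based metrics can be sketched with SciPy and NumPy. The SNR here follows one common definition: reference power over residual power, in dB. The cited study's exact formula is not given in this section, so the values will not be directly comparable with the table. The 4th-order filter, the sampling rate, and the synthetic test signal are assumptions. The DWT and ICA pipelines are omitted.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt


def bandpass(eeg, fs, low=0.5, high=40.0, order=4):
    """Zero-phase Butterworth bandpass; eeg is (n_channels, n_samples) or (n_samples,)."""
    sos = butter(order, [low, high], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, eeg, axis=-1)


def snr_db(reference, estimate):
    """SNR in dB, treating the deviation from the clean reference as noise."""
    noise = reference - estimate
    return 10.0 * np.log10(np.sum(reference ** 2) / np.sum(noise ** 2))


def mae(reference, estimate):
    return float(np.mean(np.abs(reference - estimate)))


def mse(reference, estimate):
    return float(np.mean((reference - estimate) ** 2))


# Synthetic check: a 10 Hz alpha-band rhythm contaminated by 60 Hz line noise and slow drift.
fs = 256
t = np.arange(0, 10, 1 / fs)
clean = np.sin(2 * np.pi * 10 * t)
noisy = clean + 0.5 * np.sin(2 * np.pi * 60 * t) + 0.5 * np.sin(2 * np.pi * 0.1 * t)
filtered = bandpass(noisy, fs)

for name, est in [("raw", noisy), ("bandpass", filtered)]:
    print(name, round(snr_db(clean, est), 2), round(mae(clean, est), 4), round(mse(clean, est), 4))
```

Second-order sections (`output="sos"`) are used instead of transfer-function coefficients because they stay numerically stable at a low cutoff such as 0.5 Hz. `sosfiltfilt` runs the filter forward and backward, so it adds no phase shift to the oscillations.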

Troubleshooting Guide:

- Poor Final Classification Accuracy: If your model fails to classify accurately, check that the preprocessing method suits your goal. In the cited comparison, ICA gave the best signal clarity (highest SNR), while DWT offered a better balance for feature preservation (lowest MAE and MSE) [46].

- Model Overfitting on EEG Data: Check Hjorth parameters. Significantly different complexity values between groups can serve as robust features, potentially reducing overfitting compared to raw spectral data [46].
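Hjorth parameters are cheap to compute per channel and per epoch, which makes them practical compact features. This minimal NumPy sketch uses `np.diff` as the derivative. Mobility is therefore expressed per sample; multiply it by the sampling rate to express it per second. The test signals are synthetic.

```python
import numpy as np


def hjorth_parameters(x):
    """Return Hjorth (activity, mobility, complexity) for a 1-D signal.

    Mobility is per sample because np.diff approximates the derivative
    without dividing by the sampling interval; multiply by fs for per-second units.
    """
    x = np.asarray(x, dtype=float)
    dx = np.diff(x)
    ddx = np.diff(dx)
    activity = np.var(x)                                     # signal power
    mobility = np.sqrt(np.var(dx) / activity)                # mean frequency proxy
    complexity = np.sqrt(np.var(ddx) / np.var(dx)) / mobility  # deviation from a pure sine
    return activity, mobility, complexity


fs = 256
t = np.arange(0, 4, 1 / fs)
sine = np.sin(2 * np.pi * 10 * t)
noise = np.random.default_rng(0).normal(size=t.size)

print(hjorth_parameters(sine))   # complexity close to 1 for a pure sinusoid
print(hjorth_parameters(noise))  # complexity near sqrt(3/2), about 1.22, for white noise
```

Complexity equals 1 for a pure sinusoid and grows as the waveform becomes more irregular. This is why it can separate groups with only three numbers per channel, rather than a full spectrum that a model could overfit.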

How should I tune hyperparameters when a model combines heterogeneous data types?

Problem: Tuning models on combined data types (e.g., categorical health registry data and continuous behavioral scores) leads to unstable training and failed convergence.

Solution: Adopt a scientific, incremental tuning strategy that classifies hyperparameters based on their role and systematically investigates their interactions [47].

Experimental Protocol:

- Categorize Hyperparameters: For your experimental goal, define:

- Scientific Hyperparameters: The core factors you are trying to study (e.g., number of model layers, type of data fusion method) [47].

- Nuisance Hyperparameters: Those that must be optimized to ensure a fair comparison of scientific parameters (e.g., learning rate, optimizer parameters). These often interact strongly with other changes [47].

- Fixed Hyperparameters: Those held constant for the experiment due to resource constraints or prior evidence (e.g., activation function, batch size), acknowledging this limits the generality of your conclusions [47].

- Design a Tuning Round:

- Goal: "Determine the optimal number of dense layers in the final classifier when integrating EEG features and behavioral metrics."

- Scientific: num_dense_layers = [1, 2, 3]

- Nuisance: learning_rate (log-uniform from 1e-5 to 1e-2), dropout_rate (uniform from 0.1 to 0.5)

- Fixed: optimizer="Adam", batch_size=32

- Execute and Analyze: Use a Bayesian optimization tool like Optuna to efficiently search the nuisance space for each value of the scientific hyperparameter. Analyze the validation performance of the best model for each layer count [48].
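The tuning round above can be sketched in plain Python. To run without extra dependencies, this sketch uses random search in place of Optuna's Bayesian sampler. With Optuna, the inner loop would become `study = optuna.create_study(direction="maximize")` and `study.optimize(objective, n_trials=50)`, with `trial.suggest_float("learning_rate", 1e-5, 1e-2, log=True)` and `trial.suggest_float("dropout_rate", 0.1, 0.5)` inside the objective. `validation_score` is a hypothetical toy surface standing in for training the fusion model.

```python
import math
import random

SCIENTIFIC = {"num_dense_layers": [1, 2, 3]}
FIXED = {"optimizer": "Adam", "batch_size": 32}


def sample_nuisance(rng):
    """Draw nuisance hyperparameters from the ranges in the tuning-round design."""
    return {
        "learning_rate": 10 ** rng.uniform(-5, -2),  # log-uniform on [1e-5, 1e-2]
        "dropout_rate": rng.uniform(0.1, 0.5),
    }


def validation_score(num_dense_layers, learning_rate, dropout_rate, optimizer, batch_size):
    """Hypothetical stand-in for training the model and returning a validation score.

    The best learning rate depends on depth, mimicking the interaction that makes
    learning rate a nuisance parameter. optimizer and batch_size are held fixed.
    """
    best_log_lr = -3.0 - 0.5 * (num_dense_layers - 1)
    lr_penalty = 0.05 * (math.log10(learning_rate) - best_log_lr) ** 2
    dropout_penalty = 0.1 * (dropout_rate - 0.3) ** 2
    depth_bonus = {1: 0.0, 2: 0.03, 3: 0.02}[num_dense_layers]
    return 0.8 + depth_bonus - lr_penalty - dropout_penalty


def run_tuning_round(n_trials=50, seed=0):
    """For each scientific value, search the nuisance space and keep the best trial."""
    results = {}
    for n_layers in SCIENTIFIC["num_dense_layers"]:
        rng = random.Random(seed + n_layers)
        best = None
        for _ in range(n_trials):
            nuisance = sample_nuisance(rng)
            score = validation_score(n_layers, **nuisance, **FIXED)
            if best is None or score > best[0]:
                best = (score, nuisance)
        results[n_layers] = best
    return results


for n_layers, (score, params) in run_tuning_round().items():
    print(n_layers, round(score, 4), params)
```

Each layer count is compared at its own best learning rate and dropout rate. Comparing all depths at one shared learning rate would confound depth with how well that rate happens to suit each depth.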

Troubleshooting Guide:

- Unstable Training Curves: This is frequently caused by an untuned learning rate or other optimizer parameters. Reclassify learning rate as a nuisance parameter and tune it separately for each major architectural change (scientific parameter) [47].

- Conflicting Results Between Studies: Often due to fixing a hyperparameter that has a significant interaction with the scientific parameter. For example, fixing the weight decay strength when comparing model sizes can lead to incorrect conclusions. Re-run the experiment, treating the interacting parameter as a nuisance [47].

How can I implement real-time detection of ASD-related behaviors from video, and what architecture delivers the best performance?

Problem: Traditional manual observation of behaviors like hand flapping and body rocking is time-consuming and subjective. An automated, real-time solution is needed.

Solution: Implement a multi-layered system based on the YOLOv11 deep learning model for real-time body movement analysis [12].

Experimental Protocol:

- Dataset Curation: Collect and annotate a video dataset. A benchmark dataset includes 72 videos, yielding 13,640 images across four classes: hand_flapping, body_rocking, head_shaking, and non_autistic. Validation by certified autism specialists is crucial for ground truth [12].

- System Architecture: Implement a pipeline with four layers:

- Monitoring Layer: Captures live video stream from a camera.

- Network Layer: Handles wireless data transfer to a processing unit.

- Cloud Layer: Performs the core model inference.

- ASD-Typical Behavior Detection Layer: Runs the YOLOv11 model to classify behaviors in the video frames [12].

- Model Training and Evaluation: Train the YOLOv11 model, leveraging its EfficientRepNet backbone and C2PSA modules. Compare its performance against baseline models like CNN (MobileNet-SSD) and LSTM using standard metrics [12].

| Model | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|
| YOLOv11 (Proposed) | 99% | 96% | 97% | 97% |
| CNN (MobileNet-SSD) | Lower | Lower | Lower | Lower |
| LSTM | Lower | Lower | Lower | Lower |

Table: Performance comparison for ASD-typical behavior detection, adapted from [12].
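The 13,640 images are frames drawn from only 72 videos, and consecutive frames are often near-duplicates. A frame-level random split can therefore inflate validation scores in the same way as the patient-level leakage discussed earlier. Below is a minimal sketch of a video-level split in plain Python, assuming a hypothetical directory layout `<class>/<video_id>/<frame>.jpg`. Hashing each video ID keeps the assignment stable across machines and reruns.

```python
import hashlib
from collections import defaultdict


def video_level_split(frame_paths, val_fraction=0.2):
    """Assign whole videos to train or val so no video contributes frames to both."""
    by_video = defaultdict(list)
    for path in frame_paths:
        class_name, video_id, _frame = path.split("/")[-3:]  # assumed layout
        by_video[(class_name, video_id)].append(path)

    train, val = [], []
    for key in sorted(by_video):
        digest = hashlib.sha256("/".join(key).encode("utf-8")).hexdigest()
        bucket = int(digest[:8], 16) / 0xFFFFFFFF  # deterministic value in [0, 1]
        (val if bucket < val_fraction else train).extend(by_video[key])
    return train, val


# Hypothetical file list: 72 videos spread over the four classes, 10 frames each.
classes = ["hand_flapping", "body_rocking", "head_shaking", "non_autistic"]
frames = [
    f"{cls}/video_{v:02d}/frame_{i:04d}.jpg"
    for v in range(72)
    for cls in [classes[v % 4]]
    for i in range(10)
]
train, val = video_level_split(frames)
print(len(train), len(val))
```

Hash-based assignment produces only an approximate `val_fraction`. If exact per-class proportions matter, sort the videos within each class and assign them in a fixed ratio instead.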

Troubleshooting Guide: